原创文章,转载请注明: 转载自慢慢的回味

本文链接地址: Apollo自动驾驶的点云CNN分割

点云分割的主要方法为:1、映射3D点云到2D的索引图上,对每个索引图上的像素点里面的点云进行高度,强度,数量的统计;2、对2D索引图进行CNN推断,从而得到每个像素点倾向的中心点,方向,所属类型的概率等;3、通过SPP引擎集群2D索引图上的像素点;4、对每个集群里的每个像素点求所属类型概率的平均值,从而得到集群所属的类型,点云的范围,中心点,高度等。从而完成了物体的识别。

这儿以单元测试cnn_segmentation_test.cc的测试cnn_segmentation_sequence_test为例来分析Apollo自动驾驶的点云CNN分割。

Content:

CNN分割测试用例

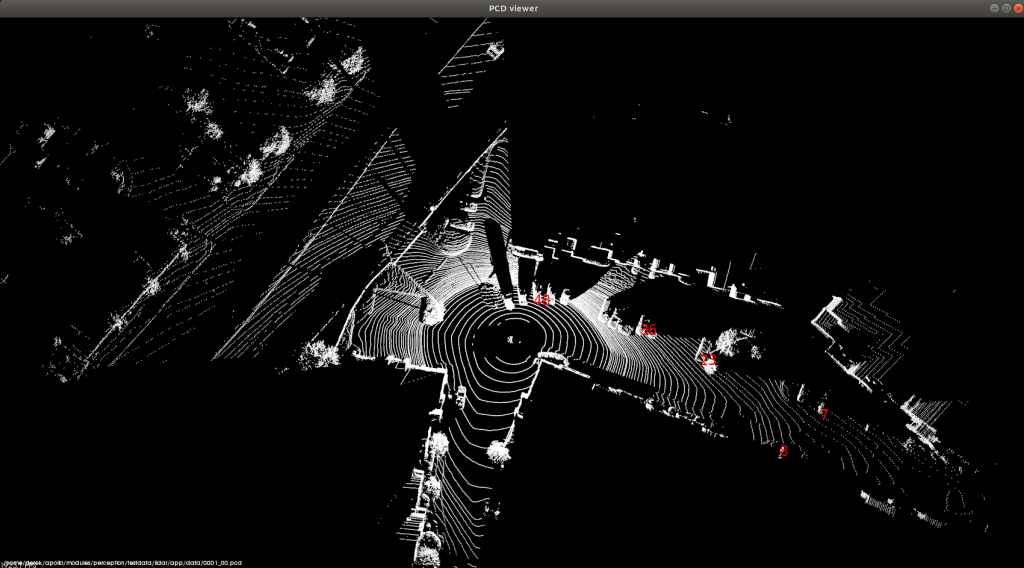

TEST(CNNSegmentationTest, cnn_segmentation_sequence_test) { unsetenv("CYBER_PATH"); unsetenv("MODULE_PATH"); //设置工作目录和配置文件路经 FLAGS_work_root = "/apollo/modules/perception/testdata/" "lidar/lib/segmentation/cnnseg/"; FLAGS_config_manager_path = "../../../../../production/conf"; //实例化一个CNN分割类实例 auto segmentation = std::shared_ptr<cnnsegmentation>(new CNNSegmentation); LidarDetectorOptions options; EXPECT_FALSE(segmentation->Detect(options, nullptr)); LidarFrame frame_data; EXPECT_FALSE(segmentation->Detect(options, &frame_data)); frame_data.cloud = base::PointFCloudPool::Instance().Get(); frame_data.world_cloud = base::PointDCloudPool::Instance().Get(); EXPECT_FALSE(segmentation->Detect(options, &frame_data)); //初始化CNN分割实例,包括参数加载,特征提取初始化,CNN推测初始化。见下面详细注解。 EXPECT_TRUE(segmentation->Init()); EXPECT_TRUE(segmentation->InitClusterAndBackgroundSegmentation()); std::string pcd_path = "/apollo/modules/perception/testdata/lidar/app/data/"; std::vector<std::string> pcd_file_names; common::GetFileList(pcd_path, ".pcd", &pcd_file_names); std::string file_name; std::sort(pcd_file_names.begin(), pcd_file_names.end(), [](const std::string& lhs, const std::string& rhs) { if (lhs.length() < rhs.length()) { return true; } else if (lhs.length() == rhs.length()) { return lhs <= rhs; } else { return false; } }); for (size_t i = 0; i < pcd_file_names.size(); ++i) { std::shared_ptr<lidarframe> frame(new LidarFrame); frame->cloud = base::PointFCloudPool::Instance().Get(); frame->world_cloud = base::PointDCloudPool::Instance().Get(); //加载点云文件,比如这儿为/apollo/modules/perception/testdata/lidar/app/data/0001_00.pcd,可视化的结果如下图 if (!LoadPCDFile(pcd_file_names[i], frame->cloud)) { continue; } frame->world_cloud->resize(frame->cloud->size()); //侦测当前帧中的对象,见下面代码详解 EXPECT_TRUE(segmentation->Detect(options, frame.get())); //打印侦测到的对象信息,见下面代码详解 PrintObjects((frame.get())->segmented_objects); } } </lidarframe></std::string></cnnsegmentation> |

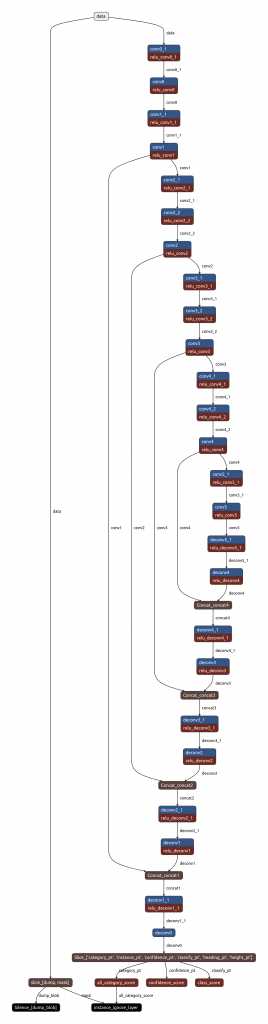

CNN分割初始化

bool CNNSegmentation::Init(const LidarDetectorInitOptions& options) { // get configs std::string param_file; std::string proto_file; std::string weight_file; std::string engine_file; if (!FLAGS_lidar_model_version.empty()) { sensor_name_ = FLAGS_lidar_model_version; } else { sensor_name_ = options.sensor_name; } CHECK(GetConfigs(¶m_file, &proto_file, &weight_file, &engine_file)); AINFO << "-- param_file: " << param_file; AINFO << "-- proto_file: " << proto_file; AINFO << "-- weight_file: " << weight_file; AINFO << "-- engine_file: " << engine_file; //获取CNN分割参数和SPP(分割点云处理器?)引擎参数 //CNN负责对2D图像上的每个单元格进行分类预测,方向预测 //SPP通过聚类算法把单元格进行聚类 // get cnnseg params ACHECK(GetProtoFromFile(param_file, &cnnseg_param_)) << "Failed to parse CNNSegParam config file." << param_file; ACHECK(GetProtoFromFile(engine_file, &spp_engine_config_)) << "Failed to parse SppEngine config file." << engine_file; //width_和height_是点云映射成2D索引图point2grid_的宽度和高度,range_是点云的最远距离 // init feature parameters const FeatureParam& feature_param = cnnseg_param_.feature_param(); //这个范围内的点云才有效 range_ = feature_param.point_cloud_range();//70 //所有点云最终映射到2D图像的宽度和高度 width_ = feature_param.width();//672 height_ = feature_param.height();//672 //点云的有效高度,lidar距地面有一定高度 min_height_ = feature_param.min_height();//-5 max_height_ = feature_param.max_height();//5 //初始化推测模型,这个模型类似与UNet网络,模型见下图。 //网络模型在data/perception/lidar/models/cnnseg/velodyne64/deploy.prototxt //网络输入input_names为data,为形状[1,6,672,672]的2D索引图point2grid_,其中6个通道依次存放的数据为point2grid_中每一格的数据: //max_height_data_:映射到此单元格的所有点云的最大高度; //mean_height_data_:映射到此单元格的所有点云的平均高度; //count_data_:映射到此单元格的所有点云的数量; //nonempty_data_:表明此单元格非空; //top_intensity_data_:映射到此单元格的所有点云的最大强度,强度有助于判断物体; //mean_intensity_data_:映射到此单元格的所有点云的平均强度。 //上面的特征数据是由feature_generator_提取的。 //网络的输出为反卷集deconv0,形状为[1,12,672,672],其中12个通道为如下数据: //category_pt:0,表示属于什么类型; //instance_pt:1-2,中心点的位置r,c; //confidence_pt:3,置信度; //classify_pt:4-8,属于某种分类的概率; //heading_pt:9-10,方向; //height_pt:11,高度。 // init inference model const NetworkParam& network_param = cnnseg_param_.network_param(); std::vector<std::string> output_names; output_names.push_back(network_param.instance_pt_blob()); output_names.push_back(network_param.category_pt_blob()); output_names.push_back(network_param.confidence_pt_blob()); output_names.push_back(network_param.height_pt_blob()); output_names.push_back(network_param.heading_pt_blob()); output_names.push_back(network_param.class_pt_blob()); std::vector<std::string> input_names; input_names.push_back(network_param.feature_blob()); inference_.reset(inference::CreateInferenceByName(cnnseg_param_.model_type(), proto_file, weight_file, output_names, input_names)); CHECK_NOTNULL(inference_.get()); gpu_id_ = cnnseg_param_.has_gpu_id() ? cnnseg_param_.gpu_id() : -1; BASE_CUDA_CHECK(cudaSetDevice(gpu_id_)); inference_->set_gpu_id(gpu_id_); // inference sets CPU mode when -1 //这儿没有开启constant_feature std::map<std::string, std::vector<int>> input_shapes; auto& input_shape = input_shapes[network_param.feature_blob()]; input_shape = {1, 8, height_, width_}; if (!feature_param.use_intensity_feature()) { input_shape[1] -= 2; } if (!feature_param.use_constant_feature()) { input_shape[1] -= 2; } ACHECK(inference_->Init(input_shapes)) << "Failed to init inference."; //连接网络的输入输出到变量上 // init blobs instance_pt_blob_ = inference_->get_blob(network_param.instance_pt_blob()); CHECK_NOTNULL(instance_pt_blob_.get()); category_pt_blob_ = inference_->get_blob(network_param.category_pt_blob()); CHECK_NOTNULL(category_pt_blob_.get()); confidence_pt_blob_ = inference_->get_blob(network_param.confidence_pt_blob()); CHECK_NOTNULL(confidence_pt_blob_.get()); height_pt_blob_ = inference_->get_blob(network_param.height_pt_blob()); CHECK_NOTNULL(height_pt_blob_.get()); feature_blob_ = inference_->get_blob(network_param.feature_blob()); CHECK_NOTNULL(feature_blob_.get()); if (cnnseg_param_.do_classification()) { classify_pt_blob_ = inference_->get_blob(network_param.class_pt_blob()); CHECK_NOTNULL(classify_pt_blob_.get()); } if (cnnseg_param_.do_heading()) { heading_pt_blob_ = inference_->get_blob(network_param.heading_pt_blob()); CHECK_NOTNULL(heading_pt_blob_.get()); } //初始化特征提取器 // init feature generator feature_generator_.reset(new FeatureGenerator); ACHECK(feature_generator_->Init(feature_param, feature_blob_.get())) << "Failed to init feature generator."; point2grid_.reserve(kDefaultPointCloudSize); //初始化SPP集群,并对点云进行背景分割,根据已知地面信息去掉表示地面的点云 // init cluster and background segmentation methods ACHECK(InitClusterAndBackgroundSegmentation()); return true; } |

侦测当前帧中的对象

bool CNNSegmentation::Detect(const LidarDetectorOptions& options, LidarFrame* frame) { ...... // record input cloud and lidar frame original_cloud_ = frame->cloud; original_world_cloud_ = frame->world_cloud; lidar_frame_ref_ = frame; // check output frame->segmented_objects.clear(); worker_.WakeUp(); // note we should use origninal cloud here, frame->cloud may be exchanged Timer timer; //映射3D点云到2D的索引图上,这样可以利用图像CNN分割的方法来对点云进行分割 //不能直接对3D点云进行训练推断,那样的话参数太多,且单独的一个点云并不具备实际意义。 // map 3d points to 2d image grids MapPointToGrid(original_cloud_); mapping_time_ = timer.toc(true); if (cudaSetDevice(gpu_id_) != cudaSuccess) { AERROR << "Failed to set device to " << gpu_id_; return false; } //特征提取器提取点云特征信息,见下面详细注解 //对映射后的点云进行特征提取 // generate features feature_generator_->Generate(original_cloud_, point2grid_); feature_time_ = timer.toc(true); //CNN模型推测,得到每个像素点倾向的中心点,方向,所属类型的概率等。 // model inference inference_->Infer(); infer_time_ = timer.toc(true); //处理CNN模型推测的集群信息,见下面详细注解 //从SPP引擎中获取侦测到的对象 // processing clustering GetObjectsFromSppEngine(&frame->segmented_objects); AINFO << "CNNSEG: mapping: " << mapping_time_ << "\t" << " feature: " << feature_time_ << "\t" << " infer: " << infer_time_ << "\t" << " fg-seg: " << fg_seg_time_ << "\t" << " join: " << join_time_ << "\t" << " collect: " << collect_time_; return true; } |

特征提取器提取点云特征信息

这儿是利用CUDA进行平行快速计算的。

void FeatureGenerator::GenerateGPU(const base::PointFCloudPtr& pc_ptr, const std::vector<int>& point2grid) { //在GPU里面初始化上面提到的6个通道数据 // fill initial value for feature blob int map_size = width_ * height_; int block_size = (map_size + kGPUThreadSize - 1) / kGPUThreadSize; SetKernel<float> <<<block_size, kGPUThreadSize>>>(map_size, -5.f, max_height_data_); BASE_CUDA_CHECK(cudaMemset(mean_height_data_, 0.f, sizeof(float) * map_size)); BASE_CUDA_CHECK(cudaMemset(count_data_, 0.f, sizeof(float) * map_size)); BASE_CUDA_CHECK(cudaMemset(nonempty_data_, 0.f, sizeof(float) * map_size)); if (use_intensity_feature_) { BASE_CUDA_CHECK( cudaMemset(top_intensity_data_, 0.f, sizeof(float) * map_size)); BASE_CUDA_CHECK( cudaMemset(mean_intensity_data_, 0.f, sizeof(float) * map_size)); } //复制点云数据和2D索引图像point2grid到GPU内存中,因为CNN网络是利用CUDA运行的。 // copy cloud data and point2grid from CPU to GPU memory size_t cloud_size = pc_ptr->size(); if (cloud_size > pc_gpu_size_) { // cloud data BASE_CUDA_CHECK(cudaFree(pc_gpu_)); BASE_CUDA_CHECK(cudaMalloc(reinterpret_cast<void**>(&pc_gpu_), cloud_size * sizeof(base::PointF))); pc_gpu_size_ = cloud_size; // point2grid BASE_CUDA_CHECK(cudaFree(point2grid_gpu_)); BASE_CUDA_CHECK(cudaMalloc(reinterpret_cast<void**>(&point2grid_gpu_), cloud_size * sizeof(int))); } BASE_CUDA_CHECK(cudaMemcpy(pc_gpu_, &(pc_ptr->front()), sizeof(base::PointF) * cloud_size, cudaMemcpyHostToDevice)); BASE_CUDA_CHECK(cudaMemcpy(point2grid_gpu_, point2grid.data(), sizeof(int) * cloud_size, cudaMemcpyHostToDevice)); //利用GPU计算特征值,MapKernel,TopIntensityKernel,AverageKernel函数都是CUDA的全局函数,在GPU上面执行。 //<<<block_size, kGPUThreadSize>>>表示这个函数需要在多少个block上面执行,每个block需要执行多少个线程(次)。 //即cloud_size个3D点都会并行执行。可参考《CUDA C编程权威指南 》详细了解。 //MapKernel方法展开后如下,CUDA由一个内核启动所产生的所有线程统称一个网格(Grid),同一网格中的所有线程共享相同的全局内存空间。 //向下一级,一个网格由多个线程块(Block)构成。再下一级,一个线程块由一组线程(Thread)构成。 //线程网格和线程块从逻辑上代表了一个核函数的线程层次结构,这种组织方式可以帮助我们有效地利用资源,优化性能。 //threadIdx是一个uint3类型,表示一个线程的索引。 //blockIdx是一个uint3类型,表示一个线程块的索引,一个线程块中通常有多个线程。 //blockDim是一个dim3类型,表示线程块的大小。 //gridDim是一个dim3类型,表示网格的大小,一个网格中通常有多个线程块。 //下面的函数MapKernel每次会同时在block_size X kGPUThreadSize个cuda核上运行 //template <typename Dtype> //__global__ void MapKernel(const int n, const base::PointF* pc, // Dtype* max_height_data, Dtype* mean_height_data, // Dtype* mean_intensity_data, Dtype* count_data, // int* point2grid) { // for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < (n); i += blockDim.x * gridDim.x) { // int idx = point2grid[i]; // if (idx == -1) { // continue; // } // Dtype pz = pc[i].z; // Dtype pi = pc[i].intensity / 255.0; //计算最大高度值 // atomicMax(&max_height_data[idx], pz); //统计高度值,下一步AverageKernel求出平均值 // atomicAdd(&mean_height_data[idx], pz); // if (mean_intensity_data != nullptr) { // atomicAdd(&mean_intensity_data[idx], pi); // } // atomicAdd(&count_data[idx], (Dtype)1); // } //} // compute features // float inv_res_x = 0.5 * width_ / range_; // float inv_res_y = 0.5 * height_ / range_; { int block_size = (cloud_size + kGPUThreadSize - 1) / kGPUThreadSize; MapKernel<float><<<block_size, kGPUThreadSize>>>( cloud_size, pc_gpu_, max_height_data_, mean_height_data_, mean_intensity_data_, count_data_, point2grid_gpu_); TopIntensityKernel<float><<<block_size, kGPUThreadSize>>>( cloud_size, top_intensity_data_, pc_gpu_, max_height_data_, point2grid_gpu_); } { int block_size = (map_size + kGPUThreadSize - 1) / kGPUThreadSize; float* log_table = log_blob_->mutable_gpu_data() + log_blob_->offset(0, 0); AverageKernel<float><<<block_size, kGPUThreadSize>>>( map_size, count_data_, max_height_data_, mean_height_data_, mean_intensity_data_, nonempty_data_, log_table, kMaxLogNum); } } |

处理CNN模型推测的集群信息

void CNNSegmentation::GetObjectsFromSppEngine( std::vector<std::shared_ptr<Object>>* objects) { Timer timer; spp_engine_.GetSppData().grid_indices = point2grid_.data(); //处理前景分割对象 size_t num_foreground = spp_engine_.ProcessForegroundSegmentation(original_cloud_); fg_seg_time_ = timer.toc(true); // should sync with worker before do background segmentation worker_.Join(); join_time_ = timer.toc(true); // copy height from roi cloud to origin cloud, // note ground points include other noise points // filtered by ground detection post process AINFO << "Use origin cloud and copy height"; for (std::size_t i = 0; i < lidar_frame_ref_->roi_indices.indices.size(); ++i) { const int roi_id = lidar_frame_ref_->roi_indices.indices[i]; original_cloud_->mutable_points_height()->at(roi_id) = roi_cloud_->points_height(i); if (roi_cloud_->mutable_points_label()->at(i) == static_cast<uint8_t>(LidarPointLabel::GROUND)) { original_cloud_->mutable_points_label()->at(roi_id) = roi_cloud_->points_label().at(i); } } memcpy(&original_world_cloud_->mutable_points_height()->at(0), &original_cloud_->points_height().at(0), sizeof(float) * original_cloud_->size()); memcpy(&original_world_cloud_->mutable_points_label()->at(0), &original_cloud_->points_label().at(0), sizeof(uint8_t) * original_cloud_->size()); if (cnnseg_param_.remove_ground_points()) { num_foreground = spp_engine_.RemoveGroundPointsInForegroundCluster( original_cloud_, lidar_frame_ref_->roi_indices, lidar_frame_ref_->non_ground_indices); if (num_foreground == 0) { ADEBUG << "No foreground segmentation output"; } } const auto& clusters = spp_engine_.clusters(); objects->clear(); base::ObjectPool::Instance().BatchGet(clusters.size(), objects); size_t valid = 0; // prepare for valid point cloud for seconary segmentor // after removing pts from primary segmentor, ground and non roi pts /*CloudMask mask; if (cnnseg_param_.fill_recall_with_ncut()) { mask.Set(original_cloud_.size(), 0); mask.AddIndicesOfIndices(lidar_frame_ref->roi_indices, lidar_frame_ref->non_ground_indices, 1); }*/ //循环所有集群,过滤掉点云数少的集群,生成对象列表。 for (int i = 0; i < static_cast<int>(clusters.size()); ++i) { if (clusters[i]->points.size() <= cnnseg_param_.min_pts_num() && clusters[i]->pixels.size() < cnnseg_param_.min_pts_num()) { continue; } auto& cluster = clusters[i]; auto& object = objects->at(valid); object->lidar_supplement.num_points_in_roi = cluster->points_in_roi; object->lidar_supplement.on_use = true; object->lidar_supplement.is_background = false; //复制点云信息到侦测到的对象上 object->lidar_supplement.cloud.CopyPointCloud(*original_cloud_, cluster->point_ids); object->lidar_supplement.cloud_world.CopyPointCloud(*original_world_cloud_, cluster->point_ids); object->confidence = cluster->confidence; object->id = static_cast<int>(valid); //计算所属每个分类的概率 if (cnnseg_param_.do_classification()) { object->lidar_supplement.raw_probs.push_back(std::vector<float>( static_cast<int>(base::ObjectType::MAX_OBJECT_TYPE), 0.f)); object->lidar_supplement.raw_classification_methods.push_back(Name()); object->lidar_supplement.raw_probs .back()[static_cast<int>(base::ObjectType::UNKNOWN)] = cluster->class_prob[static_cast<int>(MetaType::META_UNKNOWN)]; object->lidar_supplement.raw_probs .back()[static_cast<int>(base::ObjectType::PEDESTRIAN)] = cluster->class_prob[static_cast<int>(MetaType::META_PEDESTRIAN)]; object->lidar_supplement.raw_probs .back()[static_cast<int>(base::ObjectType::BICYCLE)] = cluster->class_prob[static_cast<int>(MetaType::META_NONMOT)]; object->lidar_supplement.raw_probs .back()[static_cast<int>(base::ObjectType::VEHICLE)] = cluster->class_prob[static_cast<int>(MetaType::META_SMALLMOT)] + cluster->class_prob[static_cast<int>(MetaType::META_BIGMOT)]; // copy to type object->type_probs.assign( object->lidar_supplement.raw_probs.back().begin(), object->lidar_supplement.raw_probs.back().end()); object->type = static_cast<base::ObjectType>( std::distance(object->type_probs.begin(), std::max_element(object->type_probs.begin(), object->type_probs.end()))); } //计算其方向 if (cnnseg_param_.do_heading()) { // object->theta = cluster->yaw; // object->direction[0] = cos(cluster->yaw); // object->direction[1] = sin(cluster->yaw); // 2018.6.21, switch to axis rotated projection // should be reverted after retrain model. static const float quater_pi = static_cast<float>(M_PI) * 0.25f; object->theta = cluster->yaw - quater_pi; object->direction[0] = cosf(cluster->yaw - quater_pi); object->direction[1] = sinf(cluster->yaw - quater_pi); object->direction[2] = 0; object->lidar_supplement.is_orientation_ready = true; } ++valid; } objects->resize(valid); // add additional object seg logic with ncut if cnnseg miss detects /*if (cnnseg_param_.fill_recall_with_ncut() && secondary_segmentor) { mask.GetValidIndices(lidar_frame_ref_->secondary_indices); secondary_segmentor->Segment(SegmentationOptions(), lidar_frame_ref_); //segment based on lidar frame ref }*/ collect_time_ = timer.toc(true); } |

处理前景分割对象

size_t SppEngine::ProcessConnectedComponentCluster( const base::PointFCloudConstPtr point_cloud, const CloudMask& mask) { Timer timer; data_.category_pt_blob->cpu_data(); data_.instance_pt_blob->cpu_data(); double sync_time1 = timer.toc(true); worker_.WakeUp(); //调用并查集算法构建节点,合并节点。 size_t num = detector_2d_cc_.Detect(&labels_2d_); if (num == 0) { ADEBUG << "No object detected"; // Later will decide if return this function here } double detect_time = timer.toc(true); worker_.Join(); double sync_time2 = timer.toc(true); // labels_2d_.FilterClusters(data.confidence_data, // data.confidence_threshold); // 2018.6.21 filter use category data to reserve long range objects // should be reverted after retrain model //过滤掉一些置信率不高的集群 labels_2d_.FilterClusters(data_.confidence_data, data_.obs_prob_data_ref[0], data_.confidence_threshold, data_.objectness_threshold); double filter_time = timer.toc(true); //计算每个集群的分类概率信息,具体见下一节 SPP索引图处理 if (data_.class_prob_data != nullptr) { labels_2d_.CalculateClusterClass(data_.class_prob_data, data_.class_num); } //计算每个集群的朝向,具体见下一节 SPP索引图处理 if (data_.heading_data != nullptr) { labels_2d_.CalculateClusterHeading(data_.heading_data); } //计算每个集群的最大高度,具体见下一节 SPP索引图处理 if (data_.z_data != nullptr) { labels_2d_.CalculateClusterTopZ(data_.z_data); } double chz_time = timer.toc(true); // 2. process 2d to 3d // first sync between cluster list and label image, // and they shared the same cluster pointer //关联集群的3D点云 clusters_ = labels_2d_; for (size_t i = 0; i < point_cloud->size(); ++i) { if (mask.size() && mask[static_cast<int>(i)] == 0) { continue; } // out of range const int id = data_.grid_indices[i]; if (id < 0) { continue; } const auto& point = point_cloud->at(i); const uint16_t& label = labels_2d_[0][id]; if (!label) { continue; } if (point.z <= labels_2d_.GetCluster(label - 1)->top_z + data_.top_z_threshold) { //给每个集群分配3D点云,最后阶段的复制点云信息到侦测对象上会利用point_ids //void SppClusterList::AddPointSample(size_t cluster_id, // const base::PointF& point, float height, // uint32_t point_id) { // if (clusters_.size() <= cluster_id) { // resize(cluster_id + 1); // } // clusters_[cluster_id]->AddPointSample(point, height, point_id); //} // inline void AddPointSample(const base::PointF& point, float height, // uint32_t point_id) { // points.push_back(SppPoint(point, height)); // point_ids.push_back(point_id); // } clusters_.AddPointSample(label - 1, point, point_cloud->points_height(i), static_cast<uint32_t>(i)); } } double mapping_time = timer.toc(true); //删除空集群 // 5. remove empty clusters clusters_.RemoveEmptyClusters(); double remove_time = timer.toc(true); AINFO << "SegForeground: sync1 " << sync_time1 << "\tdetect: " << detect_time << "\tsync2: " << sync_time2 << "\tfilter: " << filter_time << "\tchz: " << chz_time << "\tmapping: " << mapping_time << "\tremove: " << remove_time; return clusters_.size(); } size_t SppEngine::ProcessForegroundSegmentation( const base::PointFCloudConstPtr point_cloud) { mask_.clear(); ProcessConnectedComponentCluster(point_cloud, mask_); AINFO << "Foreground: " << clusters_.size() << " clusters"; return clusters_.size(); } |

调用并查集算法构建节点,合并节点

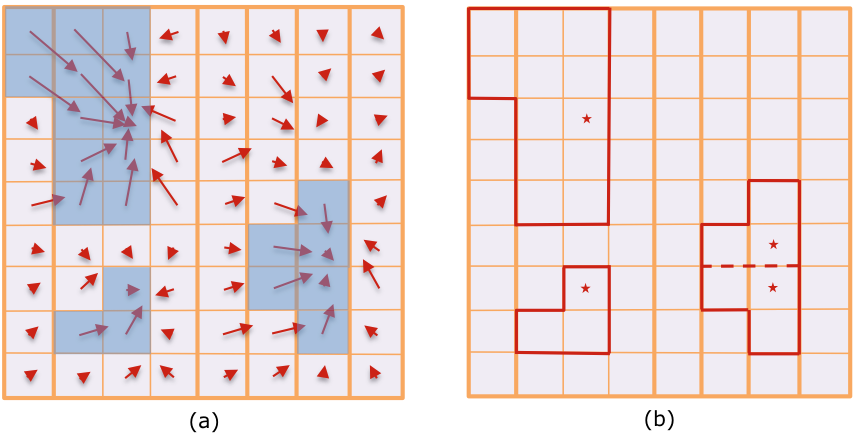

CNN算法输出的结果类似于如图,它标出了每个节点的中心节点

CNN算法输出的结果类似于如图,它标出了每个节点的中心节点

Apollo基于单元格中心偏移,预测构建有向图,并搜索连接的组件作为候选对象集群。每个单元格是图的一个节点,并且基于单元格的中心偏移预测构建有向边,其指向对应于另一单元的父节点,由offset_map_ 对象提供数据。

(a) 红色箭头表示每个单元格对象中心偏移预测;蓝色填充对应于物体概率不小于0.5的对象单元。

(b) 固体红色多边形内的单元格组成候选对象集群。其中右边的2个五角星相邻,所以UnionNodes方法会把它们合并。

由五角星填充的红色范围表示对应于连接组件子图的根节点(单元格)。一个候选对象集群可以由其根节点彼此相邻的多个相邻连接组件组成。

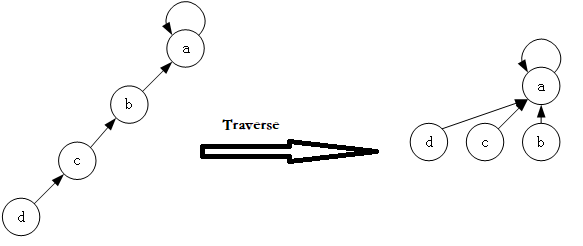

巡回所有的节点TraverseNodes,压缩每个节点的路径

巡回所有的节点TraverseNodes,压缩每个节点的路径

//利用并查集算法构建节点,合并节点 size_t SppCCDetector::Detect(SppLabelImage* labels) { Timer timer; if (!first_process_) { worker_.Join(); // sync for cleaning nodes } first_process_ = false; //构建节点(索引图中的每个像素点即单元格),设置每个节点的中心节点 BuildNodes(0, rows_); double init_time = timer.toc(true); double sync_time = timer.toc(true); //巡回所有的节点,根据中心节点,设在所有节点的父节点为最终的中心节点 TraverseNodes(); double traverse_time = timer.toc(true); //找到所有的root节点,并合并它们成一个大的集群 UnionNodes(); double union_time = timer.toc(true); size_t num = ToLabelMap(labels); worker_.WakeUp(); // for next use double collect_time = timer.toc(true); AINFO << "SppSegCC2D: init: " << init_time << "\tsync: " << sync_time << "\ttraverse: " << traverse_time << "\tunion: " << union_time << "\tcollect: " << collect_time << "\t#obj: " << num; return num; } //构建节点(索引图中的每个像素点即单元格),设置每个节点的中心节点 //通过CNN分割的输出offset_map_获取每个节点的中心节点(单元格) bool SppCCDetector::BuildNodes(int start_row_index, int end_row_index) { const float* offset_row_ptr = offset_map_ + start_row_index * cols_; const float* offset_col_ptr = offset_map_ + (rows_ + start_row_index) * cols_; const float* prob_map_ptr = prob_map_[0] + start_row_index * cols_; Node* node_ptr = nodes_[0] + start_row_index * cols_; for (int row = start_row_index; row < end_row_index; ++row) { for (int col = 0; col < cols_; ++col) { node_ptr->set_is_object(*prob_map_ptr++ >= objectness_threshold_); int center_row = static_cast<int>(*offset_row_ptr++ * scale_ + static_cast<float>(row) + 0.5f); int center_col = static_cast<int>(*offset_col_ptr++ * scale_ + static_cast<float>(col) + 0.5f); center_row = std::max(0, std::min(rows_ - 1, center_row)); center_col = std::max(0, std::min(cols_ - 1, center_col)); (node_ptr++)->center_node = center_row * cols_ + center_col; } } return true; } //巡回所有的节点,根据中心节点,设在所有节点的父节点为最终的中心节点 //每个节点的4个属性node_rank, traversed, is_center和is_object被压缩在一个16位的 //变量uint16_t status里面,结构表示为: //|is_center(1bit)|is_object(1bit)|traversed(3bit)|node_rank(11bit)| void SppCCDetector::TraverseNodes() { for (int row = 0; row < rows_; row++) { for (int col = 0; col < cols_; col++) { Node& node = nodes_[row][col]; if (node.is_object() && node.get_traversed() == 0) { Traverse(&node); } } } } void SppCCDetector::Traverse(SppCCDetector::Node* x) { std::vector<SppCCDetector::Node*> p; p.clear(); //通过节点的中心节点,迭代到最终的中心节点 while (x->get_traversed() == 0) { p.push_back(x); x->set_traversed(2); x = nodes_[0] + x->center_node; } if (x->get_traversed() == 2) { for (int i = static_cast<int>(p.size()) - 1; i >= 0 && p[i] != x; i--) { p[i]->set_is_center(true); } x->set_is_center(true); } //把找到的所有节点的父节点设置为最终节点的父节点 for (size_t i = 0; i < p.size(); i++) { Node* y = p[i]; y->set_traversed(1); y->parent = x->parent; } } //找到所有的root节点,并合并它们成一个更大的集群,便于集群里所有节点搜索 //如果root节点相邻就合并它们,因为它们就是同一个集群,即同一个对象 void SppCCDetector::UnionNodes() { for (int row = 0; row < rows_; ++row) { for (int col = 0; col < cols_; ++col) { Node* node = &nodes_[row][col]; if (!node->is_center()) { continue; } Node* node_neighbor = nullptr; // right if (col < cols_ - 1) { node_neighbor = &nodes_[row][col + 1]; if (node_neighbor->is_center()) { DisjointSetUnion(node, node_neighbor); } } // down if (row < rows_ - 1) { node_neighbor = &nodes_[row + 1][col]; if (node_neighbor->is_center()) { DisjointSetUnion(node, node_neighbor); } } // right down if (row < rows_ - 1 && col < cols_ - 1) { node_neighbor = &nodes_[row + 1][col + 1]; if (node_neighbor->is_center()) { DisjointSetUnion(node, node_neighbor); } } // left down if (row < rows_ - 1 && col > 0) { node_neighbor = &nodes_[row + 1][col - 1]; if (node_neighbor->is_center()) { DisjointSetUnion(node, node_neighbor); } } } } } //迭代每个节点,找到每个节点的根节点并设置 size_t SppCCDetector::ToLabelMap(SppLabelImage* labels) { uint16_t id = 0; uint32_t pixel_id = 0; labels->ResetClusters(kDefaultReserveSize); for (int row = 0; row < rows_; ++row) { for (int col = 0; col < cols_; ++col, ++pixel_id) { Node* node = &nodes_[row][col]; if (!node->is_object()) { (*labels)[row][col] = 0; continue; } Node* root = DisjointSetFind(node); // note label in label image started from 1, // zero is reserved from non-object if (!root->id) { root->id = ++id; } //设置每个单元格的根结点ID (*labels)[row][col] = root->id; //添加每个单元格到所属的每个集群里 labels->AddPixelSample(root->id - 1, pixel_id); } } labels->ResizeClusters(id); return id; } |

SPP索引图处理

//计算集群的分类 void SppLabelImage::CalculateClusterClass(const float* class_map, size_t class_num) { //初始化cluster->class_prob,大小为class_num for (auto& cluster : clusters_) { cluster->class_prob.assign(class_num, 0.f); } size_t size = width_ * height_; for (size_t c = 0; c < class_num; ++c) { const float* class_map_ptr = class_map + c * size; for (auto& cluster : clusters_) { //累加每一个集群里面所有的单元格所属每一个分类的概率 auto& probs = cluster->class_prob; for (auto& pixel : cluster->pixels) { probs[c] += class_map_ptr[pixel]; } } } for (auto& cluster : clusters_) { //利用上面的累加,计算每一个集群所属每一个分类的概率 auto& probs = cluster->class_prob; float sum = std::accumulate(probs.begin(), probs.end(), 0.f); if (sum > 1e-9) { for (auto& value : probs) { value /= sum; } } //概率probs的下标对应分类的标号,所以概率最大的元素的下标就是此集群所属的分类 //enum class SppClassType { // OTHERS = 0, // SMALLMOT = 1, // BIGMOT = 2, // CYCLIST = 3, // PEDESTRIAN = 4, // CONE = 5, // MAX_TYPE = 6, //}; cluster->type = static_cast<SppClassType>(std::distance( probs.begin(), std::max_element(probs.begin(), probs.end()))); } } //计算集群的方向 void SppLabelImage::CalculateClusterHeading(const float* heading_map) { const float* heading_map_x_ptr = heading_map; const float* heading_map_y_ptr = heading_map + width_ * height_; for (size_t n = 0; n < clusters_.size(); ++n) { float heading_x = 0.f, heading_y = 0.f; for (auto pixel : clusters_[n]->pixels) { heading_x += heading_map_x_ptr[pixel]; heading_y += heading_map_y_ptr[pixel]; } //每个集群内,平均所有单元格的角度。反正切求出。 clusters_[n]->yaw = std::atan2(heading_y, heading_x) * 0.5f; } } //求出每个集群的最大高度 void SppLabelImage::CalculateClusterTopZ(const float* top_z_map) { for (auto& cluster : clusters_) { float sum = 0.f; for (auto& pixel : cluster->pixels) { sum += top_z_map[pixel]; } sum = cluster->pixels.size() > 0 ? sum / static_cast<float>(cluster->pixels.size()) : sum; cluster->top_z = sum; } } |

打印侦测到的对象信息

-- Object 7 : Object [id: 7, track_id: -1, direction: (-0.390441,-0.920628,0), theta: -1.97191, theta_variance: 0, center: (0,0,0), center_uncertainty: (0,0,0,0,0,0,0,0,0), size: (0,0,0), size_variance: (0,0,0), anchor_point: (0,0,0), type: 5, confidence: 0.967627, track_id: -1, velocity: (0,0,0), velocity_confidence: 1, acceleration: (0,0,0), tracking_time: 0, latest_tracked_time: 0, type_probs: 0.00121037, 0, 0, 7.07104e-05, 0.000112207, 0.998607 *****First 30 pcs: 61.1489,-10.6766; 61.1171,-10.88; 61.0098,-11.0698; 60.7498,-11.659; 60.7925,-11.6672; 61.9524,-10.0874; 60.3771,-12.6398; 60.2857,-12.8405; 60.2115,-13.0336; 60.1178,-13.2222; 60.1328,-13.2255; 60.0518,-13.4276; 60.0317,-13.4231; 61.648,-13.9993; 59.9673,-13.6176; 61.6129,-14.2063; 59.9296,-13.8182; 59.8562,-14.0105; 60.5882,-12.4017; 60.5154,-12.596; 60.4263,-12.7867; 61.2955,-10.9537; 60.3227,-12.9849; 60.2559,-13.1798; 60.0814,-13.3505; 60.165,-13.3691; 60.0368,-13.5497; 60.0969,-13.5633; 61.0178,-13.9951; 60.0219,-13.7667; -- Object 8 : Object [id: 8, track_id: -1, direction: (-0.451645,-0.892198,0), theta: -2.0394, theta_variance: 0, center: (0,0,0), center_uncertainty: (0,0,0,0,0,0,0,0,0), size: (0,0,0), size_variance: (0,0,0), anchor_point: (0,0,0), type: 5, confidence: 0.966067, track_id: -1, velocity: (0,0,0), velocity_confidence: 1, acceleration: (0,0,0), tracking_time: 0, latest_tracked_time: 0, type_probs: 0.00387007, 0, 0, 0.000103251, 0.000834969, 0.995192 *****First 30 pcs: 52.9606,-21.4448; 52.8485,-21.6037; 52.9687,-21.8581; 53.0102,-22.0921; 52.9145,-22.2584; 53.6118,-21.6257; 52.9103,-21.5578; 52.3631,-23.2851; 52.2309,-23.4341; 52.6776,-21.8715; 52.5935,-22.0521; 52.5127,-22.2233; 52.9646,-21.4873; 52.9041,-21.6674; 52.7654,-21.8259; 52.6341,-21.9763; 52.6705,-21.9915; 52.5793,-22.1585; 52.5789,-22.1583; 52.4757,-22.32; 52.4158,-22.511; 52.1853,-23.0404; 52.9601,-21.6029; 52.9398,-21.5947; 52.8734,-21.7723; 52.0131,-23.3778; 52.7345,-21.9199; 52.6626,-21.8901; 52.6761,-22.1116; 52.6191,-22.0877; -- Object 23 : Object [id: 23, track_id: -1, direction: (-0.344406,-0.938821,0), theta: -1.9224, theta_variance: 0, center: (0,0,0), center_uncertainty: (0,0,0,0,0,0,0,0,0), size: (0,0,0), size_variance: (0,0,0), anchor_point: (0,0,0), type: 5, confidence: 0.969238, track_id: -1, velocity: (0,0,0), velocity_confidence: 1, acceleration: (0,0,0), tracking_time: 0, latest_tracked_time: 0, type_probs: 0.000468713, 0, 0, 1.45403e-05, 1.95311e-05, 0.999497 *****First 30 pcs: 37.0942,0.321136; 37.0186,0.197724; 36.9991,0.19762; 37.0071,0.0684897; 36.9396,0.0683648; 36.9471,-0.0541532; 36.8596,-0.0540249; 36.7829,-0.175901; 36.7912,-0.175941; 36.826,-0.304651; 36.7264,-0.303828; 36.7328,-0.425714; 36.6852,-0.425162; 36.6953,-0.546979; 36.6356,-0.54609; 36.6293,-0.667506; 36.5416,-0.665909; 36.5669,-0.787689; 36.5512,-0.78735; 36.496,-0.913618; 36.5243,-0.914325; 37.0215,0.11762; 36.4089,-1.03223; 36.4931,-1.03462; 37.0456,-0.00517963; 36.3693,-1.15185; 36.4255,-1.15363; 36.9295,-0.134047; 37.1128,-0.134712; 36.3331,-1.27765; -- Object 36 : Object [id: 36, track_id: -1, direction: (-0.351604,-0.936149,0), theta: -1.93008, theta_variance: 0, center: (0,0,0), center_uncertainty: (0,0,0,0,0,0,0,0,0), size: (0,0,0), size_variance: (0,0,0), anchor_point: (0,0,0), type: 5, confidence: 0.983952, track_id: -1, velocity: (0,0,0), velocity_confidence: 1, acceleration: (0,0,0), tracking_time: 0, latest_tracked_time: 0, type_probs: 0.000658799, 0, 0, 4.93891e-06, 1.93475e-05, 0.999317 *****First 30 pcs: 24.7072,4.06634; 24.6573,3.97419; 24.7264,3.89682; 25.3654,3.73931; 24.3235,3.58572; 24.268,3.49534; 24.2444,3.40561; 24.5222,4.1529; 26.036,3.56927; 24.2239,3.32085; 24.6108,4.08399; 24.442,3.26821; 24.1674,3.23148; 24.4623,3.97607; 24.3774,3.17733; 24.6918,4.01336; 24.1542,3.14824; 24.4714,3.89428; 24.697,3.93018; 24.1248,3.06307; 24.8551,4.76743; 24.4328,3.80511; 24.6162,3.04256; 24.1309,2.98257; 24.7568,4.66352; 24.3946,3.71199; 24.7074,3.75959; 24.0816,2.89117; 24.7257,4.56836; 24.4265,3.63402; -- Object 48 : Object [id: 48, track_id: -1, direction: (0.0384386,-0.999261,0), theta: -1.53235, theta_variance: 0, center: (0,0,0), center_uncertainty: (0,0,0,0,0,0,0,0,0), size: (0,0,0), size_variance: (0,0,0), anchor_point: (0,0,0), type: 5, confidence: 0.985447, track_id: -1, velocity: (0,0,0), velocity_confidence: 1, acceleration: (0,0,0), tracking_time: 0, latest_tracked_time: 0, type_probs: 0.000298379, 0, 0, 3.35831e-06, 2.82577e-05, 0.99967 *****First 30 pcs: 3.30941,11.1196; 3.34093,11.2255; 3.33935,11.0856; 3.37698,11.2106; 3.32745,11.0461; 3.3505,10.9905; 3.42812,11.2452; 3.35825,11.016; 3.36104,10.8954; 3.4701,11.249; 3.36186,10.8981; 3.36406,10.9052; 3.42688,10.9795; 3.34195,10.7074; 3.38376,10.8414; 3.3956,10.8793; 3.37519,10.8139; 3.32078,10.5168; 3.36232,10.6484; 3.36782,10.6658; 3.39332,10.7466; 3.41726,10.8224; 3.37854,10.6997; 3.36594,10.5317; 3.37761,10.5682; 3.36855,10.5398; 3.42112,10.7043; 3.37571,10.5622; 3.39351,10.4978; 3.36721,10.4164; |

本作品采用知识共享署名 4.0 国际许可协议进行许可。

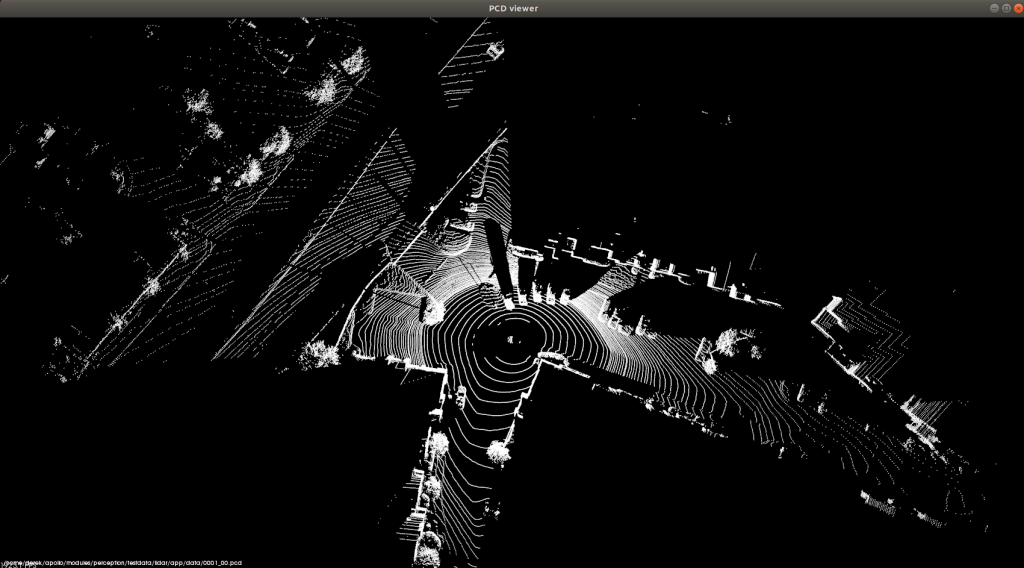

测试用例PCD文件可视化结果

测试用例PCD文件可视化结果 CNN Segment网络模型

CNN Segment网络模型 通过构建节点方法BuildNodes,构建并查集

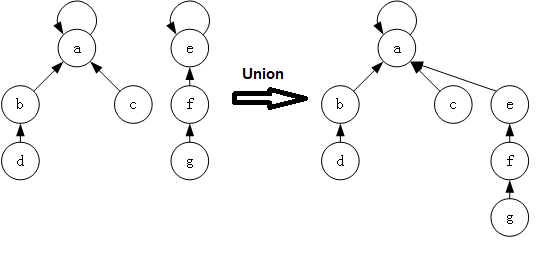

通过构建节点方法BuildNodes,构建并查集 合并根节点UnionNodes,构成一个大的集群

合并根节点UnionNodes,构成一个大的集群

《Apollo自动驾驶的点云CNN分割》有1个想法