Hosting Kubernetes on AWS EKS is fairly involved. The steps below build an EKS cluster that fits most use cases.

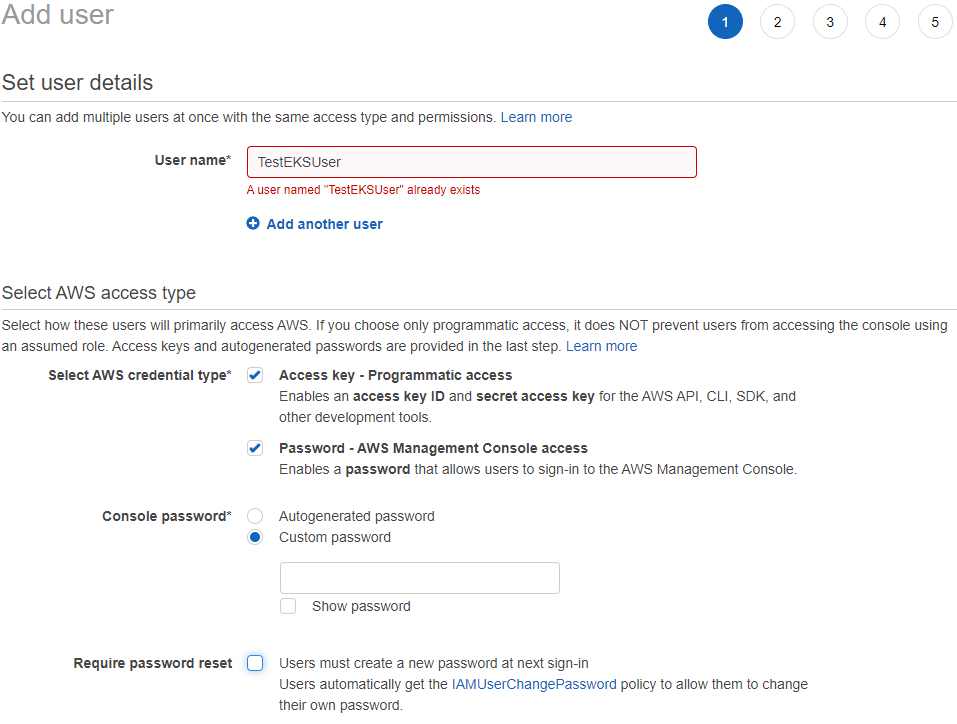

1 Create an IAM user (as the root user)

Create an IAM user in AWS with only the permissions it actually needs (they are granted in step 2.3).

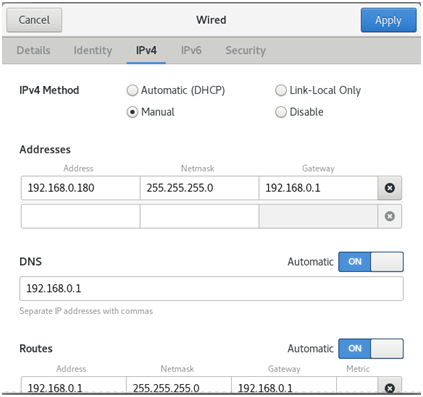

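In the AWS Management Console, click "Add users":

Leave the other pages at their defaults. At the end, save the downloaded CSV file. The access key ID and secret access key it contains are needed to configure the AWS CLI.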

2 Create policies and roles (as the root user)

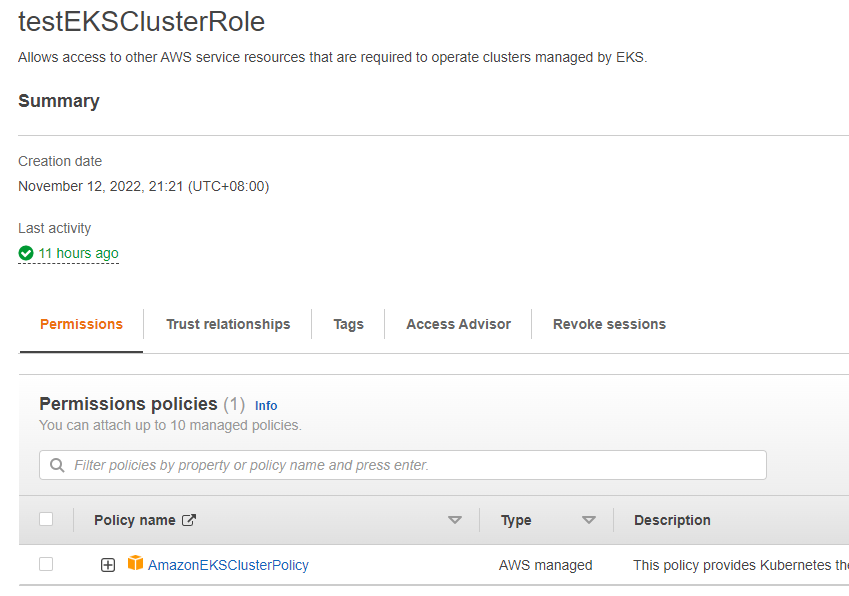

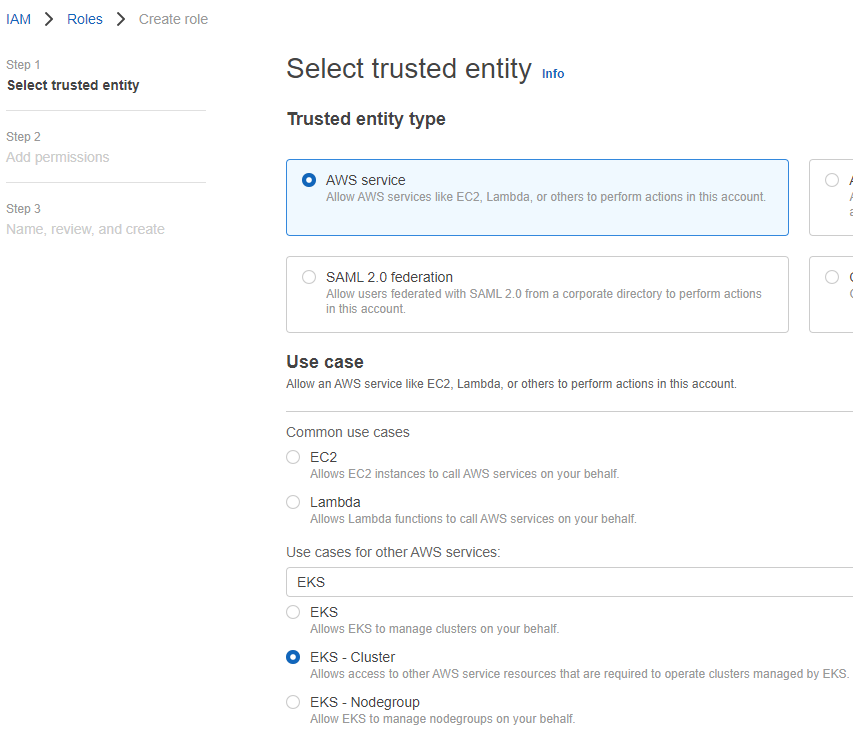

2.1 Create the EKS cluster role

Create a role named "testEKSClusterRole" for the EKS cluster, with one policy attached: AmazonEKSClusterPolicy.
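If you prefer the CLI to the console, the role needs a trust policy that lets the EKS service assume it. The following is a minimal sketch with hypothetical helper names. It builds that trust policy and the managed policy ARN as plain Python data. `eks.amazonaws.com` is the standard service principal for the cluster role, and `arn:aws:iam::aws:policy/` is the standard prefix for AWS managed policies.

```python
import json

# AWS managed policies live under this fixed prefix (no account ID).
AWS_MANAGED_POLICY_PREFIX = "arn:aws:iam::aws:policy/"


def service_trust_policy(service_principal: str) -> dict:
    """Trust policy that lets the given AWS service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def managed_policy_arn(name: str) -> str:
    """Full ARN of an AWS managed policy such as AmazonEKSClusterPolicy."""
    return AWS_MANAGED_POLICY_PREFIX + name


# testEKSClusterRole: trusted by the EKS control plane, one managed policy.
cluster_trust = service_trust_policy("eks.amazonaws.com")
cluster_policy_arn = managed_policy_arn("AmazonEKSClusterPolicy")

if __name__ == "__main__":
    print(json.dumps(cluster_trust, indent=2))
    print(cluster_policy_arn)
```

To use it, save the printed JSON as, for example, cluster-trust.json. Then run `aws iam create-role --role-name testEKSClusterRole --assume-role-policy-document file://cluster-trust.json`, followed by `aws iam attach-role-policy --role-name testEKSClusterRole --policy-arn <the printed ARN>`.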

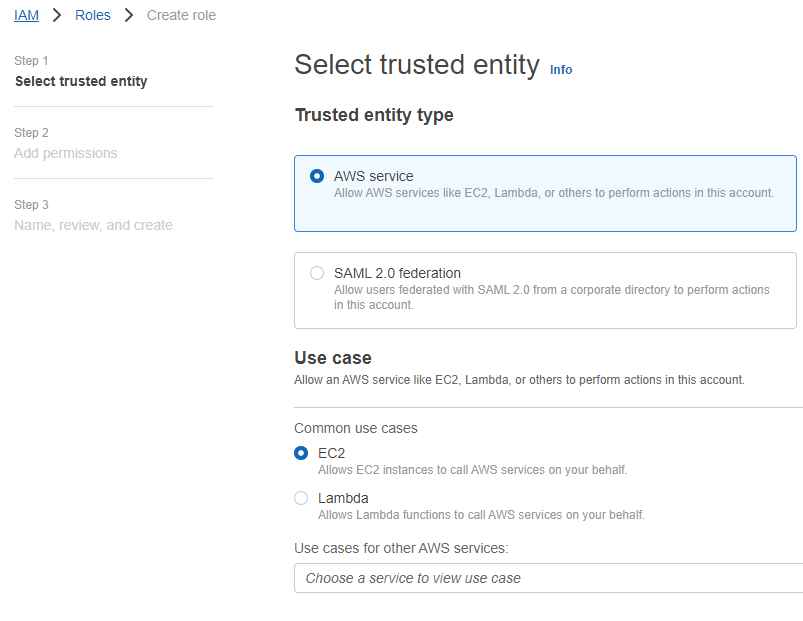

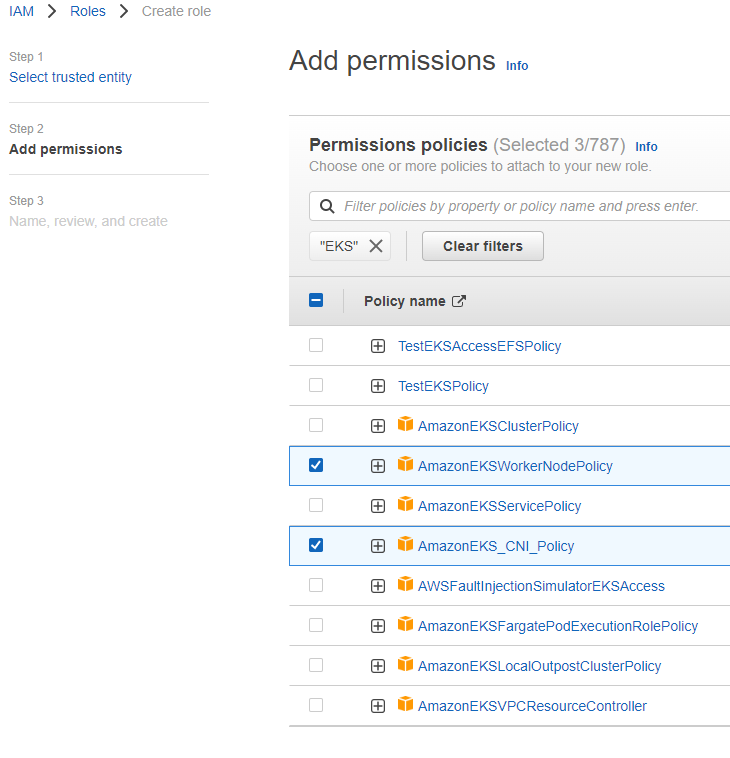

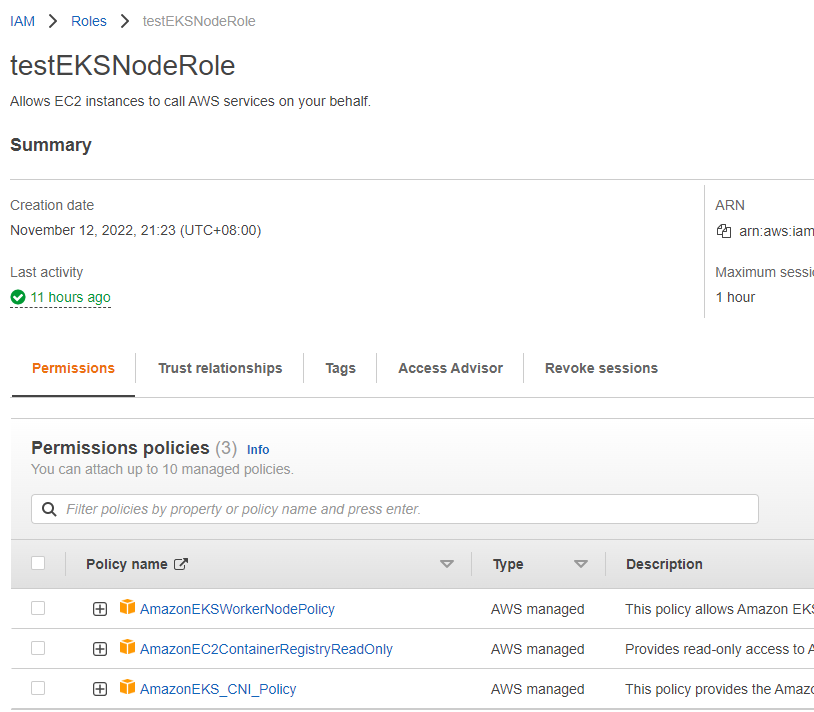

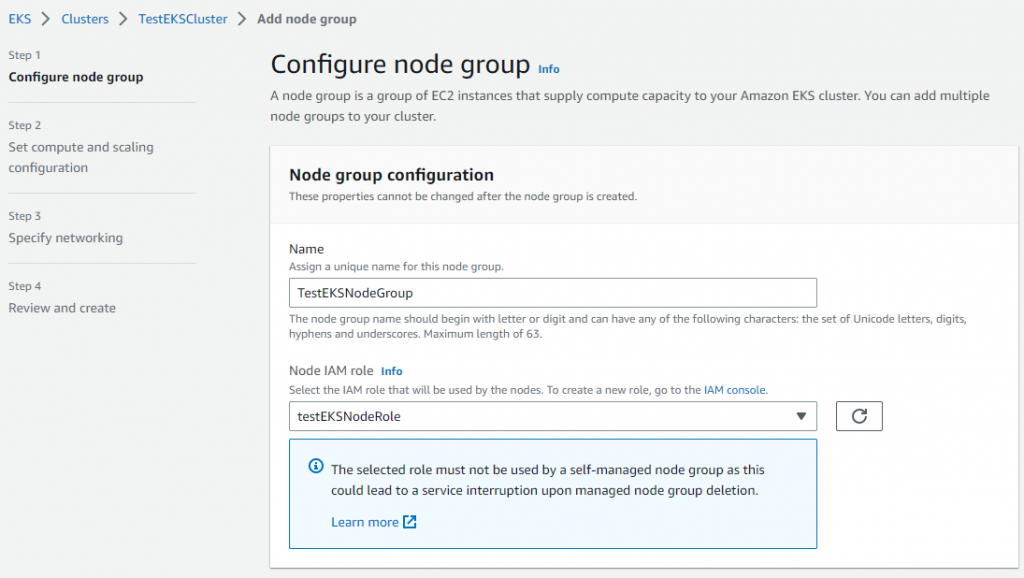

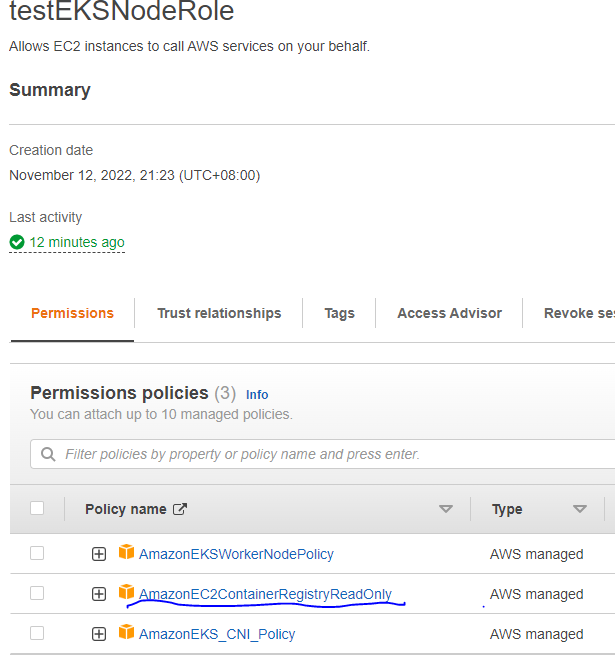

2.2 Create the node group role

Create a role named "testEKSNodeRole" with the following policies:

AmazonEKSWorkerNodePolicy

AmazonEC2ContainerRegistryReadOnly

AmazonEKS_CNI_Policy
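The node role differs from the cluster role mainly in who may assume it. Worker nodes are EC2 instances, so the trust policy names `ec2.amazonaws.com`. The sketch below follows the same pattern with hypothetical names. It lists the full ARNs of the three managed policies above, in the form `aws iam attach-role-policy --policy-arn` expects.

```python
import json

AWS_MANAGED_POLICY_PREFIX = "arn:aws:iam::aws:policy/"

# Managed policies listed above for testEKSNodeRole.
NODE_ROLE_POLICIES = [
    "AmazonEKSWorkerNodePolicy",
    "AmazonEC2ContainerRegistryReadOnly",
    "AmazonEKS_CNI_Policy",
]

# Worker nodes are EC2 instances, so EC2 must be allowed to assume the role.
NODE_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ec2.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def node_role_policy_arns() -> list:
    """Full ARNs of the managed policies to attach to the node role."""
    return [AWS_MANAGED_POLICY_PREFIX + name for name in NODE_ROLE_POLICIES]


if __name__ == "__main__":
    print(json.dumps(NODE_TRUST_POLICY, indent=2))
    for arn in node_role_policy_arns():
        print(arn)
```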

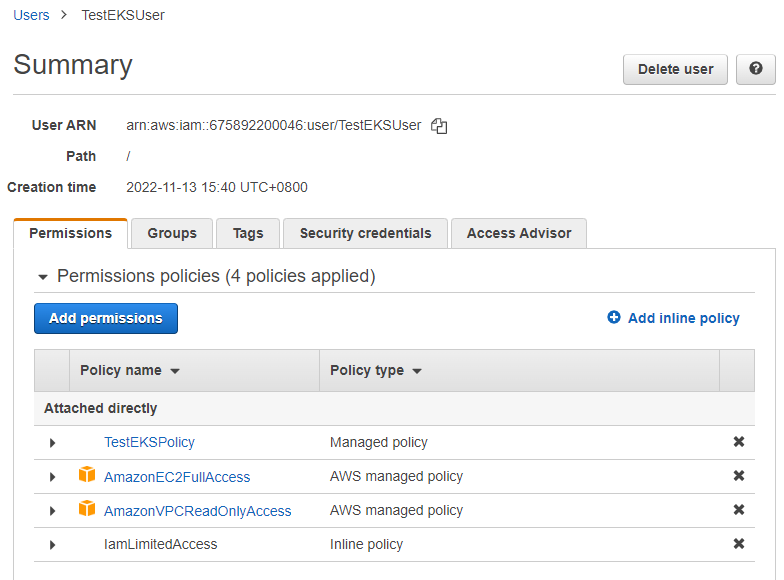

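2.3 Grant permissions to the IAM user

The user needs the following four policies. Alternatively, create a user group, grant the policies to the group, and add the user to it.

Attach the AWS managed policies "AmazonEC2FullAccess" and "AmazonVPCReadOnlyAccess".

Add a custom policy named "TestEKSPolicy" with the following content:

(Replace the account ID 675892200046 with your own.)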

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "eks:*",
            "Resource": "*"
        },
        {
            "Action": [
                "ssm:GetParameter",
                "ssm:GetParameters"
            ],
            "Resource": [
                "arn:aws:ssm:*:675892200046:parameter/aws/*",
                "arn:aws:ssm:*::parameter/aws/*"
            ],
            "Effect": "Allow"
        },
        {
            "Action": [
                "kms:CreateGrant",
                "kms:DescribeKey"
            ],
            "Resource": "*",
            "Effect": "Allow"
        },
        {
            "Action": [
                "logs:PutRetentionPolicy"
            ],
            "Resource": "*",
            "Effect": "Allow"
        }
    ]
}
```
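The policies in this step, and several later ones, hard-code the account ID 675892200046. Rather than editing each ARN by hand, you can render a saved copy of a policy with your own ID. The sketch below does that with hypothetical function names, and it fails loudly on malformed JSON or an ID that is not 12 digits.

```python
import json

# Account ID hard-coded in the policies of this article.
EXAMPLE_ACCOUNT_ID = "675892200046"


def with_account_id(node, account_id: str, old: str = EXAMPLE_ACCOUNT_ID):
    """Return a copy of a policy document in which every string value
    (in practice, the ARNs) has the example account ID replaced."""
    if isinstance(node, dict):
        return {k: with_account_id(v, account_id, old) for k, v in node.items()}
    if isinstance(node, list):
        return [with_account_id(v, account_id, old) for v in node]
    if isinstance(node, str):
        return node.replace(old, account_id)
    return node


def render_policy(policy_text: str, account_id: str) -> str:
    """Parse policy JSON, swap in `account_id`, and re-serialize it."""
    if not (len(account_id) == 12 and account_id.isdigit()):
        raise ValueError("AWS account IDs are 12 digits: %r" % account_id)
    policy = json.loads(policy_text)  # raises ValueError on malformed JSON
    return json.dumps(with_account_id(policy, account_id), indent=2)
```

The rendered text can be saved to a file and passed to `aws iam create-policy --policy-name TestEKSPolicy --policy-document file://<file>`.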

Add a custom policy named "IamLimitedAccess" with the following content (again, replace the account ID):

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "VisualEditor0",
            "Effect": "Allow",
            "Action": "iam:CreateServiceLinkedRole",
            "Resource": "*",
            "Condition": {
                "StringEquals": {
                    "iam:AWSServiceName": [
                        "eks.amazonaws.com",
                        "eks-nodegroup.amazonaws.com",
                        "eks-fargate.amazonaws.com"
                    ]
                }
            }
        },
        {
            "Sid": "VisualEditor1",
            "Effect": "Allow",
            "Action": [
                "iam:CreateInstanceProfile",
                "iam:TagRole",
                "iam:RemoveRoleFromInstanceProfile",
                "iam:DeletePolicy",
                "iam:CreateRole",
                "iam:AttachRolePolicy",
                "iam:PutRolePolicy",
                "iam:AddRoleToInstanceProfile",
                "iam:ListInstanceProfilesForRole",
                "iam:PassRole",
                "iam:DetachRolePolicy",
                "iam:DeleteRolePolicy",
                "iam:ListAttachedRolePolicies",
                "iam:DeleteOpenIDConnectProvider",
                "iam:DeleteInstanceProfile",
                "iam:GetRole",
                "iam:GetInstanceProfile",
                "iam:GetPolicy",
                "iam:DeleteRole",
                "iam:ListInstanceProfiles",
                "iam:CreateOpenIDConnectProvider",
                "iam:CreatePolicy",
                "iam:ListPolicyVersions",
                "iam:GetOpenIDConnectProvider",
                "iam:TagOpenIDConnectProvider",
                "iam:GetRolePolicy"
            ],
            "Resource": [
                "arn:aws:iam::675892200046:role/testEKSNodeRole",
                "arn:aws:iam::675892200046:role/testEKSClusterRole",
                "arn:aws:iam::675892200046:role/aws-service-role/eks-nodegroup.amazonaws.com/AWSServiceRoleForAmazonEKSNodegroup",
                "arn:aws:iam::675892200046:instance-profile/*",
                "arn:aws:iam::675892200046:policy/*",
                "arn:aws:iam::675892200046:oidc-provider/*"
            ]
        },
        {
            "Sid": "VisualEditor2",
            "Effect": "Allow",
            "Action": "iam:GetRole",
            "Resource": "arn:aws:iam::675892200046:role/*"
        },
        {
            "Sid": "VisualEditor3",
            "Effect": "Allow",
            "Action": "iam:ListRoles",
            "Resource": "*"
        }
    ]
}
```

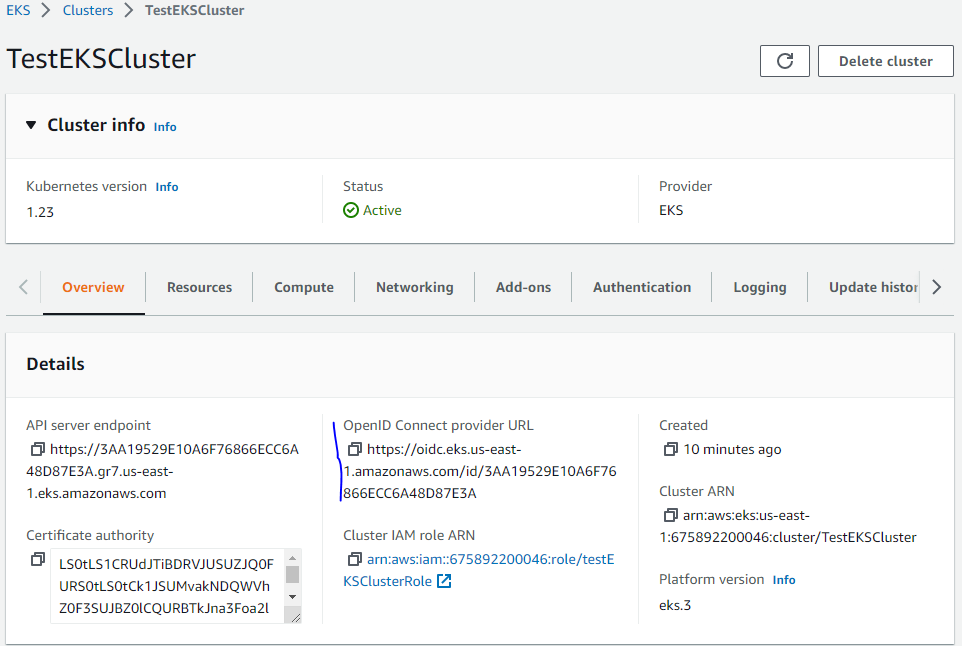

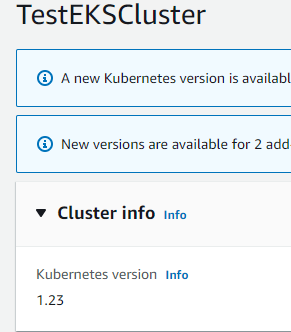

3 Create the EKS cluster (as the IAM user)

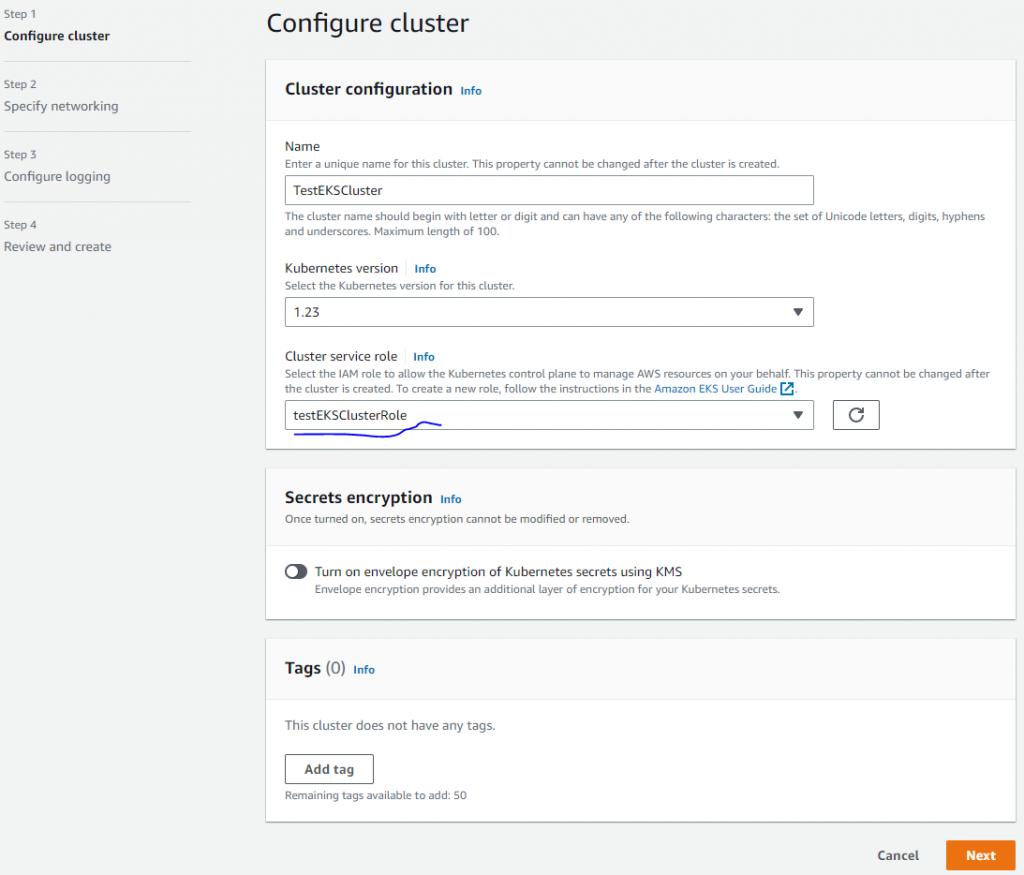

3.1 Create the EKS control plane

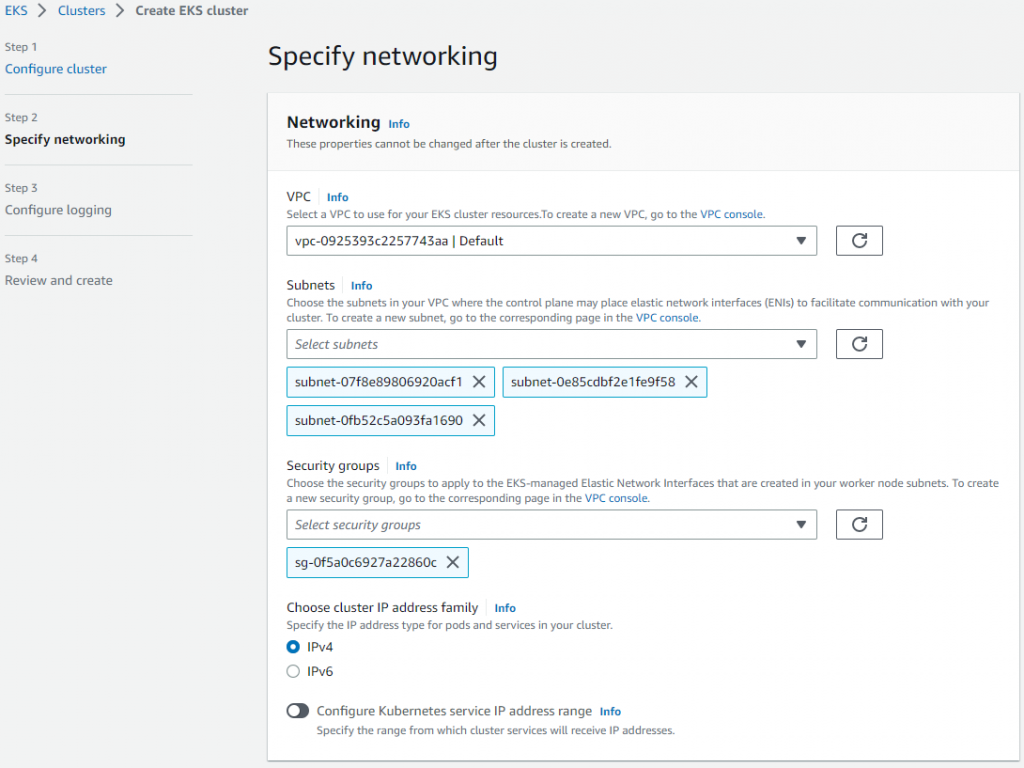

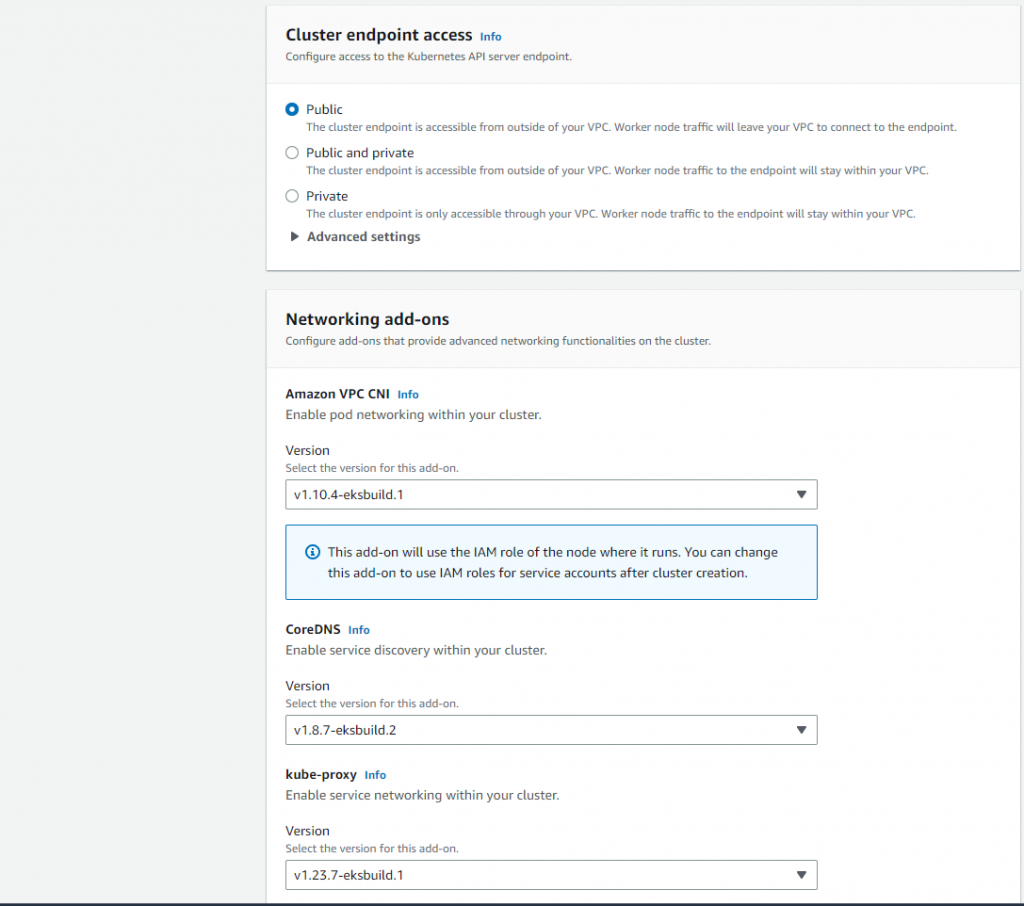

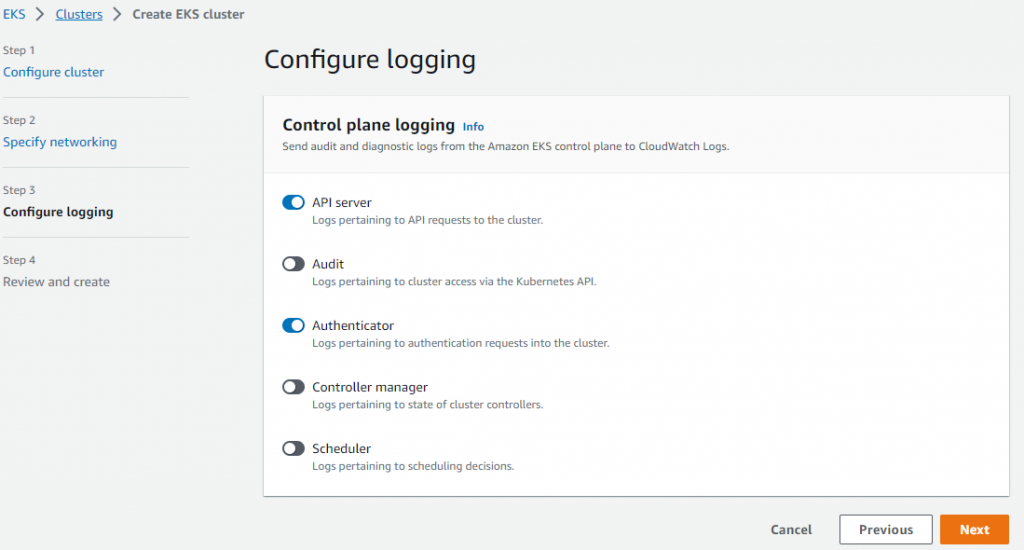

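On the Amazon EKS console page, click "Create Cluster".

If no role appears in the "Cluster service role" drop-down list, go back and check step 2.

Under "Subnets", three subnets are enough.

Under "Cluster endpoint access", "Public" is fine for testing. For production, choose "Private".

Leave "Networking add-ons" at the defaults.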

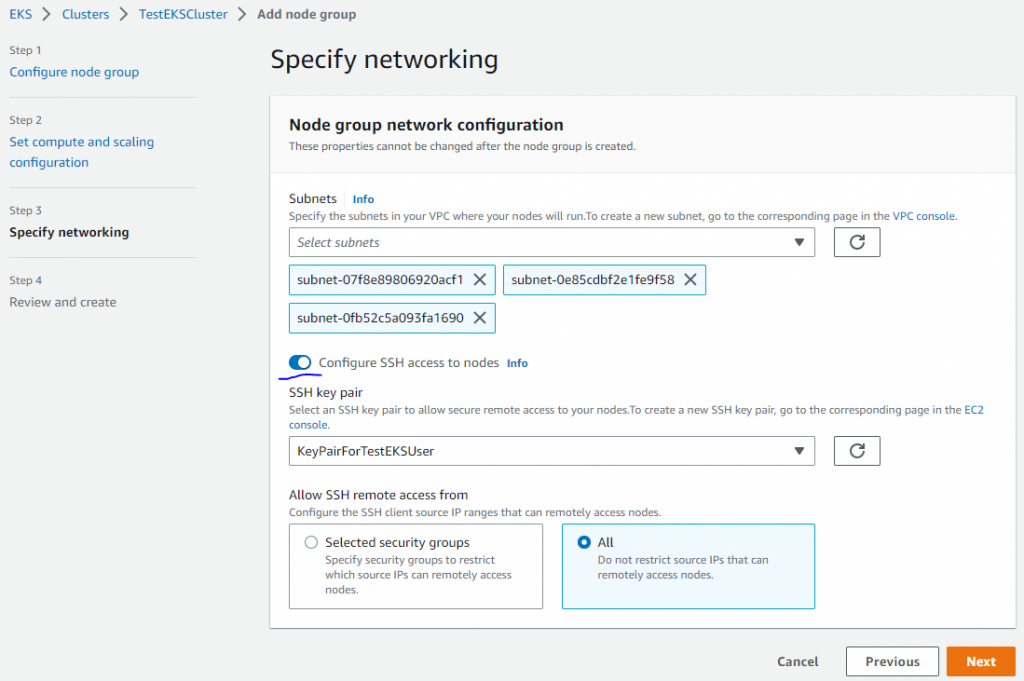

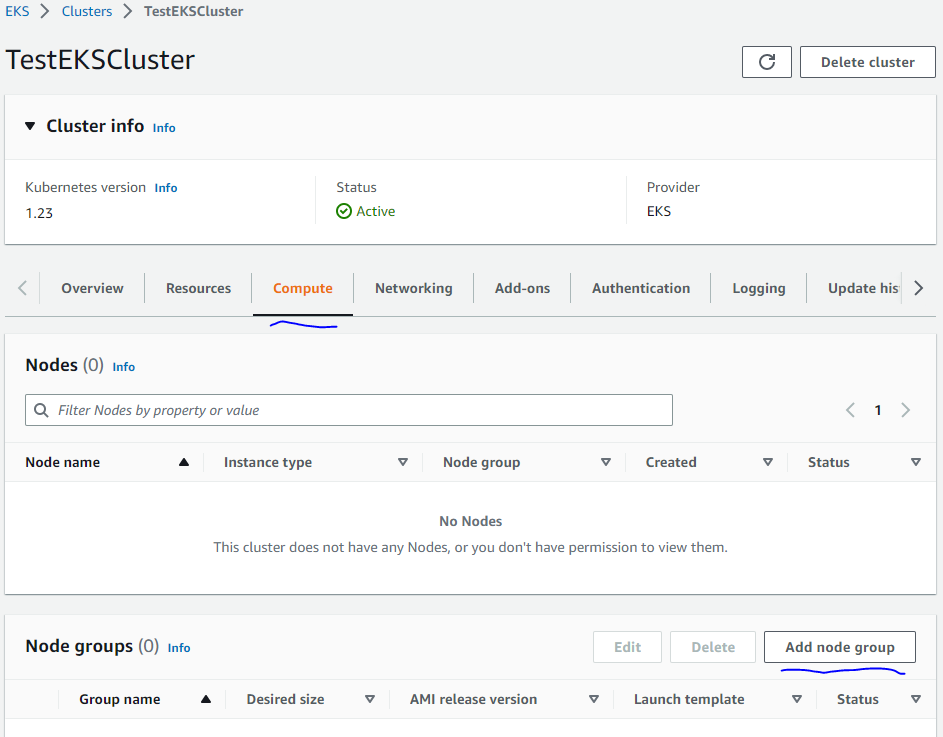

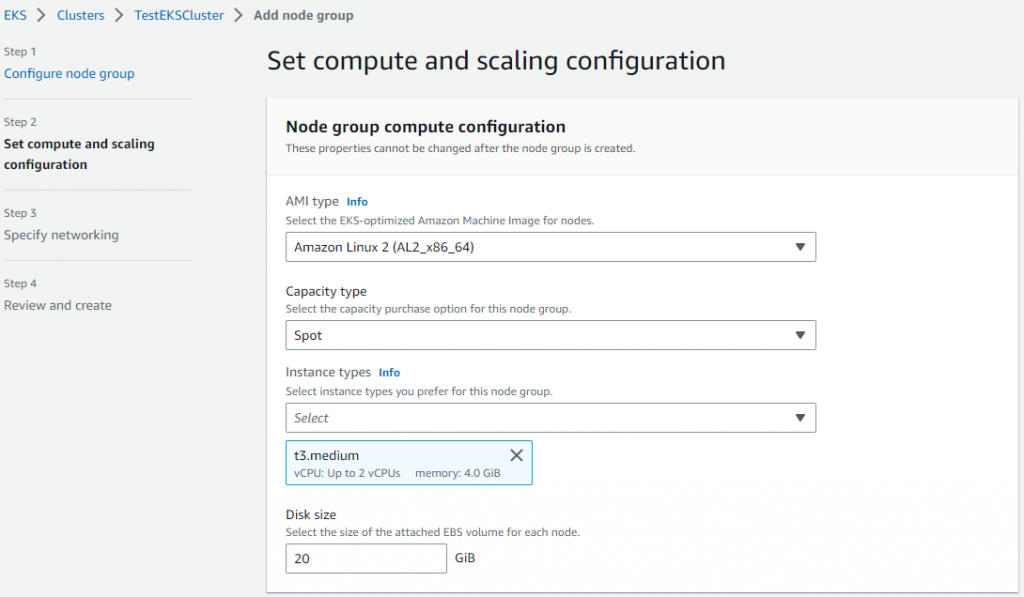

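3.2 Add worker nodes to the cluster

Once the cluster status is "Active", click "Add node group" on the Compute tab to create the worker nodes. Use the testEKSNodeRole role from step 2.2 as the node IAM role.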

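4 Set up the AWS CLI and kubectl (as the IAM user)

4.1 Configure the AWS CLI

Install the AWS CLI. Then run `aws configure` and enter the access key of the IAM user from step 1. `aws sts get-caller-identity` confirms which identity the CLI is using: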

```shell
curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
unzip awscliv2.zip
sudo ./aws/install
aws configure

[awscli@bogon ~]$ aws sts get-caller-identity
{
    "UserId": "AIDAZ2XSQQJXKNKFI4YDF",
    "Account": "675892200046",
    "Arn": "arn:aws:iam::675892200046:user/TestEKSUser"
}
```
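Which identity the CLI uses matters for step 4.2. By default, EKS grants Kubernetes administrator (system:masters) permissions to the IAM identity that created the cluster in step 3. The CLI should therefore run as that same IAM user, not the root user. Below is a small sketch (the function name is hypothetical) that checks the JSON printed by `aws sts get-caller-identity`.

```python
import json


def check_caller_identity(output: str, expected_user: str) -> str:
    """Parse `aws sts get-caller-identity` output and return the account ID.

    Raises ValueError if the CLI is not configured as the expected IAM user
    (for example the root user, whose ARN ends in ':root')."""
    identity = json.loads(output)
    arn = identity["Arn"]
    # IAM user ARNs look like arn:aws:iam::<account>:user/<optional path/><name>
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[2] != "iam" or not parts[5].startswith("user/"):
        raise ValueError("not an IAM user identity: " + arn)
    user_name = parts[5].rsplit("/", 1)[-1]
    if user_name != expected_user:
        raise ValueError(f"configured as {user_name!r}, expected {expected_user!r}")
    return identity["Account"]
```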

4.2 Configure kubectl

```shell
[awscli@bogon ~]$ aws eks --region us-east-1 update-kubeconfig --name TestEKSCluster
Updated context arn:aws:eks:us-east-1:675892200046:cluster/TestEKSCluster in /home/awscli/.kube/config
```

5 Set up EFS storage for EKS

5.1 Create a policy for accessing EFS (as the root user)

Create a custom policy named "TestEKSAccessEFSPolicy":

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "elasticfilesystem:DescribeAccessPoints",
                "elasticfilesystem:DescribeFileSystems"
            ],
            "Resource": "*"
        },
        {
            "Effect": "Allow",
            "Action": [
                "elasticfilesystem:CreateAccessPoint"
            ],
            "Resource": "*",
            "Condition": {
                "StringLike": {
                    "aws:RequestTag/efs.csi.aws.com/cluster": "true"
                }
            }
        },
        {
            "Effect": "Allow",
            "Action": "elasticfilesystem:DeleteAccessPoint",
            "Resource": "*",
            "Condition": {
                "StringEquals": {
                    "aws:ResourceTag/efs.csi.aws.com/cluster": "true"
                }
            }
        }
    ]
}
```

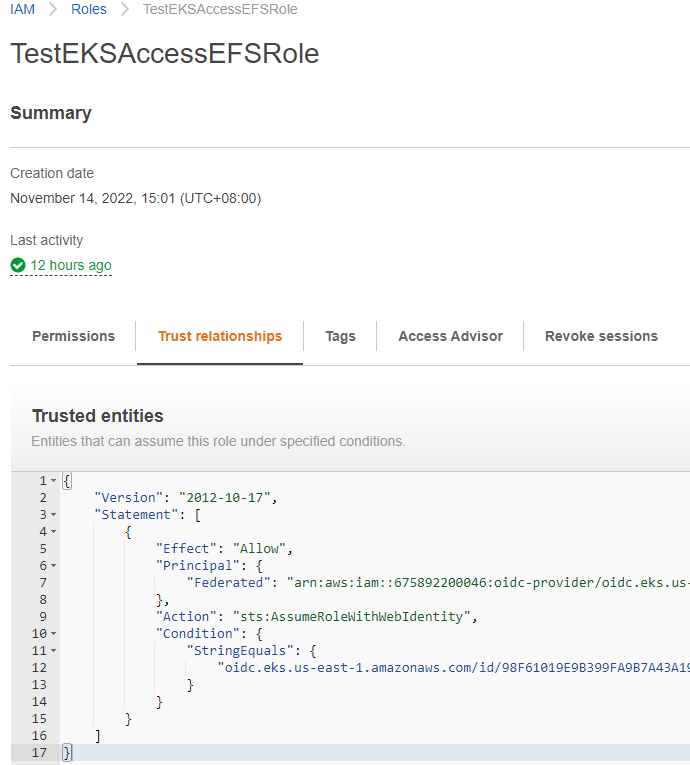

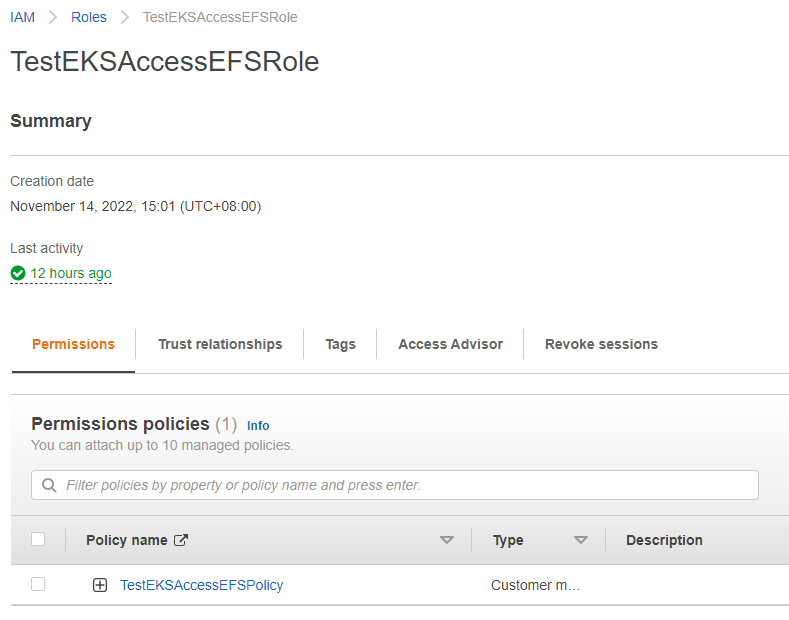

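5.2 Create a role for accessing EFS (as the root user)

Create a role named "TestEKSAccessEFSRole" and attach the policy "TestEKSAccessEFSPolicy".

Under "Trust relationships", edit the trust policy as follows.

Replace "oidc.eks.us-east-1.amazonaws.com/id/98F61019E9B399FA9B7A43A19B56DF14" with your EKS cluster's "OpenID Connect provider URL" (without the leading https://), and change the account ID.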

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {
                "Federated": "arn:aws:iam::675892200046:oidc-provider/oidc.eks.us-east-1.amazonaws.com/id/98F61019E9B399FA9B7A43A19B56DF14"
            },
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {
                "StringEquals": {
                    "oidc.eks.us-east-1.amazonaws.com/id/98F61019E9B399FA9B7A43A19B56DF14:sub": "system:serviceaccount:kube-system:efs-csi-controller-sa"
                }
            }
        }
    ]
}
```
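The trust policy follows a fixed pattern:

- The issuer (the OIDC URL minus `https://`) appears once in the provider ARN and once as the prefix of the `:sub` condition key.
- The value of that key names the Kubernetes service account as `system:serviceaccount:<namespace>:<name>`.

The issuer URL can be printed with `aws eks describe-cluster --name TestEKSCluster --query "cluster.identity.oidc.issuer" --output text`.

The sketch below (hypothetical function name) builds the policy from those inputs. AWS's own examples often also pin `<issuer>:aud` to `sts.amazonaws.com`. This sketch follows the article and checks only `sub`.

```python
import json


def irsa_trust_policy(account_id: str, issuer_url: str,
                      namespace: str, service_account: str) -> dict:
    """Trust policy letting one Kubernetes service account assume the role
    through the cluster's OIDC provider."""
    # IAM refers to the provider by host/path, without the URL scheme.
    prefix = "https://"
    issuer = issuer_url[len(prefix):] if issuer_url.startswith(prefix) else issuer_url
    issuer = issuer.rstrip("/")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{issuer}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        f"{issuer}:sub": f"system:serviceaccount:{namespace}:{service_account}"
                    }
                },
            }
        ],
    }


efs_trust = irsa_trust_policy(
    "675892200046",
    "https://oidc.eks.us-east-1.amazonaws.com/id/98F61019E9B399FA9B7A43A19B56DF14",
    "kube-system",
    "efs-csi-controller-sa",
)
print(json.dumps(efs_trust, indent=2))
```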

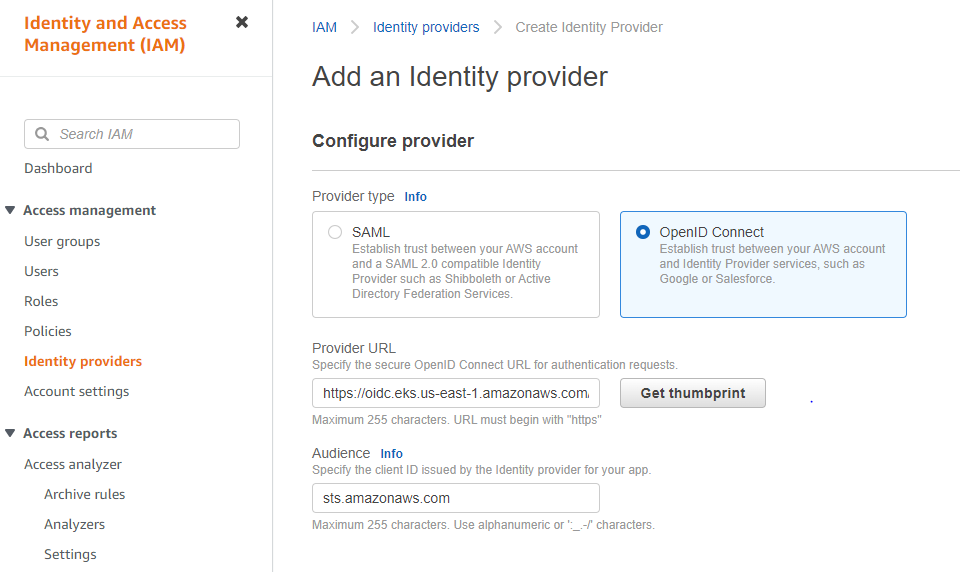

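5.3 Create an identity provider for OpenID Connect (as the root user)

In IAM, add an identity provider of type OpenID Connect. Enter your cluster's OpenID Connect provider URL as the "Provider URL" and "sts.amazonaws.com" as the "Audience". Click "Get thumbprint", then click "Add provider".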
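"Get thumbprint" does this work for you. The IAM documentation describes the thumbprint as the hex-encoded SHA-1 fingerprint of the top intermediate CA certificate served by the issuer's host. The sketch below (hypothetical function name) shows only the hashing step for a certificate you already have in PEM form. Fetching the certificate chain, for example with `openssl s_client -showcerts`, is outside its scope.

```python
import hashlib
import ssl


def oidc_thumbprint(pem_cert: str) -> str:
    """Hex SHA-1 fingerprint (40 hex characters) of a PEM certificate."""
    # PEM_cert_to_DER_cert strips the BEGIN/END lines and base64-decodes.
    der = ssl.PEM_cert_to_DER_cert(pem_cert)
    return hashlib.sha1(der).hexdigest()
```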

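5.4 Create a service account in EKS (as the IAM user)

Create a file named "efs-service-account.yaml" with the following content, changing the account ID. Then run `kubectl apply -f efs-service-account.yaml` to create the account.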

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: efs-csi-controller-sa
  namespace: kube-system
  labels:
    app.kubernetes.io/name: aws-efs-csi-driver
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::675892200046:role/TestEKSAccessEFSRole
```

5.5 Install the EFS CSI driver (as the IAM user)

Run the following command to render the driver's installation manifest into driver.yaml:

```shell
kubectl kustomize "github.com/kubernetes-sigs/aws-efs-csi-driver/deploy/kubernetes/overlays/stable/ecr?ref=release-1.3" > driver.yaml
```

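The service account was already created above, so you can remove the "efs-csi-controller-sa" ServiceAccount section from driver.yaml.

Then run `kubectl apply -f driver.yaml` to install the CSI driver. For reference, the rendered driver.yaml is shown below. In this copy, the efs-csi-controller-sa section already carries the role annotation from step 5.4.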

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: efs-csi-controller-sa
  namespace: kube-system
  labels:
    app.kubernetes.io/name: aws-efs-csi-driver
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::675892200046:role/TestEKSAccessEFSRole
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/name: aws-efs-csi-driver
  name: efs-csi-node-sa
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/name: aws-efs-csi-driver
  name: efs-csi-external-provisioner-role
rules:
- apiGroups:
  - ""
  resources:
  - persistentvolumes
  verbs:
  - get
  - list
  - watch
  - create
  - delete
- apiGroups:
  - ""
  resources:
  - persistentvolumeclaims
  verbs:
  - get
  - list
  - watch
  - update
- apiGroups:
  - storage.k8s.io
  resources:
  - storageclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - list
  - watch
  - create
  - patch
- apiGroups:
  - storage.k8s.io
  resources:
  - csinodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - get
  - watch
  - list
  - delete
  - update
  - create
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/name: aws-efs-csi-driver
  name: efs-csi-provisioner-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: efs-csi-external-provisioner-role
subjects:
- kind: ServiceAccount
  name: efs-csi-controller-sa
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/name: aws-efs-csi-driver
  name: efs-csi-controller
  namespace: kube-system
spec:
  replicas: 2
  selector:
    matchLabels:
      app: efs-csi-controller
      app.kubernetes.io/instance: kustomize
      app.kubernetes.io/name: aws-efs-csi-driver
  template:
    metadata:
      labels:
        app: efs-csi-controller
        app.kubernetes.io/instance: kustomize
        app.kubernetes.io/name: aws-efs-csi-driver
    spec:
      containers:
      - args:
        - --endpoint=$(CSI_ENDPOINT)
        - --logtostderr
        - --v=2
        - --delete-access-point-root-dir=false
        env:
        - name: CSI_ENDPOINT
          value: unix:///var/lib/csi/sockets/pluginproxy/csi.sock
        image: 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/aws-efs-csi-driver:v1.3.8
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /healthz
            port: healthz
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 3
        name: efs-plugin
        ports:
        - containerPort: 9909
          name: healthz
          protocol: TCP
        securityContext:
          privileged: true
        volumeMounts:
        - mountPath: /var/lib/csi/sockets/pluginproxy/
          name: socket-dir
      - args:
        - --csi-address=$(ADDRESS)
        - --v=2
        - --feature-gates=Topology=true
        - --extra-create-metadata
        - --leader-election
        env:
        - name: ADDRESS
          value: /var/lib/csi/sockets/pluginproxy/csi.sock
        image: 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/csi-provisioner:v2.1.1
        imagePullPolicy: IfNotPresent
        name: csi-provisioner
        volumeMounts:
        - mountPath: /var/lib/csi/sockets/pluginproxy/
          name: socket-dir
      - args:
        - --csi-address=/csi/csi.sock
        - --health-port=9909
        image: 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/livenessprobe:v2.2.0
        imagePullPolicy: IfNotPresent
        name: liveness-probe
        volumeMounts:
        - mountPath: /csi
          name: socket-dir
      hostNetwork: true
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: efs-csi-controller-sa
      volumes:
      - emptyDir: {}
        name: socket-dir
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app.kubernetes.io/name: aws-efs-csi-driver
  name: efs-csi-node
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: efs-csi-node
      app.kubernetes.io/instance: kustomize
      app.kubernetes.io/name: aws-efs-csi-driver
  template:
    metadata:
      labels:
        app: efs-csi-node
        app.kubernetes.io/instance: kustomize
        app.kubernetes.io/name: aws-efs-csi-driver
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: eks.amazonaws.com/compute-type
                operator: NotIn
                values:
                - fargate
      containers:
      - args:
        - --endpoint=$(CSI_ENDPOINT)
        - --logtostderr
        - --v=2
        env:
        - name: CSI_ENDPOINT
          value: unix:/csi/csi.sock
        image: 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/aws-efs-csi-driver:v1.3.8
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /healthz
            port: healthz
          initialDelaySeconds: 10
          periodSeconds: 2
          timeoutSeconds: 3
        name: efs-plugin
        ports:
        - containerPort: 9809
          name: healthz
          protocol: TCP
        securityContext:
          privileged: true
        volumeMounts:
        - mountPath: /var/lib/kubelet
          mountPropagation: Bidirectional
          name: kubelet-dir
        - mountPath: /csi
          name: plugin-dir
        - mountPath: /var/run/efs
          name: efs-state-dir
        - mountPath: /var/amazon/efs
          name: efs-utils-config
        - mountPath: /etc/amazon/efs-legacy
          name: efs-utils-config-legacy
      - args:
        - --csi-address=$(ADDRESS)
        - --kubelet-registration-path=$(DRIVER_REG_SOCK_PATH)
        - --v=2
        env:
        - name: ADDRESS
          value: /csi/csi.sock
        - name: DRIVER_REG_SOCK_PATH
          value: /var/lib/kubelet/plugins/efs.csi.aws.com/csi.sock
        - name: KUBE_NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        image: 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/csi-node-driver-registrar:v2.1.0
        imagePullPolicy: IfNotPresent
        name: csi-driver-registrar
        volumeMounts:
        - mountPath: /csi
          name: plugin-dir
        - mountPath: /registration
          name: registration-dir
      - args:
        - --csi-address=/csi/csi.sock
        - --health-port=9809
        - --v=2
        image: 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/livenessprobe:v2.2.0
        imagePullPolicy: IfNotPresent
        name: liveness-probe
        volumeMounts:
        - mountPath: /csi
          name: plugin-dir
      dnsPolicy: ClusterFirst
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/os: linux
      priorityClassName: system-node-critical
      serviceAccountName: efs-csi-node-sa
      tolerations:
      - operator: Exists
      volumes:
      - hostPath:
          path: /var/lib/kubelet
          type: Directory
        name: kubelet-dir
      - hostPath:
          path: /var/lib/kubelet/plugins/efs.csi.aws.com/
          type: DirectoryOrCreate
        name: plugin-dir
      - hostPath:
          path: /var/lib/kubelet/plugins_registry/
          type: Directory
        name: registration-dir
      - hostPath:
          path: /var/run/efs
          type: DirectoryOrCreate
        name: efs-state-dir
      - hostPath:
          path: /var/amazon/efs
          type: DirectoryOrCreate
        name: efs-utils-config
      - hostPath:
          path: /etc/amazon/efs
          type: DirectoryOrCreate
        name: efs-utils-config-legacy
---
apiVersion: storage.k8s.io/v1
kind: CSIDriver
metadata:
  annotations:
    helm.sh/hook: pre-install, pre-upgrade
    helm.sh/hook-delete-policy: before-hook-creation
    helm.sh/resource-policy: keep
  name: efs.csi.aws.com
spec:
  attachRequired: false
```
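Removing the efs-csi-controller-sa section can be scripted instead of done by hand. The sketch below (hypothetical function name) splits the multi-document YAML on `---` lines and drops the ServiceAccount document with the given name. It uses plain text matching on top-level `kind:` and indented `name:` lines rather than a YAML parser, so it assumes the layout that `kubectl kustomize` emits.

```python
import re


def drop_service_account(manifest: str, name: str) -> str:
    """Remove the `kind: ServiceAccount` document named `name` from a
    multi-document YAML string; every other document is kept unchanged."""
    docs = re.split(r"^---[ \t]*$", manifest, flags=re.MULTILINE)
    kept = []
    for doc in docs:
        if not doc.strip():
            continue
        # Top-level kind only; "- kind: ServiceAccount" in subjects won't match.
        is_sa = re.search(r"^kind:[ \t]*ServiceAccount[ \t]*$", doc, re.MULTILINE)
        named = re.search(r"^[ \t]+name:[ \t]*" + re.escape(name) + r"[ \t]*$",
                          doc, re.MULTILINE)
        if is_sa and named:
            continue
        kept.append(doc.strip("\n"))
    return "\n---\n".join(kept) + "\n"
```

For example, read driver.yaml, pass its text with `"efs-csi-controller-sa"`, and write the result back before running `kubectl apply -f driver.yaml`. The ClusterRoleBinding that refers to the account is kept.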

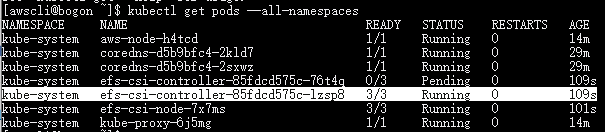

After a short while, the "efs-csi-controller*" pods should be ready (check with `kubectl get pods -n kube-system`).

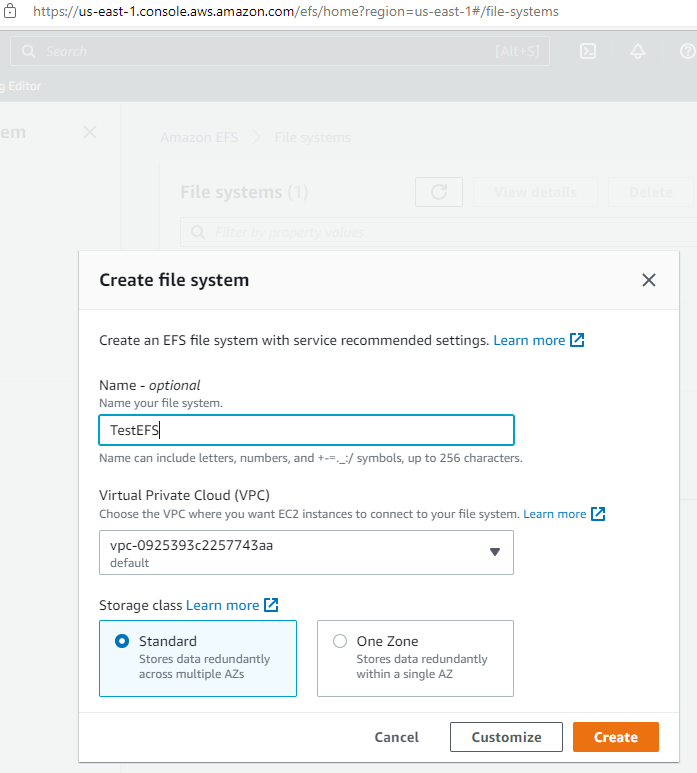

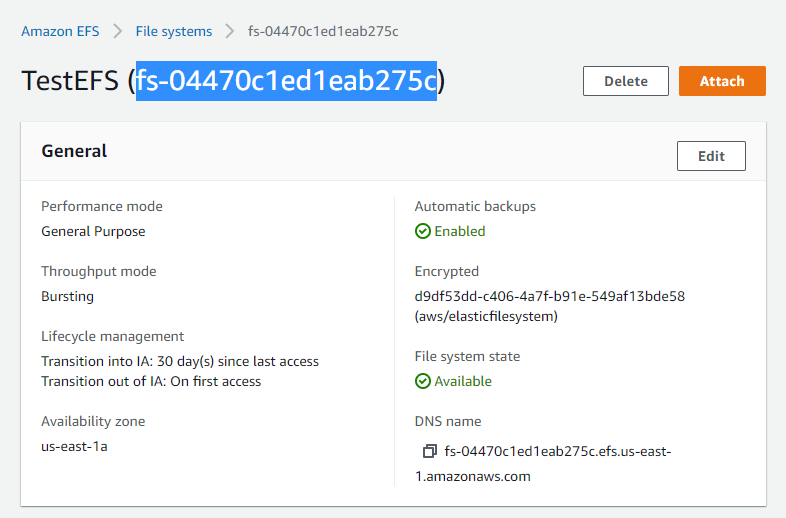

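5.6 Create the EFS file system (as the root user)

In the Amazon EFS console, click "Create file system":

Choose "Standard" as the storage class so that nodes in every Availability Zone can access the file system.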

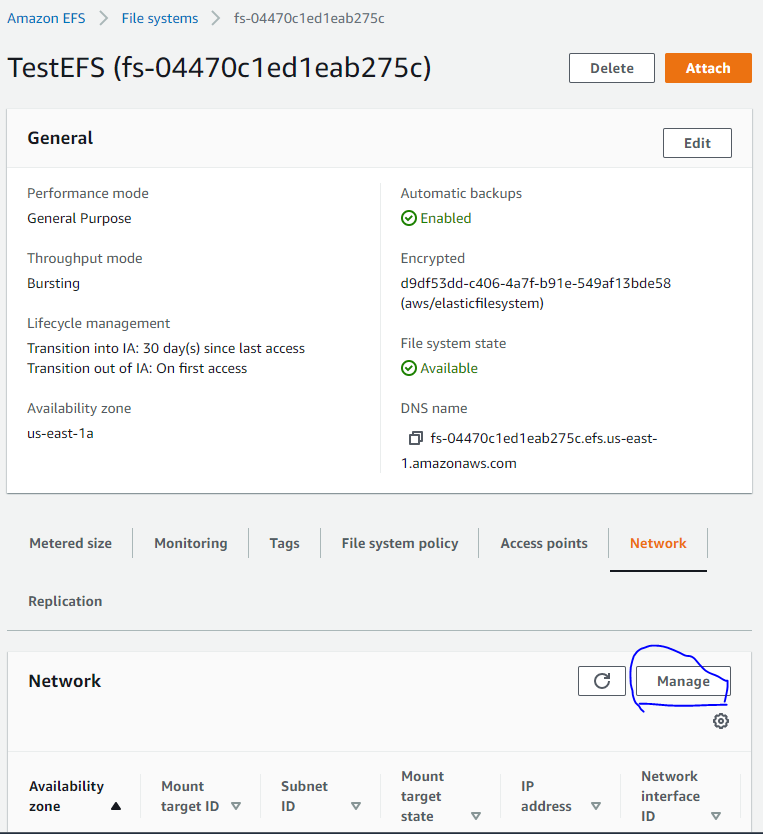

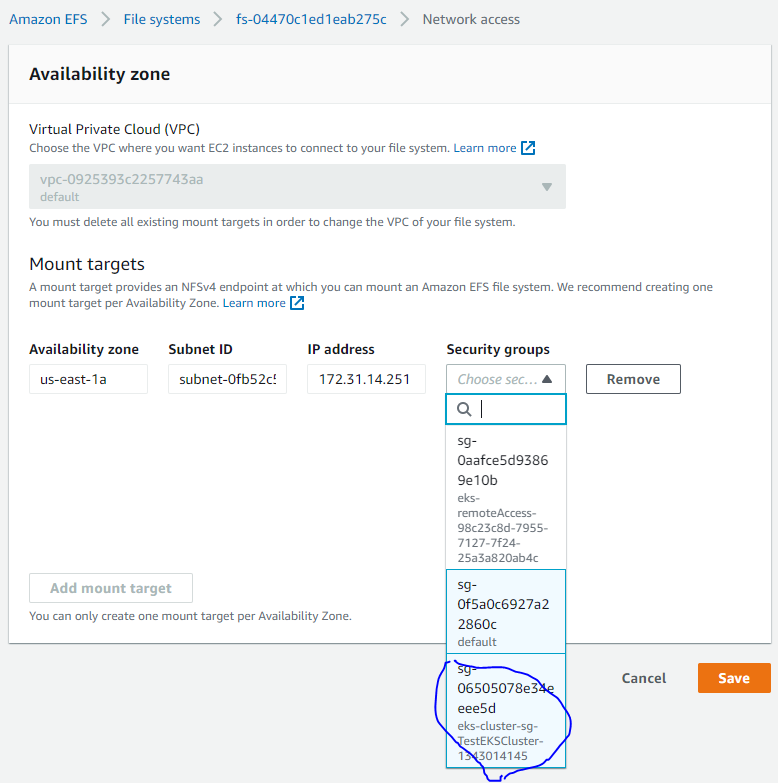

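After the file system is created, wait until its "Network" settings are available. Then click "Manage" and add the cluster security group to the mount targets.

5.7 Create a StorageClass in Kubernetes (as the IAM user)

Create "storageclass.yaml" with the following content, then run `kubectl apply -f storageclass.yaml` to create it.

Be sure to change "fileSystemId" to your own file system ID, which is shown on the file system's page in the EFS console (see the figure).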

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: efs-sc
provisioner: efs.csi.aws.com
parameters:
  provisioningMode: efs-ap
  fileSystemId: fs-04470c1ed1eab275c
  directoryPerms: "700"
  gidRangeStart: "1000" # optional
  gidRangeEnd: "2000" # optional
  basePath: "/dynamic_provisioning" # optional
```
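Before installing Jenkins, you can check the StorageClass with a throwaway PersistentVolumeClaim. Apply it, then confirm that `kubectl get pvc` reports it as Bound.

The sketch below builds such a claim with hypothetical names (`efs_pvc`, `efs-claim`). It prints the claim as JSON, which `kubectl apply -f -` also accepts. Because EFS is elastic, the requested size is required by Kubernetes but not enforced by the EFS CSI driver.

```python
import json


def efs_pvc(name: str, namespace: str = "default",
            storage_class: str = "efs-sc", size: str = "5Gi") -> dict:
    """PersistentVolumeClaim that requests a volume from the efs-sc class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # EFS volumes can be mounted read-write by pods on many nodes.
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            # Required field; the value is not enforced for EFS.
            "resources": {"requests": {"storage": size}},
        },
    }


# e.g. python efs_pvc.py | kubectl apply -f -
print(json.dumps(efs_pvc("efs-claim"), indent=2))
```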

6 Deploy Jenkins as a test (as the IAM user)

6.1 Deploy Jenkins

Note: set the storage class to efs-sc.

```shell
helm repo add jenkinsci https://charts.jenkins.io/
helm install my-jenkins jenkinsci/jenkins --version 4.1.17 --set persistence.storageClass=efs-sc
```

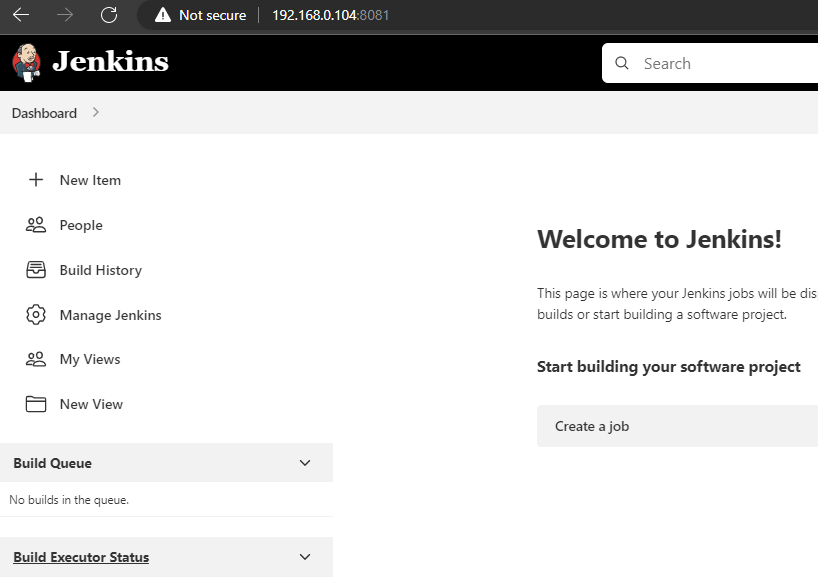

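6.2 Verify the result

Once Jenkins has started, you can reach it temporarily through port forwarding. Jenkins is then served on port 8081 of the machine running kubectl.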

```shell
[awscli@bogon ~]$ kubectl port-forward svc/my-jenkins --address=0.0.0.0 8081:8080
Forwarding from 0.0.0.0:8081 -> 8080
Handling connection for 8081
Handling connection for 8081
...
```

7 Cluster autoscaling

7.1 Create an autoscaling policy for EKS

First, create a policy named "TestEKSClusterAutoScalePolicy":

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "autoscaling:DescribeAutoScalingGroups",
                "autoscaling:DescribeAutoScalingInstances",
                "autoscaling:DescribeLaunchConfigurations",
                "autoscaling:DescribeTags",
                "autoscaling:SetDesiredCapacity",
                "autoscaling:TerminateInstanceInAutoScalingGroup",
                "ec2:DescribeLaunchTemplateVersions",
                "ec2:DescribeInstanceTypes"
            ],
            "Resource": "*"
        }
    ]
}
```

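7.2 Create an autoscaling role for EKS

Next, create a role named "TestEKSClusterAutoScaleRole" that uses the policy "TestEKSClusterAutoScalePolicy" created above. Set its "Trusted entities" as follows.

Note that the OpenID Connect provider ID must be taken from your EKS cluster's details page.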

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {
                "Federated": "arn:aws:iam::675892200046:oidc-provider/oidc.eks.us-east-1.amazonaws.com/id/BEDCA5446D2676BB0A51B7BECFB36773"
            },
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {
                "StringEquals": {
                    "oidc.eks.us-east-1.amazonaws.com/id/BEDCA5446D2676BB0A51B7BECFB36773:sub": "system:serviceaccount:kube-system:cluster-autoscaler"
                }
            }
        }
    ]
}
```
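The OIDC ID here (BEDCA5446D...) differs from the one in step 5.2 (98F61019E9...). Each trust policy has to reference the provider of the cluster you are actually using. It must also name the exact namespace and service account of the pod. If either does not match, the pod cannot assume the role.

The sketch below (hypothetical function name) checks a trust policy against an issuer and a service account. It understands only the `StringEquals` form on `:sub` used in this article, not wildcards. `aws iam get-role --role-name TestEKSClusterAutoScaleRole` should show the current document under `AssumeRolePolicyDocument`.

```python
def trust_allows(trust_policy: dict, issuer_host: str,
                 namespace: str, service_account: str) -> bool:
    """True if some Allow statement trusts `issuer_host`'s OIDC provider
    and pins its `sub` claim to the given Kubernetes service account."""
    expected_sub = f"system:serviceaccount:{namespace}:{service_account}"
    for stmt in trust_policy.get("Statement", []):
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        if "sts:AssumeRoleWithWebIdentity" not in actions:
            continue
        federated = stmt.get("Principal", {}).get("Federated", "")
        if not federated.endswith(":oidc-provider/" + issuer_host):
            continue
        equals = stmt.get("Condition", {}).get("StringEquals", {})
        if equals.get(issuer_host + ":sub") == expected_sub:
            return True
    return False
```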

7.3 Deploy the Cluster Autoscaler

Download the deployment manifest with the following command:

```shell
wget https://raw.githubusercontent.com/kubernetes/autoscaler/master/cluster-autoscaler/cloudprovider/aws/examples/cluster-autoscaler-autodiscover.yaml
```

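Then modify "cluster-autoscaler-autodiscover.yaml":

1 Add the annotation `eks.amazonaws.com/role-arn` to the ServiceAccount "cluster-autoscaler", as shown in the manifest below.

2 In the Deployment "cluster-autoscaler", replace `<YOUR CLUSTER NAME>` in the `--node-group-auto-discovery` argument with your cluster name (TestEKSCluster here).

3 Add two arguments, `--balance-similar-node-groups` and `--skip-nodes-with-system-pods=false`, on the lines right after the argument edited in step 2.

4 Add the annotation `cluster-autoscaler.kubernetes.io/safe-to-evict: "false"` below the line `prometheus.io/port: '8085'`.

5 Find the matching image version at https://github.com/kubernetes/autoscaler/releases. Its version should match your EKS Kubernetes version.

6 Finally, run `kubectl apply -f cluster-autoscaler-autodiscover.yaml` to create it.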
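Step 2 ties the autoscaler to your node groups through Auto Scaling group tags. In the key-only `asg:tag=` form used here, an Auto Scaling group is picked up when it carries both tag keys, as the Cluster Autoscaler's AWS documentation describes. EKS managed node groups add these tags automatically. The sketch below (hypothetical helper names) builds the argument and checks a set of tag keys, for example copied from the Auto Scaling group's Tags tab in the EC2 console.

```python
def autodiscovery_arg(cluster_name: str) -> str:
    """The --node-group-auto-discovery argument for the AWS provider."""
    keys = ["k8s.io/cluster-autoscaler/enabled",
            f"k8s.io/cluster-autoscaler/{cluster_name}"]
    return "--node-group-auto-discovery=asg:tag=" + ",".join(keys)


def asg_is_discoverable(asg_tag_keys: set, cluster_name: str) -> bool:
    """Whether an Auto Scaling group with these tag keys has both keys the
    autoscaler looks for (only key presence is checked here)."""
    required = {"k8s.io/cluster-autoscaler/enabled",
                f"k8s.io/cluster-autoscaler/{cluster_name}"}
    return required <= set(asg_tag_keys)
```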

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cluster-autoscaler
  namespace: kube-system
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::675892200046:role/TestEKSClusterAutoScaleRole
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: cluster-autoscaler
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
rules:
  - apiGroups: [""]
    resources: ["events", "endpoints"]
    verbs: ["create", "patch"]
  - apiGroups: [""]
    resources: ["pods/eviction"]
    verbs: ["create"]
  - apiGroups: [""]
    resources: ["pods/status"]
    verbs: ["update"]
  - apiGroups: [""]
    resources: ["endpoints"]
    resourceNames: ["cluster-autoscaler"]
    verbs: ["get", "update"]
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["watch", "list", "get", "update"]
  - apiGroups: [""]
    resources:
      - "namespaces"
      - "pods"
      - "services"
      - "replicationcontrollers"
      - "persistentvolumeclaims"
      - "persistentvolumes"
    verbs: ["watch", "list", "get"]
  - apiGroups: ["extensions"]
    resources: ["replicasets", "daemonsets"]
    verbs: ["watch", "list", "get"]
  - apiGroups: ["policy"]
    resources: ["poddisruptionbudgets"]
    verbs: ["watch", "list"]
  - apiGroups: ["apps"]
    resources: ["statefulsets", "replicasets", "daemonsets"]
    verbs: ["watch", "list", "get"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses", "csinodes", "csidrivers", "csistoragecapacities"]
    verbs: ["watch", "list", "get"]
  - apiGroups: ["batch", "extensions"]
    resources: ["jobs"]
    verbs: ["get", "list", "watch", "patch"]
  - apiGroups: ["coordination.k8s.io"]
    resources: ["leases"]
    verbs: ["create"]
  - apiGroups: ["coordination.k8s.io"]
    resourceNames: ["cluster-autoscaler"]
    resources: ["leases"]
    verbs: ["get", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: cluster-autoscaler
  namespace: kube-system
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
rules:
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["create","list","watch"]
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["cluster-autoscaler-status", "cluster-autoscaler-priority-expander"]
    verbs: ["delete", "get", "update", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: cluster-autoscaler
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-autoscaler
subjects:
  - kind: ServiceAccount
    name: cluster-autoscaler
    namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: cluster-autoscaler
  namespace: kube-system
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: cluster-autoscaler
subjects:
  - kind: ServiceAccount
    name: cluster-autoscaler
    namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cluster-autoscaler
  namespace: kube-system
  labels:
    app: cluster-autoscaler
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cluster-autoscaler
  template:
    metadata:
      labels:
        app: cluster-autoscaler
      annotations:
        prometheus.io/scrape: 'true'
        prometheus.io/port: '8085'
        cluster-autoscaler.kubernetes.io/safe-to-evict: "false"
    spec:
      priorityClassName: system-cluster-critical
      securityContext:
        runAsNonRoot: true
        runAsUser: 65534
        fsGroup: 65534
      serviceAccountName: cluster-autoscaler
      containers:
        - image: k8s.gcr.io/autoscaling/cluster-autoscaler:v1.23.0
          name: cluster-autoscaler
          resources:
            limits:
              cpu: 100m
              memory: 600Mi
            requests:
              cpu: 100m
              memory: 600Mi
          command:
            - ./cluster-autoscaler
            - --v=4
            - --stderrthreshold=info
            - --cloud-provider=aws
            - --skip-nodes-with-local-storage=false
            - --expander=least-waste
            - --node-group-auto-discovery=asg:tag=k8s.io/cluster-autoscaler/enabled,k8s.io/cluster-autoscaler/TestEKSCluster
            - --balance-similar-node-groups
            - --skip-nodes-with-system-pods=false
          volumeMounts:
            - name: ssl-certs
              mountPath: /etc/ssl/certs/ca-certificates.crt #/etc/ssl/certs/ca-bundle.crt for Amazon Linux Worker Nodes
              readOnly: true
          imagePullPolicy: "Always"
      volumes:
        - name: ssl-certs
          hostPath:
            path: "/etc/ssl/certs/ca-bundle.crt"
```

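7.4 Deploy the Metrics Server

The Metrics Server collects resource metrics for pods and nodes. It is the foundation for the HPA (Horizontal Pod Autoscaler) and for `kubectl top`. Apply the following manifest with `kubectl apply -f`: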

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-insecure-tls
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        image: k8s.gcr.io/metrics-server/metrics-server:v0.5.2
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
```
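The HPA reads these metrics and, per the Kubernetes documentation, computes `desired = ceil(current_replicas * current_value / target_value)`. It skips scaling while that ratio stays within a tolerance of 0.1 by default. CPU utilization is measured against the containers' CPU requests. A utilization-based HPA therefore needs requests set, which the nginx Deployment in 7.5 does not have.

The sketch below (hypothetical function name) shows only this core rule. It leaves out min/max replica clamping, unready pods, missing metrics and the scale-down stabilization window.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_value: float,
                         target_value: float, tolerance: float = 0.1) -> int:
    """Core HPA scaling rule: scale replicas by current/target, rounding up,
    unless the ratio is within `tolerance` of 1.0."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    return math.ceil(current_replicas * ratio)
```

For example, 2 replicas at 200m CPU against a 100m target would become 4.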

7.5 Test cluster scaling

Deploy nginx to test:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80
```

At first the cluster has only one node:

```shell
[awscli@bogon ~]$ kubectl top node
NAME                            CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
ip-172-31-17-148.ec2.internal   52m          2%     635Mi           19%
[awscli@bogon ~]$ kubectl top pods --all-namespaces
NAMESPACE     NAME                                  CPU(cores)   MEMORY(bytes)
default       nginx-deployment-9456bbbf9-qlpcb      0m           2Mi
kube-system   aws-node-m6xjs                        3m           34Mi
kube-system   cluster-autoscaler-5c4d9b6d4c-k2csm   2m           22Mi
kube-system   coredns-d5b9bfc4-4bvnn                1m           12Mi
kube-system   coredns-d5b9bfc4-z2ppq                1m           12Mi
kube-system   kube-proxy-x55c8                      1m           10Mi
kube-system   metrics-server-84cd7b5645-prh6c       3m           16Mi
```

Now scale the test deployment created above to 30 replicas, for example with `kubectl scale deployment nginx-deployment --replicas=30`. The current node does not have enough capacity, so after a while a new node (ip-172-31-91-231.ec2.internal) is launched and joins the cluster:

```shell
[awscli@bogon ~]$ kubectl top node
NAME                            CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
ip-172-31-17-148.ec2.internal   66m          3%     726Mi           21%
ip-172-31-91-231.ec2.internal   774m         40%    569Mi           17%
[awscli@bogon ~]$ kubectl top pods --all-namespaces
NAMESPACE     NAME                                  CPU(cores)   MEMORY(bytes)
default       nginx-deployment-9456bbbf9-2tgpl      0m           2Mi
default       nginx-deployment-9456bbbf9-5jdsm      0m           2Mi
default       nginx-deployment-9456bbbf9-5vt9l      2m           2Mi
default       nginx-deployment-9456bbbf9-8ldm7      0m           2Mi
default       nginx-deployment-9456bbbf9-9m499      0m           2Mi
default       nginx-deployment-9456bbbf9-cpmqs      0m           2Mi
default       nginx-deployment-9456bbbf9-d6p4k      2m           2Mi
default       nginx-deployment-9456bbbf9-f2z87      2m           2Mi
default       nginx-deployment-9456bbbf9-f8w2f      0m           2Mi
default       nginx-deployment-9456bbbf9-fwjg4      0m           2Mi
default       nginx-deployment-9456bbbf9-kfmv8      0m           2Mi
default       nginx-deployment-9456bbbf9-knn2t      0m           2Mi
default       nginx-deployment-9456bbbf9-mq5sv      0m           2Mi
default       nginx-deployment-9456bbbf9-plh7h      0m           2Mi
default       nginx-deployment-9456bbbf9-qlpcb      0m           2Mi
default       nginx-deployment-9456bbbf9-tz22s      0m           2Mi
default       nginx-deployment-9456bbbf9-v6ccx      0m           2Mi
default       nginx-deployment-9456bbbf9-v9rc8      0m           2Mi
default       nginx-deployment-9456bbbf9-vwsfr      0m           2Mi
default       nginx-deployment-9456bbbf9-x2jnb      0m           2Mi
default       nginx-deployment-9456bbbf9-xhllv      0m           2Mi
default       nginx-deployment-9456bbbf9-z7hhr      0m           2Mi
default       nginx-deployment-9456bbbf9-zj7qc      0m           2Mi
default       nginx-deployment-9456bbbf9-zqptw      0m           2Mi
kube-system   aws-node-f4kf4                        2m           35Mi
kube-system   aws-node-m6xjs                        3m           35Mi
kube-system   cluster-autoscaler-5c4d9b6d4c-k2csm   3m           26Mi
kube-system   coredns-d5b9bfc4-4bvnn                1m           12Mi
kube-system   coredns-d5b9bfc4-z2ppq                1m           12Mi
kube-system   kube-proxy-qqrw9                      1m           10Mi
kube-system   kube-proxy-x55c8                      1m           10Mi
kube-system   metrics-server-84cd7b5645-prh6c       4m           16Mi
```
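Why would 30 nginx pods that use almost no CPU need a second node? The Deployment sets no resource requests, so the binding limit on a node is most likely the pod count rather than CPU or memory. With the default Amazon VPC CNI (no prefix delegation), EKS-optimized AMIs derive the kubelet's max-pods from the instance's ENI capacity using the formula below. The Cluster Autoscaler adds a node when pods stay Pending because they cannot be scheduled, not in response to CPU usage.

The article does not state the instance type. t3.medium (3 ENIs, 6 IPv4 addresses each) is only an illustrative assumption, and the function name is hypothetical.

```python
def eni_max_pods(enis: int, ipv4_per_eni: int) -> int:
    """Default VPC CNI pod limit for a node: each ENI reserves its primary
    IP, and +2 accounts for host-network pods such as aws-node and
    kube-proxy."""
    return enis * (ipv4_per_eni - 1) + 2


# Assumed instance type t3.medium: 3 ENIs x 6 IPv4 addresses -> 17 pods.
print(eni_max_pods(3, 6))
```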

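8 Access ECR from EKS

The nodes in an EKS managed node group are managed by AWS, so we cannot modify the Docker configuration files on them. That means we cannot use our own Harbor registry unless it has a valid, trusted certificate. Using AWS ECR avoids this trouble.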

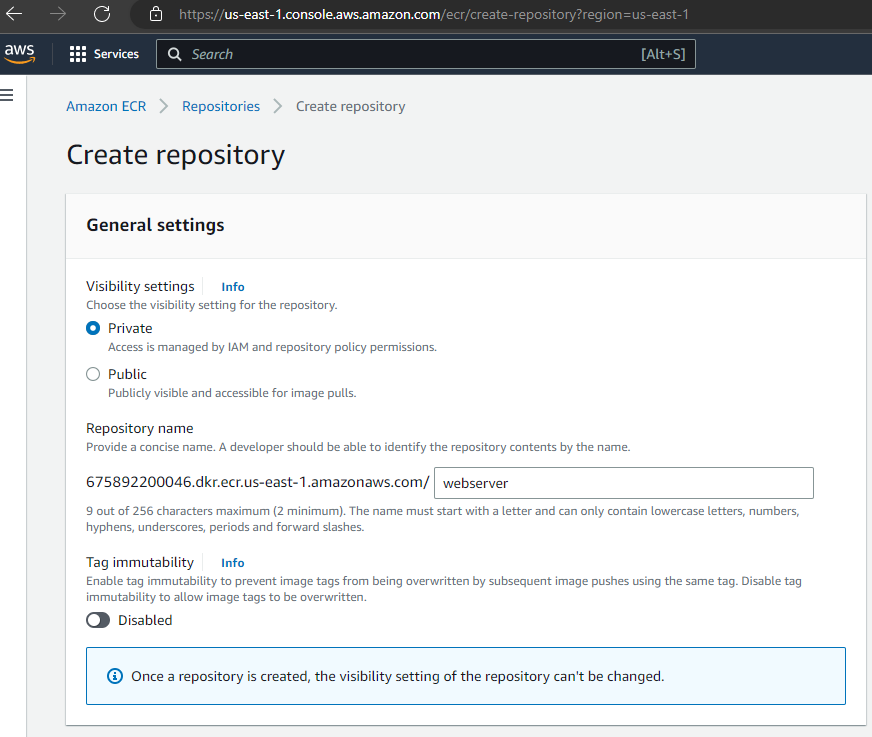

8.1 Create a repository

First create an inline policy named "TestEKSonECRPolicy". It lets the user create Docker repositories, obtain a login token, and push images.

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "VisualEditor0",
            "Effect": "Allow",
            "Action": [
                "ecr:CreateRepository",
                "ecr:GetDownloadUrlForLayer",
                "ecr:DescribeRegistry",
                "ecr:GetAuthorizationToken",
                "ecr:UploadLayerPart",
                "ecr:ListImages",
                "ecr:DeleteRepository",
                "ecr:PutImage",
                "ecr:UntagResource",
                "ecr:BatchGetImage",
                "ecr:CompleteLayerUpload",
                "ecr:DescribeImages",
                "ecr:TagResource",
                "ecr:DescribeRepositories",
                "ecr:InitiateLayerUpload",
                "ecr:BatchCheckLayerAvailability"
            ],
            "Resource": "*"
        }
    ]
}
```

8.2 Pull images in EKS

Make sure your EKS node role has the policy AmazonEC2ContainerRegistryReadOnly (attached in step 2.2).

8.3 Push images from EKS

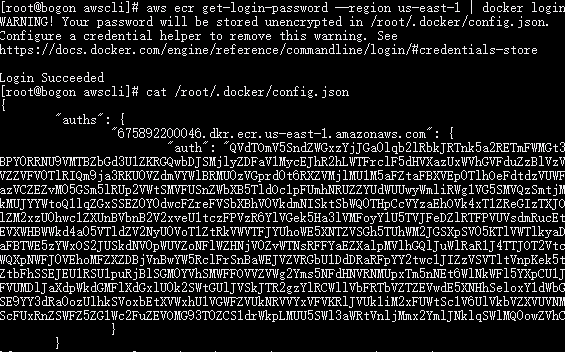

Run the following command to obtain a login token (replace the ECR endpoint with your own):

```shell
aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin 675892200046.dkr.ecr.us-east-1.amazonaws.com
```

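Create a Secret in the jenkins namespace from the Docker configuration written by `docker login` (by default ~/.docker/config.json, copied here as config.json). Jenkins pipelines can then use Docker to push images to ECR.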

```shell
kubectl create secret generic awsecr --from-file=.dockerconfigjson=config.json --type=kubernetes.io/dockerconfigjson -n jenkins
```
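There is one catch with this approach. If Docker on your workstation stores credentials in a credential helper (a `credsStore` entry in config.json), the file written by `docker login` does not contain the token, and a Secret built from it will not authenticate. The sketch below (hypothetical helper names) builds an equivalent config.json directly from the token printed by `aws ecr get-login-password`. The format is an `auths` map whose `auth` field is base64 of `username:password`.

```python
import base64
import json


def ecr_registry(account_id: str, region: str) -> str:
    """Private ECR registry host, as used in the docker login command."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def docker_config_json(registry: str, password: str, username: str = "AWS") -> str:
    """config.json content for a kubernetes.io/dockerconfigjson Secret."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    return json.dumps({"auths": {registry: {"auth": auth}}}, indent=2)
```

Write the result to config.json and run the same `kubectl create secret` command. ECR authorization tokens expire after 12 hours, so the Secret has to be refreshed periodically.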

This work is licensed under a Creative Commons Attribution 4.0 International License.