原创文章,转载请注明: 转载自慢慢的回味

本文链接地址: Apollo自动驾驶Yolo障碍物检测

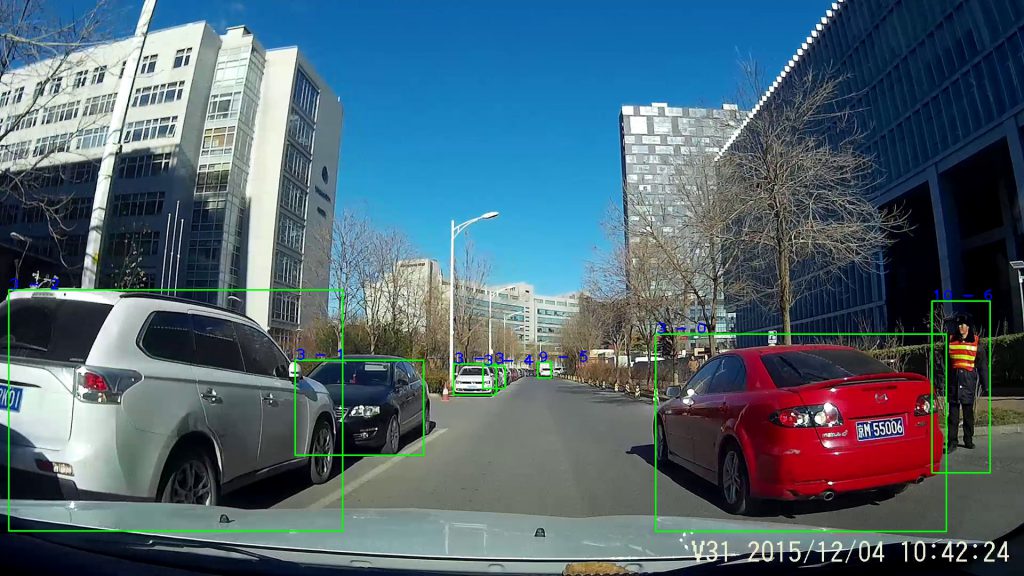

本篇以单元测试camera_lib_obstacle_detector_yolo_yolo_obstacle_detector_test.cc的测试demo_test为例来分析Apollo自动驾驶怎么使用Yolo算法来进行障碍物检测。

单元测试的Yolo算法基于Yolo V3改进,是单尺度检测的。

7个对象的信息

type alpha xmin ymin xmax ymax height width long type_probs visible_ratios[0-3] cut_off_ratios[0-1] 3 0 0 1.198 1229.00 626.00 1774.00 996.00 1.142 1.450 4.151 0 0 0 0 0.500 0 0.000 0.000 0.628 0.372 0.023 0.074 3 0 0 -1.278 553.00 675.00 794.00 853.00 1.608 1.631 4.254 0 0 0 0 0.500 0 0.571 0.000 0.000 0.429 0.039 0.169 3 0 0 -1.150 16.00 543.00 642.00 995.00 1.515 1.686 4.116 0 0 0 0 0.500 0 0.000 0.460 0.540 0.000 0.047 0.138 3 0 0 -1.460 850.00 682.00 930.00 740.00 1.606 1.838 3.782 0 0 0 0 0.500 0 0.883 0.000 0.000 0.117 0.012 0.013 3 0 0 -1.379 905.00 686.00 947.00 728.00 1.112 1.359 3.224 0 0 0 0 0.500 0 0.859 0.000 0.000 0.141 0.019 0.030 9 0 0 0.693 1008.00 679.00 1033.00 708.00 0.570 1.045 1.451 0 0 0 0 0.456 0 0.000 0.000 0.817 0.183 0.059 0.040 10 0 0 0.653 1747.00 564.00 1856.00 886.00 1.749 0.498 0.501 0 0 0 0 0.499 0 0.000 0.000 0.440 0.560 0.122 0.209 |

Yolo算法来进行障碍物检测测试用例

//单元测试通过加载一张测试图片test.jpg,然后用Yolo算法进行推理标记,输出output.jpg。 TEST(YoloCameraDetectorTest, demo_test) { //这里必须使用GPU inference::CudaUtil::set_device_id(0); cv::Mat cv_img = cv::imread( "/apollo/modules/perception/testdata/" "camera/lib/obstacle/detector/yolo/img/test.jpg"); ACHECK(!cv_img.empty()) << "image is empty."; base::Image8U image(cv_img.rows, cv_img.cols, base::Color::BGR); //注意这儿的mutable_cpu_ptr。在Apollo里面很多类型的数据存储都为Blob,它使用SyncedMemory类型进行CPU和GPU对应内存的拷贝。 //SyncedMemory类里保存着cpu_ptr_和gpu_ptr_ 2个指针,分别指向主机内存和显卡内存的地址。 for (int y = 0; y < cv_img.rows; ++y) { memcpy(image.mutable_cpu_ptr(y), cv_img.ptr<uint8_t>(y), image.width_step()); } CameraFrame frame; DataProvider data_provider; frame.data_provider = &data_provider; if (frame.track_feature_blob == nullptr) { frame.track_feature_blob.reset(new base::Blob<float>()); } DataProvider::InitOptions dp_init_options; dp_init_options.sensor_name = "onsemi_obstacle"; dp_init_options.image_height = cv_img.rows; dp_init_options.image_width = cv_img.cols; dp_init_options.device_id = 0; ACHECK(frame.data_provider->Init(dp_init_options)); ACHECK(frame.data_provider->FillImageData(cv_img.rows, cv_img.cols, image.gpu_data(), "bgr8")); ObstacleDetectorInitOptions init_options; init_options.root_dir = "/apollo/modules/perception/testdata/" "camera/lib/obstacle/detector/yolo/data/"; init_options.conf_file = "config.pt"; base::BrownCameraDistortionModel model; common::LoadBrownCameraIntrinsic( "/apollo/modules/perception/testdata/" "camera/lib/obstacle/detector/yolo/params/" "onsemi_obstacle_intrinsics.yaml", &model); init_options.base_camera_model = model.get_camera_model(); BaseObstacleDetector *detector = BaseObstacleDetectorRegisterer::GetInstanceByName("YoloObstacleDetector"); CHECK_EQ(detector->Name(), "YoloObstacleDetector"); //初始化YoloObstacleDetector,详细分析见下节。 EXPECT_TRUE(detector->Init(init_options)); ObstacleDetectorOptions options; //利用网络推测出结果,详细分析见下节。 EXPECT_TRUE(detector->Detect(options, &frame)); EXPECT_FALSE(detector->Detect(options, nullptr)); //迭代侦测到的目标物体,并打印到日志。 // EXPECT_EQ(frame.detected_objects.size(), 8); int obj_id = 0; for (auto obj : frame.detected_objects) { // AINFO << static_cast<int>(obj->type) << "\t" // << static_cast<int>(obj->sub_type); auto &box = obj->camera_supplement.box; cv::rectangle( cv_img, cv::Point(static_cast<int>(box.xmin), static_cast<int>(box.ymin)), cv::Point(static_cast<int>(box.xmax), static_cast<int>(box.ymax)), cv::Scalar(0, 255, 0), 2); std::stringstream text; text << static_cast<int>(obj->sub_type) << " - " << obj_id++; fprintf(stderr, "%4d 0 0 %6.3f %8.2f %8.2f %8.2f %8.2f %6.3f %6.3f %6.3f " "0 0 0 0 %6.3f %4d %6.3f %6.3f %6.3f %6.3f %6.3f %6.3f\n", static_cast<int>(obj->sub_type), obj->camera_supplement.alpha, obj->camera_supplement.box.xmin, obj->camera_supplement.box.ymin, obj->camera_supplement.box.xmax, obj->camera_supplement.box.ymax, obj->size[2], obj->size[1], obj->size[0], obj->type_probs[static_cast<int>(obj->type)], 0, obj->camera_supplement.visible_ratios[0], obj->camera_supplement.visible_ratios[1], obj->camera_supplement.visible_ratios[2], obj->camera_supplement.visible_ratios[3], obj->camera_supplement.cut_off_ratios[0], obj->camera_supplement.cut_off_ratios[1]); cv::putText( cv_img, text.str(), cv::Point(static_cast<int>(box.xmin), static_cast<int>(box.ymin)), cv::FONT_HERSHEY_PLAIN, 2, cv::Scalar(255, 0, 0), 2); } cv::imwrite("output.jpg", cv_img); delete detector; } |

Yolo检测器初始化

bool YoloObstacleDetector::Init(const ObstacleDetectorInitOptions &options) { gpu_id_ = options.gpu_id; BASE_CUDA_CHECK(cudaSetDevice(gpu_id_)); BASE_CUDA_CHECK(cudaStreamCreate(&stream_)); base_camera_model_ = options.base_camera_model; ACHECK(base_camera_model_ != nullptr) << "base_camera_model is nullptr!"; //config_path这儿为/apollo/modules/perception/testdata/camera/lib/obstacle/detector/yolo/data/config.pt /* model_param { model_name: "./ut" proto_file: "caffe.pt" weight_file: "caffe.model" anchors_file: "anchors.txt" types_file: "types.txt" feature_file: "feature.pt" offset_ratio: 0.288889 confidence_threshold: 0.5 min_2d_height: 10 model_type: "RTNet" cropped_ratio: 0.711111 resized_width: 1440 aligned_pixel: 32 ori_cycle: 2 min_3d_height: 0.1 light_vis_conf_threshold: 0 light_swt_conf_threshold: 0 with_box3d: true with_lights: true with_ratios: true border_ratio: 0.01 } net_param { det1_loc_blob: "loc_pred" det1_obj_blob: "obj_pred" det1_cls_blob: "cls_pred" det1_ori_blob: "ori_pred" det1_dim_blob: "dim_pred" input_blob: "data" feat_blob: "conv3_3" } nms_param { type: "NormalNMS" threshold: 0.5 sigma: 0.4 inter_cls_nms_thresh: 0.6 } */ std::string config_path = GetAbsolutePath(options.root_dir, options.conf_file); if (!cyber::common::GetProtoFromFile(config_path, &yolo_param_)) { AERROR << "read proto_config fail"; return false; } const auto &model_param = yolo_param_.model_param(); std::string model_root = GetAbsolutePath(options.root_dir, model_param.model_name()); std::string anchors_file = GetAbsolutePath(model_root, model_param.anchors_file()); std::string types_file = GetAbsolutePath(model_root, model_param.types_file()); std::string expand_file = GetAbsolutePath(model_root, model_param.expand_file()); LoadInputShape(model_param); LoadParam(yolo_param_); min_dims_.min_2d_height /= static_cast<float>(height_); //加载anchor文件。即每个grid需要预测16种bounding box。 /* 16 4.9434993 1.516986 2.1259836 1.6779645 19.452609 17.815241 3.1458852 2.4994355 15.0302664 2.3736405 1.2374577 2.8255595 5.5330938 3.605915 2.4232311 0.8086055 0.3672315 0.6450615 1.3549788 1.2046775 0.9085392 0.726555 0.772209 2.031382 4.0958478 9.108235 0.5070438 1.26041 10.0207692 6.877788 1.9708173 4.677844 */ if (!LoadAnchors(anchors_file, &anchors_)) { return false; } //预测分类为8种 /* CAR VAN BUS TRUCK CYCLIST TRICYCLIST PEDESTRIAN TRAFFICCONE */ if (!LoadTypes(types_file, &types_)) { return false; } if (!LoadExpand(expand_file, &expands_)) { return false; } ACHECK(expands_.size() == types_.size()); if (!InitNet(yolo_param_, model_root)) { return false; } //绑定Yolo的输入输出变量地址到Yolo网络上。 InitYoloBlob(yolo_param_.net_param()); if (!InitFeatureExtractor(model_root)) { return false; } return true; } //初始化深度网络 bool YoloObstacleDetector::InitNet(const yolo::YoloParam &yolo_param, const std::string &model_root) { const auto &model_param = yolo_param.model_param(); std::string proto_file = GetAbsolutePath(model_root, model_param.proto_file()); std::string weight_file = GetAbsolutePath(model_root, model_param.weight_file()); std::vector<std::string> input_names; std::vector<std::string> output_names; // init Net auto const &net_param = yolo_param.net_param(); input_names.push_back(net_param.input_blob()); output_names.push_back(net_param.det1_loc_blob()); output_names.push_back(net_param.det1_obj_blob()); output_names.push_back(net_param.det1_cls_blob()); output_names.push_back(net_param.det1_ori_conf_blob()); output_names.push_back(net_param.det1_ori_blob()); output_names.push_back(net_param.det1_dim_blob()); output_names.push_back(net_param.det2_loc_blob()); output_names.push_back(net_param.det2_obj_blob()); output_names.push_back(net_param.det2_cls_blob()); output_names.push_back(net_param.det2_ori_conf_blob()); output_names.push_back(net_param.det2_ori_blob()); output_names.push_back(net_param.det2_dim_blob()); output_names.push_back(net_param.det3_loc_blob()); output_names.push_back(net_param.det3_obj_blob()); output_names.push_back(net_param.det3_cls_blob()); output_names.push_back(net_param.det3_ori_conf_blob()); output_names.push_back(net_param.det3_ori_blob()); output_names.push_back(net_param.det3_dim_blob()); output_names.push_back(net_param.lof_blob()); output_names.push_back(net_param.lor_blob()); output_names.push_back(net_param.brvis_blob()); output_names.push_back(net_param.brswt_blob()); output_names.push_back(net_param.ltvis_blob()); output_names.push_back(net_param.ltswt_blob()); output_names.push_back(net_param.rtvis_blob()); output_names.push_back(net_param.rtswt_blob()); output_names.push_back(net_param.feat_blob()); output_names.push_back(net_param.area_id_blob()); output_names.push_back(net_param.visible_ratio_blob()); output_names.push_back(net_param.cut_off_ratio_blob()); // init Net const auto &model_type = model_param.model_type(); AINFO << "model_type=" << model_type; //这儿为RTNet,即调用Nvida的Tensorrt进行推理 inference_.reset(inference::CreateInferenceByName(model_type, proto_file, weight_file, output_names, input_names, model_root)); if (nullptr == inference_.get()) { AERROR << "Failed to init CNNAdapter"; return false; } inference_->set_gpu_id(gpu_id_); std::vector<int> shape = {1, height_, width_, 3}; std::map<std::string, std::vector<int>> shape_map{ {net_param.input_blob(), shape}}; //构建网络 if (!inference_->Init(shape_map)) { return false; } inference_->Infer(); return true; } //绑定Yolo的输入输出变量地址到Yolo网络上。 void YoloObstacleDetector::InitYoloBlob(const yolo::NetworkParam &net_param) { //尺度1检测结果是否含有对象,形状为[1,36,90,16] //图像经历了4次池化,所以原图为576x1440分辨率。 auto obj_blob_scale1 = inference_->get_blob(net_param.det1_obj_blob()); //尺度2当前无 auto obj_blob_scale2 = inference_->get_blob(net_param.det2_obj_blob()); //尺度3当前无 auto obj_blob_scale3 = inference_->get_blob(net_param.det3_obj_blob()); int output_height_scale1 = obj_blob_scale1->shape(1); int output_width_scale1 = obj_blob_scale1->shape(2); //obj_size=51840=36*90*16 int obj_size = output_height_scale1 * output_width_scale1 * static_cast<int>(anchors_.size()) / anchorSizeFactor; if (obj_blob_scale2) { int output_height_scale2 = obj_blob_scale2->shape(1); int output_width_scale2 = obj_blob_scale2->shape(2); int output_height_scale3 = obj_blob_scale3->shape(1); int output_width_scale3 = obj_blob_scale3->shape(2); obj_size = (output_height_scale1 * output_width_scale1 + output_height_scale2 * output_width_scale2 + output_height_scale3 * output_width_scale3) * static_cast<int>(anchors_.size()) / anchorSizeFactor / numScales; } //存储最终结果 bounding box 数据,形状为[1,1,51840,32],即每个grid每种bounding box下的结果数据,每个共32个数据。 yolo_blobs_.res_box_blob.reset( new base::Blob<float>(1, 1, obj_size, kBoxBlockSize)); //存储最终结果 分类 数据,形状为[1,1,8+1, 51840],即每个grid每种bounding box下的属于某个分类的结果。 yolo_blobs_.res_cls_blob.reset(new base::Blob<float>( 1, 1, static_cast<int>(types_.size() + 1), obj_size)); //分配主机内存给yolo_blobs_.res_cls_blob yolo_blobs_.res_cls_blob->cpu_data(); overlapped_.reset( new base::Blob<bool>(std::vector<int>{obj_k_, obj_k_}, true)); overlapped_->cpu_data(); overlapped_->gpu_data(); idx_sm_.reset(new base::Blob<int>(std::vector<int>{obj_k_}, true)); //形状为[1,1,16,2] yolo_blobs_.anchor_blob.reset( new base::Blob<float>(1, 1, static_cast<int>(anchors_.size() / 2), 2)); yolo_blobs_.expand_blob.reset( new base::Blob<float>(1, 1, 1, static_cast<int>(expands_.size()))); auto expand_cpu_data = yolo_blobs_.expand_blob->mutable_cpu_data(); memcpy(expand_cpu_data, expands_.data(), expands_.size() * sizeof(float)); //申请主机内存,复制数据,并拷贝数据到显卡内存 auto anchor_cpu_data = yolo_blobs_.anchor_blob->mutable_cpu_data(); memcpy(anchor_cpu_data, anchors_.data(), anchors_.size() * sizeof(float)); yolo_blobs_.anchor_blob->gpu_data(); image_.reset(new base::Image8U(height_, width_, base::Color::RGB)); //第1个侦测到对象的bounding box,形状为[1,36,90,64],每个grid有16个bounding box,每个bounding box有x,y,w,h四个值。 yolo_blobs_.det1_loc_blob = inference_->get_blob(yolo_param_.net_param().det1_loc_blob()); //第1个侦测的是否有物体,形状为[1,36,90,16] yolo_blobs_.det1_obj_blob = inference_->get_blob(yolo_param_.net_param().det1_obj_blob()); //第1个侦测的分类,形状为[1,36,180,64],应该为[1,36,90,128] yolo_blobs_.det1_cls_blob = inference_->get_blob(yolo_param_.net_param().det1_cls_blob()); yolo_blobs_.det1_ori_conf_blob = inference_->get_blob(yolo_param_.net_param().det1_ori_conf_blob()); //形状为[1,36,90,32] yolo_blobs_.det1_ori_blob = inference_->get_blob(yolo_param_.net_param().det1_ori_blob()); //形状为[1,36,90,48] yolo_blobs_.det1_dim_blob = inference_->get_blob(yolo_param_.net_param().det1_dim_blob()); yolo_blobs_.det2_loc_blob = inference_->get_blob(yolo_param_.net_param().det2_loc_blob()); yolo_blobs_.det2_obj_blob = inference_->get_blob(yolo_param_.net_param().det2_obj_blob()); yolo_blobs_.det2_cls_blob = inference_->get_blob(yolo_param_.net_param().det2_cls_blob()); yolo_blobs_.det2_ori_conf_blob = inference_->get_blob(yolo_param_.net_param().det2_ori_conf_blob()); yolo_blobs_.det2_ori_blob = inference_->get_blob(yolo_param_.net_param().det2_ori_blob()); yolo_blobs_.det2_dim_blob = inference_->get_blob(yolo_param_.net_param().det2_dim_blob()); yolo_blobs_.det3_loc_blob = inference_->get_blob(yolo_param_.net_param().det3_loc_blob()); yolo_blobs_.det3_obj_blob = inference_->get_blob(yolo_param_.net_param().det3_obj_blob()); yolo_blobs_.det3_cls_blob = inference_->get_blob(yolo_param_.net_param().det3_cls_blob()); yolo_blobs_.det3_ori_conf_blob = inference_->get_blob(yolo_param_.net_param().det3_ori_conf_blob()); yolo_blobs_.det3_ori_blob = inference_->get_blob(yolo_param_.net_param().det3_ori_blob()); yolo_blobs_.det3_dim_blob = inference_->get_blob(yolo_param_.net_param().det3_dim_blob()); //Light of front yolo_blobs_.lof_blob = inference_->get_blob(yolo_param_.net_param().lof_blob()); //Light of rear yolo_blobs_.lor_blob = inference_->get_blob(yolo_param_.net_param().lor_blob()); //Brake visible,形状为[1,36,90,16] yolo_blobs_.brvis_blob = inference_->get_blob(yolo_param_.net_param().brvis_blob()); //Brake switch on,形状为[1,36,90,16] yolo_blobs_.brswt_blob = inference_->get_blob(yolo_param_.net_param().brswt_blob()); //Left visible,形状为[1,36,90,16] yolo_blobs_.ltvis_blob = inference_->get_blob(yolo_param_.net_param().ltvis_blob()); //Right switch on,形状为[1,36,90,16] yolo_blobs_.ltswt_blob = inference_->get_blob(yolo_param_.net_param().ltswt_blob()); //Right visible,形状为[1,36,90,16] yolo_blobs_.rtvis_blob = inference_->get_blob(yolo_param_.net_param().rtvis_blob()); //Right switch on,形状为[1,36,90,16] yolo_blobs_.rtswt_blob = inference_->get_blob(yolo_param_.net_param().rtswt_blob()); yolo_blobs_.area_id_blob = inference_->get_blob(yolo_param_.net_param().area_id_blob()); //障碍物可见比例,形状为[1,36,90,64] yolo_blobs_.visible_ratio_blob = inference_->get_blob(yolo_param_.net_param().visible_ratio_blob()); //障碍物剪裁掉比例 yolo_blobs_.cut_off_ratio_blob = inference_->get_blob(yolo_param_.net_param().cut_off_ratio_blob()); } |

Yolo检测器推理

调用网络进行推理

bool YoloObstacleDetector::Detect(const ObstacleDetectorOptions &options, CameraFrame *frame) { if (frame == nullptr) { return false; } Timer timer; if (cudaSetDevice(gpu_id_) != cudaSuccess) { AERROR << "Failed to set device to " << gpu_id_; return false; } //获取输入input_blob ,把图像image_传入 auto input_blob = inference_->get_blob(yolo_param_.net_param().input_blob()); AINFO << "Start: " << static_cast<double>(timer.Toc()) * 0.001 << "ms"; DataProvider::ImageOptions image_options; image_options.target_color = base::Color::BGR; image_options.crop_roi = base::RectI( 0, offset_y_, static_cast<int>(base_camera_model_->get_width()), static_cast<int>(base_camera_model_->get_height()) - offset_y_); image_options.do_crop = true; frame->data_provider->GetImage(image_options, image_.get()); AINFO << "GetImageBlob: " << static_cast<double>(timer.Toc()) * 0.001 << "ms"; inference::ResizeGPU(*image_, input_blob, frame->data_provider->src_width(), 0); AINFO << "Resize: " << static_cast<double>(timer.Toc()) * 0.001 << "ms"; //调用预测器预测,推测原理过程见B站,搜索“Yolo” /////////////////////////// detection part /////////////////////////// inference_->Infer(); AINFO << "Network Forward: " << static_cast<double>(timer.Toc()) * 0.001 << "ms"; //获取障碍物,详细分析见下节 get_objects_cpu(yolo_blobs_, stream_, types_, nms_, yolo_param_.model_param(), light_vis_conf_threshold_, light_swt_conf_threshold_, overlapped_.get(), idx_sm_.get(), &(frame->detected_objects)); AINFO << "GetObj: " << static_cast<double>(timer.Toc()) * 0.001 << "ms"; filter_bbox(min_dims_, &(frame->detected_objects)); FeatureExtractorOptions feat_options; feat_options.normalized = true; AINFO << "Post1: " << static_cast<double>(timer.Toc()) * 0.001 << "ms"; feature_extractor_->Extract(feat_options, frame); AINFO << "Extract: " << static_cast<double>(timer.Toc()) * 0.001 << "ms"; //网络输出的bounding box是归一化的,这儿需要还原成原始尺寸 recover_bbox(frame->data_provider->src_width(), frame->data_provider->src_height() - offset_y_, offset_y_, &frame->detected_objects); // post processing int left_boundary = static_cast<int>(border_ratio_ * static_cast<float>(image_->cols())); int right_boundary = static_cast<int>((1.0f - border_ratio_) * static_cast<float>(image_->cols())); for (auto &obj : frame->detected_objects) { // recover alpha obj->camera_supplement.alpha /= ori_cycle_; // get area_id from visible_ratios if (yolo_param_.model_param().num_areas() == 0) { obj->camera_supplement.area_id = get_area_id(obj->camera_supplement.visible_ratios); } // clear cut off ratios auto &box = obj->camera_supplement.box; if (box.xmin >= left_boundary) { obj->camera_supplement.cut_off_ratios[2] = 0; } if (box.xmax <= right_boundary) { obj->camera_supplement.cut_off_ratios[3] = 0; } } AINFO << "Post2: " << static_cast<double>(timer.Toc()) * 0.001 << "ms"; return true; } |

从主机内存获取预测到的对象

void get_objects_cpu(const YoloBlobs &yolo_blobs, const cudaStream_t &stream, const std::vector<base::ObjectSubType> &types, const NMSParam &nms, const yolo::ModelParam &model_param, float light_vis_conf_threshold, float light_swt_conf_threshold, base::Blob<bool> *overlapped, base::Blob<int> *idx_sm, std::vector<base::ObjectPtr> *objects) { std::map<base::ObjectSubType, std::vector<int>> indices; std::map<base::ObjectSubType, std::vector<float>> conf_scores; int num_kept = 0; int num_classes = types.size(); //因为使用CUDA,所以从GPU中获取结果 num_kept = get_objects_gpu(yolo_blobs, stream, types, nms, model_param, light_vis_conf_threshold, light_swt_conf_threshold, overlapped, idx_sm, indices, conf_scores); objects->clear(); if (num_kept == 0) { return; } objects->reserve(num_kept+static_cast<int>(objects->size())); //从GPU中拷贝Bounding box数据到CPU const float *cpu_box_data = yolo_blobs.res_box_blob->cpu_data(); ObjectMaintainer maintainer; //indices map<label,indices> 存储每个分类的对象索引值 for (auto it = indices.begin(); it != indices.end(); ++it) { base::ObjectSubType label = it->first; if (conf_scores.find(label) == conf_scores.end()) { // Something bad happened if there are no predictions for current label. continue; } //当前对象的最大评分 const std::vector<float> &scores = conf_scores.find(label)->second; std::vector<int> &indice = it->second; for (size_t j = 0; j < indice.size(); ++j) { int idx = indice[j]; //根据索引值获取对象的bounding box的值 const float *bbox = cpu_box_data + idx * kBoxBlockSize; if (scores[idx] < model_param.confidence_threshold()) { continue; } base::ObjectPtr obj = nullptr; obj.reset(new base::Object); obj->type = base::kSubType2TypeMap.at(label); obj->sub_type = label; obj->type_probs.assign( static_cast<int>(base::ObjectType::MAX_OBJECT_TYPE), 0); obj->sub_type_probs.assign( static_cast<int>(base::ObjectSubType::MAX_OBJECT_TYPE), 0); float total = 1e-5; //获取当前bounding box下对象所属每个类的概率值 for (int k = 0; k < num_classes; ++k) { auto &vis_type_k = types[k]; auto &obj_type_k = base::kSubType2TypeMap.at(vis_type_k); auto &conf_score = conf_scores[vis_type_k][idx]; obj->type_probs[static_cast<int>(obj_type_k)] += conf_score; obj->sub_type_probs[static_cast<int>(vis_type_k)] = conf_score; total += conf_score; } obj->confidence = obj->type_probs[static_cast<int>(obj->type)]; for (size_t k = 0; k < obj->type_probs.size(); ++k) { obj->type_probs[k] /= total; } //设置bounding box的xmin,ymin,xmax,ymax fill_base(obj, bbox); //设置障碍物的长宽高alpha fill_bbox3d(model_param.with_box3d(), obj, bbox + 4); //设置前后的bounding box fill_frbox(model_param.with_frbox(), obj, bbox + 8); //设置刹车灯,左右灯的状态和可见度 fill_lights(model_param.with_lights(), obj, bbox + 16); //设置可见比例 fill_ratios(model_param.with_ratios(), obj, bbox + 22); fill_area_id(model_param.num_areas() > 0, obj, bbox + 30); //添加到返回对象 if (maintainer.Add(idx, obj)) { objects->push_back(obj); } } } |

从显卡的内存中获取预测到的对象

int get_objects_gpu(const YoloBlobs &yolo_blobs, const cudaStream_t &stream, const std::vector<base::ObjectSubType> &types, const NMSParam &nms, const yolo::ModelParam &model_param, float light_vis_conf_threshold, float light_swt_conf_threshold, base::Blob<bool> *overlapped, base::Blob<int> *idx_sm, const std::map<base::ObjectSubType, std::vector<int>> &indices_cns, const std::map<base::ObjectSubType, std::vector<float>> &conf_scores_cns) { auto& indices = const_cast<std::map<base::ObjectSubType, std::vector<int>>& >(indices_cns); auto& conf_scores = const_cast<std::map<base::ObjectSubType, std::vector<float>>& >(conf_scores_cns); bool multi_scale = false; if (yolo_blobs.det2_obj_blob) { multi_scale = true; } //8个分类 int num_classes = types.size(); //1 int batch = yolo_blobs.det1_obj_blob->shape(0); //16 int num_anchor = yolo_blobs.anchor_blob->shape(2); int num_anchor_per_scale = num_anchor; if (multi_scale) { num_anchor_per_scale /= numScales; } CHECK_EQ(batch, 1) << "batch size should be 1!"; std::vector<int> height_vec, width_vec, num_candidates_vec; //36 height_vec.push_back(yolo_blobs.det1_obj_blob->shape(1)); //90 width_vec.push_back(yolo_blobs.det1_obj_blob->shape(2)); if (multi_scale) { height_vec.push_back(yolo_blobs.det2_obj_blob->shape(1)); height_vec.push_back(yolo_blobs.det3_obj_blob->shape(1)); width_vec.push_back(yolo_blobs.det2_obj_blob->shape(2)); width_vec.push_back(yolo_blobs.det3_obj_blob->shape(2)); } //num_candidates_vec[0]=36*90*16=51840,这儿只有1个候选尺度 for (size_t i = 0; i < height_vec.size(); i++) { num_candidates_vec.push_back(height_vec[i] * width_vec[i] * num_anchor_per_scale); } //bounding box数据,目前只有1个尺度的数据,第一个元素的形状为[1,36,90,64] const float *loc_data_vec[3] = { yolo_blobs.det1_loc_blob->gpu_data(), yolo_blobs.det2_loc_blob ? yolo_blobs.det2_loc_blob->gpu_data() : nullptr, yolo_blobs.det3_loc_blob ? yolo_blobs.det3_loc_blob->gpu_data() : nullptr}; //是否含有对象(非背景)的判断,第一个元素的形状为[1,36,90,16] const float *obj_data_vec[3] = { yolo_blobs.det1_obj_blob->gpu_data(), yolo_blobs.det2_obj_blob ? yolo_blobs.det2_obj_blob->gpu_data() : nullptr, yolo_blobs.det3_obj_blob ? yolo_blobs.det3_obj_blob->gpu_data() : nullptr}; //分类数据,第一个元素的形状为[1,36,90,128] const float *cls_data_vec[3] = { yolo_blobs.det1_cls_blob->gpu_data(), yolo_blobs.det2_cls_blob ? yolo_blobs.det2_cls_blob->gpu_data() : nullptr, yolo_blobs.det3_cls_blob ? yolo_blobs.det3_cls_blob->gpu_data() : nullptr}; const float *ori_data_vec[3] = { get_gpu_data(model_param.with_box3d(), *yolo_blobs.det1_ori_blob), multi_scale ? get_gpu_data(model_param.with_box3d(), *yolo_blobs.det2_ori_blob) : nullptr, multi_scale ? get_gpu_data(model_param.with_box3d(), *yolo_blobs.det3_ori_blob) : nullptr}; const float *dim_data_vec[3] = { get_gpu_data(model_param.with_box3d(), *yolo_blobs.det1_dim_blob), multi_scale ? get_gpu_data(model_param.with_box3d(), *yolo_blobs.det2_dim_blob) : nullptr, multi_scale ? get_gpu_data(model_param.with_box3d(), *yolo_blobs.det3_dim_blob) : nullptr}; // TODO[KaWai]: add 3 scale frbox data and light data. const float *lof_data = get_gpu_data(model_param.with_frbox(), *yolo_blobs.lof_blob); const float *lor_data = get_gpu_data(model_param.with_frbox(), *yolo_blobs.lor_blob); const float *area_id_data = get_gpu_data(model_param.num_areas() > 0, *yolo_blobs.area_id_blob); const float *visible_ratio_data = get_gpu_data(model_param.with_ratios(), *yolo_blobs.visible_ratio_blob); const float *cut_off_ratio_data = get_gpu_data(model_param.with_ratios(), *yolo_blobs.cut_off_ratio_blob); const auto &with_lights = model_param.with_lights(); const float *brvis_data = get_gpu_data(with_lights, *yolo_blobs.brvis_blob); const float *brswt_data = get_gpu_data(with_lights, *yolo_blobs.brswt_blob); const float *ltvis_data = get_gpu_data(with_lights, *yolo_blobs.ltvis_blob); const float *ltswt_data = get_gpu_data(with_lights, *yolo_blobs.ltswt_blob); const float *rtvis_data = get_gpu_data(with_lights, *yolo_blobs.rtvis_blob); const float *rtswt_data = get_gpu_data(with_lights, *yolo_blobs.rtswt_blob); //all_scales_num_candidates=1*36*90*16=51840 int all_scales_num_candidates = 0; for (size_t i = 0; i < num_candidates_vec.size(); i++) { all_scales_num_candidates += num_candidates_vec[i]; } //[1,1,51840,32] yolo_blobs.res_box_blob->Reshape( std::vector<int>{1, 1, all_scales_num_candidates, kBoxBlockSize}); //[1,1,9,51840] yolo_blobs.res_cls_blob->Reshape( std::vector<int>{1, 1, num_classes + 1, all_scales_num_candidates}); //当前代码是CUDA程序,获取GPU对应的指针数据,然后调用下面的CUDA Kernel计算 float *res_box_data = yolo_blobs.res_box_blob->mutable_gpu_data(); float *res_cls_data = yolo_blobs.res_cls_blob->mutable_gpu_data(); const int thread_size = 512; // TODO[KaWai]: use different stream to process scales in parallel. int num_candidates_offset = 0; //迭代每个候选尺度,当前只有1个36*90,原始图片进行了4次下采样 for (int i = 0; i < num_candidates_vec.size(); i++) { int block_size = (num_candidates_vec[i] + thread_size - 1) / thread_size; //[1,36,90,64] const float *loc_data = loc_data_vec[i]; //[1,36,90,16] const float *obj_data = obj_data_vec[i]; //[1,36,90,128] const float *cls_data = cls_data_vec[i]; const float *ori_data = ori_data_vec[i]; const float *dim_data = dim_data_vec[i]; const float *anchor_data = yolo_blobs.anchor_blob->gpu_data() + num_anchor_per_scale * 2 * i; const float *expand_data = yolo_blobs.expand_blob->gpu_data(); //90 const int width = width_vec[i]; //36 const int height = height_vec[i]; //分配到每个CUDA Kernel上进行并行计算 get_object_kernel<<<block_size, thread_size, 0, stream>>>( num_candidates_vec[i], loc_data, obj_data, cls_data, ori_data, dim_data, lof_data, lor_data, area_id_data, visible_ratio_data, cut_off_ratio_data, brvis_data, brswt_data, ltvis_data, ltswt_data, rtvis_data, rtswt_data, anchor_data, yolo_blobs.expand_blob->gpu_data(), width, height, num_anchor_per_scale, num_classes, model_param.confidence_threshold(), light_vis_conf_threshold, light_swt_conf_threshold, model_param.with_box3d(), model_param.with_frbox(), model_param.with_lights(), model_param.with_ratios(), multi_scale, model_param.num_areas(), res_box_data + num_candidates_offset * kBoxBlockSize, res_cls_data, num_candidates_offset, all_scales_num_candidates); cudaStreamSynchronize(stream); num_candidates_offset += num_candidates_vec[i]; } const float *cpu_cls_data = yolo_blobs.res_cls_blob->cpu_data(); std::vector<int> all_indices(all_scales_num_candidates); std::iota(all_indices.begin(), all_indices.end(), 0); std::vector<int> rest_indices; int top_k = idx_sm->count(); int num_kept = 0; // inter-cls NMS apply_nms_gpu( res_box_data, cpu_cls_data + num_classes * all_scales_num_candidates, all_indices, kBoxBlockSize, nms.inter_cls_conf_thresh, top_k, nms.inter_cls_nms_thresh, &rest_indices, overlapped, idx_sm, stream); for (int k = 0; k < num_classes; ++k) { apply_nms_gpu(res_box_data, cpu_cls_data + k * all_scales_num_candidates, rest_indices, kBoxBlockSize, model_param.confidence_threshold(), top_k, nms.threshold, &(indices[types[k]]), overlapped, idx_sm, stream); num_kept += indices[types[k]].size(); std::vector<float> conf_score( cpu_cls_data + k * all_scales_num_candidates, cpu_cls_data + (k + 1) * all_scales_num_candidates); conf_scores.insert(std::make_pair(types[k], conf_score)); cudaStreamSynchronize(stream); } return num_kept; } __global__ void get_object_kernel( int n, const float *loc_data, const float *obj_data, const float *cls_data, const float *ori_data, const float *dim_data, const float *lof_data, const float *lor_data, const float *area_id_data, const float *visible_ratio_data, const float *cut_off_ratio_data, const float *brvis_data, const float *brswt_data, const float *ltvis_data, const float *ltswt_data, const float *rtvis_data, const float *rtswt_data, const float *anchor_data, const float *expand_data, int width, int height, int num_anchors, int num_classes, float confidence_threshold, float light_vis_conf_threshold, float light_swt_conf_threshold, bool with_box3d, bool with_frbox, bool with_lights, bool with_ratios, bool multi_scale, int num_areas, float *res_box_data, float *res_cls_data, int res_cls_offset, int all_scales_num_candidates) { for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < (n); i += blockDim.x * gridDim.x) { //32 int box_block = kBoxBlockSize; //当前bounding box索引值 int idx = i; int c = idx % num_anchors; idx = idx / num_anchors; int w = idx % width; idx = idx / width; int h = idx; //当前bounding box索引值 int loc_index = (h * width + w) * num_anchors + c; //当前bounding box索引偏移量,对应的4个x,y,w,h值,每个框有4个值,索引偏移量乘4 int offset_loc = loc_index * 4; //当前分类索引偏移量,对应的8个分类,索引偏移量乘8 int offset_cls = loc_index * num_classes; //当前grid有多大概率是物体而不是背景 float scale = obj_data[loc_index]; //当前grid预测的bounding box在特征图上对应的中心值cx,除以width是为了得到归一化值 //sigmoid_gpu可以保证sigmoid_gpu(loc_data[offset_loc + 0])的结果在[-1,1]之间 //这样cx的值不会漂移出当前grid。 //loc_data[0,1,2,3]依次存储预测的中心点x,y和预测的bounding box宽度和高度。 float cx = (w + sigmoid_gpu(loc_data[offset_loc + 0])) / width; float cy = (h + sigmoid_gpu(loc_data[offset_loc + 1])) / height; //hw的值为预测的bounding box宽度的一半 float hw = exp(max(minExpPower, min(loc_data[offset_loc + 2], maxExpPower))) * anchor_data[2 * c] / width * 0.5; float hh = exp(max(minExpPower, min(loc_data[offset_loc + 3], maxExpPower))) * anchor_data[2 * c + 1] / height * 0.5; float max_prob = 0.f; int max_index = 0; for (int k = 0; k < num_classes; ++k) { //当前分类的概率需要乘以当前grid是否含有object的概率,如果不含有object直接概率为0 float prob = cls_data[offset_cls + k] * scale; res_cls_data[k * all_scales_num_candidates + res_cls_offset + i] = prob; if (prob > max_prob) { max_prob = prob; max_index = k; } } //只有8个分类,第9个值存储最大分类概率值 res_cls_data[num_classes * all_scales_num_candidates + res_cls_offset + i] = max_prob; auto &&dst_ptr = res_box_data + i * box_block; hw += expand_data[max_index]; //算出bounding box的xmin,ymin,xmax,minmax dst_ptr[0] = cx - hw; dst_ptr[1] = cy - hh; dst_ptr[2] = cx + hw; dst_ptr[3] = cy + hh; if (with_box3d) { int offset_ori = loc_index * 2; dst_ptr[4] = atan2(ori_data[offset_ori + 1], ori_data[offset_ori]); int offset_dim = loc_index * 3; if (multi_scale) { offset_dim = loc_index * num_classes * 3 + max_index * 3; } //物体3维尺寸 dst_ptr[5] = dim_data[offset_dim + 0]; dst_ptr[6] = dim_data[offset_dim + 1]; dst_ptr[7] = dim_data[offset_dim + 2]; } if (with_frbox) { { int offset_lof = loc_index * 4; auto &&src_ptr = lof_data + offset_lof; auto sb_x = src_ptr[0] * hw * 2 + cx; auto sb_y = src_ptr[1] * hh * 2 + cy; auto sb_hw = exp(src_ptr[2]) * hw; auto sb_hh = exp(src_ptr[3]) * hh; dst_ptr[8] = sb_x - sb_hw; dst_ptr[9] = sb_y - sb_hh; dst_ptr[10] = sb_x + sb_hw; dst_ptr[11] = sb_y + sb_hh; } { int offset_lor = loc_index * 4; auto &&src_ptr = lor_data + offset_lor; auto sb_x = src_ptr[0] * hw * 2 + cx; auto sb_y = src_ptr[1] * hh * 2 + cy; auto sb_hw = exp(src_ptr[2]) * hw; auto sb_hh = exp(src_ptr[3]) * hh; dst_ptr[12] = sb_x - sb_hw; dst_ptr[13] = sb_y - sb_hh; dst_ptr[14] = sb_x + sb_hw; dst_ptr[15] = sb_y + sb_hh; } } if (with_lights) { dst_ptr[16] = sigmoid_gpu(brvis_data[loc_index]); dst_ptr[17] = sigmoid_gpu(brswt_data[loc_index]); dst_ptr[18] = sigmoid_gpu(ltvis_data[loc_index]); dst_ptr[19] = sigmoid_gpu(ltswt_data[loc_index]); dst_ptr[20] = sigmoid_gpu(rtvis_data[loc_index]); dst_ptr[21] = sigmoid_gpu(rtswt_data[loc_index]); dst_ptr[16] = dst_ptr[16] > light_vis_conf_threshold ? dst_ptr[16] : 0; dst_ptr[18] = dst_ptr[18] > light_vis_conf_threshold ? dst_ptr[18] : 0; dst_ptr[20] = dst_ptr[20] > light_vis_conf_threshold ? dst_ptr[20] : 0; float swt_score = 0; swt_score = dst_ptr[16] * dst_ptr[17]; dst_ptr[17] = swt_score > light_swt_conf_threshold ? swt_score : 0; swt_score = dst_ptr[18] * dst_ptr[19]; dst_ptr[19] = swt_score > light_swt_conf_threshold ? swt_score : 0; swt_score = dst_ptr[20] * dst_ptr[21]; dst_ptr[21] = swt_score > light_swt_conf_threshold ? swt_score : 0; } if (with_ratios) { // 0~3: cos2, left, visa, visb auto vis_pred = visible_ratio_data + loc_index * 4; auto vis_ptr = dst_ptr + 22; vis_ptr[0] = vis_ptr[1] = vis_ptr[2] = vis_ptr[3] = 0; const float hi_th = 0.75; const float lo_th = 1.f - hi_th; if (vis_pred[2] >= hi_th && vis_pred[3] >= hi_th) { // 2 (1, 3) vis_ptr[0] = vis_pred[0]; vis_ptr[1] = 1 - vis_pred[0]; } else if (vis_pred[2] <= lo_th && vis_pred[3] >= hi_th) { // 4 (3, 5) vis_ptr[2] = vis_pred[0]; vis_ptr[1] = 1 - vis_pred[0]; } else if (vis_pred[2] <= lo_th && vis_pred[3] <= lo_th) { // 6 (5, 7) vis_ptr[2] = vis_pred[0]; vis_ptr[3] = 1 - vis_pred[0]; } else if (vis_pred[2] >= hi_th && vis_pred[3] <= lo_th) { // 8 (7, 1) vis_ptr[0] = vis_pred[0]; vis_ptr[3] = 1 - vis_pred[0]; } else { vis_ptr[2] = vis_pred[0]; if (vis_pred[1] > 0.5) { vis_ptr[1] = 1 - vis_pred[0]; } else { vis_ptr[3] = 1 - vis_pred[0]; } } int offset_cut = loc_index * 4; dst_ptr[26] = cut_off_ratio_data[offset_cut + 0]; dst_ptr[27] = cut_off_ratio_data[offset_cut + 1]; dst_ptr[28] = cut_off_ratio_data[offset_cut + 2]; dst_ptr[29] = cut_off_ratio_data[offset_cut + 3]; } if (num_areas > 0) { int offset_area_id = loc_index * num_areas; int max_area_id = 0; for (int area_id = 1; area_id < num_areas; ++area_id) { if (area_id_data[offset_area_id + area_id] > area_id_data[offset_area_id + max_area_id]) { max_area_id = area_id; } } dst_ptr[30] = max_area_id + 1; dst_ptr[31] = area_id_data[offset_area_id + max_area_id]; } } } |

本作品采用知识共享署名 4.0 国际许可协议进行许可。

Yolo算法来进行障碍物检测(type-id)

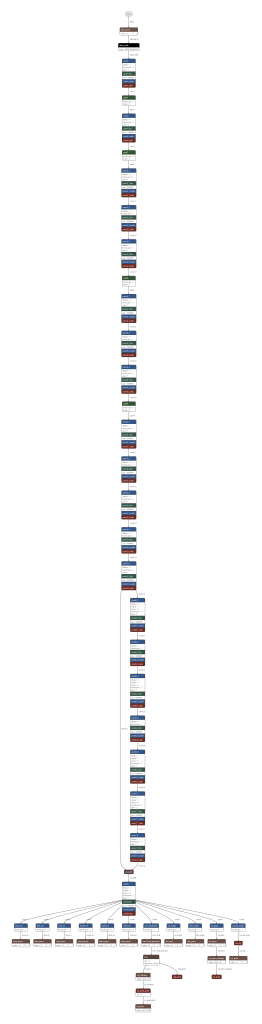

Yolo算法来进行障碍物检测(type-id) 本测试使用的Yolo网络模型

本测试使用的Yolo网络模型