原创文章,转载请注明: 转载自慢慢的回味

本文链接地址: Apollo自动驾驶车道检测

本篇以单元测试camera_lib_lane_postprocessor_darkscnn_lane_postprocessor_test.cc的测试camera_lane_postprocessor_point_test为例来分析Apollo自动驾驶怎么进行车道检测。

利用DarkSCNN算法对摄像头拍摄到的路面图片进行预测,来获取车道线在以车辆坐标系下的位置。

Content:

DarkSCNN车道检测测试用例

TEST(darkSCNNLanePostprocessor, camera_lane_postprocessor_point_test) { unsetenv("MODULE_PATH"); unsetenv("CYBER_PATH"); // turn on this flag to output visualization results bool enable_visualization_ = true; // initialize lane detector LaneDetectorInitOptions init_options; LaneDetectorOptions detetor_options; //侦测器初始化参数目录,config.pt如下: //model_param { // model_name: "darkSCNN" 模型名称 // proto_file: "deploy.prototxt" 模型构建文件 // weight_file: "deploy.caffemodel" 模型的权重参数文件 // resize_height: 480 模型的输入输出需要图片的尺寸高度 // resize_width: 640 // model_type: "RTNet" init_options.conf_file = "config.pt"; init_options.root_dir = "/apollo/modules/perception/testdata/" "camera/lib/lane/postprocessor/darkSCNN/data/"; base::BrownCameraDistortionModel model; //加载相机的固有内部参数 //这个是相机生产好后就具有的不变参数 //height: 1080 分辨率高度 //width: 1920 分辨率宽度 //distortion_model: plumb_bob 畸变模型 //D: [-0.5681640875816327, 0.3220844473501115, -0.0028078227984000767, -0.0009352721569044129, 0.0] //失真系数,包括(k1, k2, t1, t2, k3) //K: [2022.5639693330495, 0.0, 989.3893672805314, 0.0, 2014.0535251884398, 570.6145712367711, 0.0, 0.0, 1.0] //相机内参, 3x3矩阵【fx 0 0;0 fy 0; cx cy 1】 //R: [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0] //一个3x3的旋转矩阵,仅对双目相机有效,使左右极线平行。 //P: [1729.4515380859375, 0.0, 997.0791139046996, 0.0, 0.0, 1926.0577392578125, 571.4609883012963, 0.0, 0.0, 0.0, 1.0, 0.0] //3x4投影矩阵,即【fx′ 0 cx′ Tx; 0 fy′ cy′ Ty; 0 0 1 0】,在单目相机中,Tx=Ty=0。 common::LoadBrownCameraIntrinsic( "/apollo/modules/perception/testdata/" "camera/lib/lane/postprocessor/darkSCNN/params/" "onsemi_obstacle_intrinsics.yaml", &model); init_options.base_camera_model = model.get_camera_model(); // BaseLaneDetector *detector = // BaseLaneDetectorRegisterer::GetInstanceByName("darkSCNNLaneDetector"); std::shared_ptr<DarkSCNNLaneDetector> detector(new DarkSCNNLaneDetector); AINFO << "detector: " << detector->Name(); //详见下面的 车道检测器初始化 EXPECT_TRUE(detector->Init(init_options)); // initialize lane postprocessor std::shared_ptr<DarkSCNNLanePostprocessor> lane_postprocessor; lane_postprocessor.reset(new DarkSCNNLanePostprocessor); LanePostprocessorInitOptions postprocessor_init_options; postprocessor_init_options.detect_config_root = "/apollo/modules/perception/testdata/" "camera/lib/lane/postprocessor/darkSCNN/data/"; postprocessor_init_options.detect_config_name = "config.pt"; postprocessor_init_options.root_dir = "/apollo/modules/perception/testdata/" "camera/lib/lane/postprocessor/darkSCNN/data/"; postprocessor_init_options.conf_file = "lane_postprocessor_config.pt"; //车道检测后处理器初始化 lane_postprocessor->Init(postprocessor_init_options); LanePostprocessorOptions postprocessor_options; const std::string result_dir = "./result"; // set pitch angle float pitch_angle = 0.0f; // set camera_ground_height (unit:meter) float camera_ground_height = 1.6f; CameraFrame frame; frame.camera_k_matrix = model.get_intrinsic_params(); CalibrationServiceInitOptions calibration_service_init_options; calibration_service_init_options.calibrator_working_sensor_name = "onsemi_obstacle"; std::map<std::string, Eigen::Matrix3f> name_intrinsic_map; name_intrinsic_map["onsemi_obstacle"] = frame.camera_k_matrix; calibration_service_init_options.name_intrinsic_map = name_intrinsic_map; calibration_service_init_options.calibrator_method = "LaneLineCalibrator"; calibration_service_init_options.image_height = static_cast<int>(model.get_height()); calibration_service_init_options.image_width = static_cast<int>(model.get_width()); OnlineCalibrationService calibration_service; //镜头矫正服务初始化 ACHECK(calibration_service.Init(calibration_service_init_options)); std::map<std::string, float> name_camera_ground_height_map; std::map<std::string, float> name_camera_pitch_angle_diff_map; name_camera_ground_height_map["onsemi_obstacle"] = camera_ground_height; name_camera_pitch_angle_diff_map["onsemi_obstacle"] = 0; calibration_service.SetCameraHeightAndPitch(name_camera_ground_height_map, name_camera_pitch_angle_diff_map, pitch_angle); frame.calibration_service = &calibration_service; // image data initialized std::shared_ptr<base::SyncedMemory> img_gpu_data; DataProvider::InitOptions dp_init_options; DataProvider data_provider; frame.data_provider = &data_provider; base::MotionBufferPtr motion_buffer; // initialize visualizer and set homography for lane_postprocessor Visualizer visualize_; double pitch_adj = 0; double yaw_adj = 0; double roll_adj = 0; std::string visual_camera = "onsemi_obstacle"; EigenMap<std::string, Eigen::Matrix4d> extrinsic_map; EigenMap<std::string, Eigen::Matrix3f> intrinsic_map; Eigen::Matrix4d ex_camera2lidar; Eigen::Matrix4d ex_lidar2imu; Eigen::Matrix3d homography_im2car_; //加载相机的固有内外部参数 //这是相机在安装的时候决定的,包括平移和旋转。比如: //transform: // translation: // x: 0.4739955200956851 // y: 0.1816468094791985 // z: -0.1045108851198503 // rotation: // x: -0.5085689478608929 // y: 0.5050486777018733 // z: -0.4870943885012868 // w: 0.4990215577646031 LoadExtrinsics( "/apollo/modules/perception/testdata/" "camera/lib/lane/postprocessor/darkSCNN/params/" "onsemi_obstacle_extrinsics.yaml", &ex_camera2lidar); LoadExtrinsics( "/apollo/modules/perception/testdata/" "camera/lib/lane/postprocessor/darkSCNN/params/" "velodyne128_novatel_extrinsics.yaml", &ex_lidar2imu); intrinsic_map["onsemi_obstacle"] = frame.camera_k_matrix; extrinsic_map["onsemi_obstacle"] = ex_camera2lidar; std::vector<std::string> camera_names; camera_names.emplace_back(visual_camera); //初始化相机可视化组件 EXPECT_TRUE(visualize_.Init_all_info_single_camera( camera_names, visual_camera, intrinsic_map, extrinsic_map, ex_lidar2imu, pitch_adj, yaw_adj, roll_adj, calibration_service_init_options.image_height, calibration_service_init_options.image_width)); homography_im2car_ = visualize_.homography_im2car(visual_camera); lane_postprocessor->SetIm2CarHomography(homography_im2car_); AINFO << "Initilize visualizer finished!"; if (enable_visualization_) { visualize_.write_out_img_ = true; visualize_.cv_imshow_img_ = true; visualize_.SetDirectory(result_dir); } // process each test image for (int i = 1; i < 5; i++) { std::string impath = cv::format( "/apollo/modules/perception/testdata/" "camera/lib/lane/postprocessor/" "darkSCNN/data/test_%d.jpg", i); cv::Mat img = cv::imread(impath); ACHECK(!img.empty()) << "input image is empty."; int size = img.cols * img.rows * img.channels(); img_gpu_data.reset(new base::SyncedMemory(size, true)); memcpy(img_gpu_data->mutable_cpu_data(), img.data, size * sizeof(uint8_t)); dp_init_options.image_height = img.rows; dp_init_options.image_width = img.cols; dp_init_options.device_id = 0; dp_init_options.sensor_name = "onsemi_obstacle"; frame.data_provider->Init(dp_init_options); frame.data_provider->FillImageData( img.rows, img.cols, (const uint8_t *)(img_gpu_data->mutable_gpu_data()), "bgr8"); //检测帧中的车道线 EXPECT_TRUE(detector->Detect(detetor_options, &frame)); AINFO << "detection finished!"; //输出车道掩膜图,单元格原始数据为1-13,为了能够看清楚,所以像素值20倍了 cv::Mat lane_map(480, 640, CV_32FC1); memcpy(lane_map.data, frame.lane_detected_blob->cpu_data(), 640 * 480 * sizeof(float)); for (int x = 1; x < lane_map.cols - 1; ++x) { for (int y = 1; y < lane_map.rows - 1; ++y) { int value = static_cast<int>(round(lane_map.at<float>(y, x))); // lane on left if( value > 0 && value < 13 ){ lane_map.at<float>(y, x) = value * 20; } } } char path[1000]; snprintf(path, sizeof(path), "%s/%06d_lanemap.jpg", result_dir.c_str(), i ); cv::imwrite(path, lane_map); //进行2D和3D的后续处理 // postprocess EXPECT_EQ(lane_postprocessor->Name(), "DarkSCNNLanePostprocessor"); EXPECT_TRUE(lane_postprocessor->Process2D(postprocessor_options, &frame)); AINFO << "2D postprocess finished!"; EXPECT_TRUE(lane_postprocessor->Process3D(postprocessor_options, &frame)); AINFO << "3D postprocess finished!"; if (enable_visualization_) { frame.frame_id = i; frame.timestamp = static_cast<double>(i); Eigen::Affine3d world2camera = Eigen::Affine3d::Identity(); visualize_.ShowResult_all_info_single_camera(img, frame, motion_buffer, world2camera); } // delete detector; } } |

车道检测器初始化

bool DarkSCNNLaneDetector::Init(const LaneDetectorInitOptions &options) { std::string proto_path = GetAbsolutePath(options.root_dir, options.conf_file); if (!GetProtoFromFile(proto_path, &darkscnn_param_)) { AINFO << "load proto param failed, root dir: " << options.root_dir; return false; } std::string param_str; google::protobuf::TextFormat::PrintToString(darkscnn_param_, ¶m_str); AINFO << "darkSCNN param: " << param_str; const auto model_param = darkscnn_param_.model_param(); std::string model_root = GetAbsolutePath(options.root_dir, model_param.model_name()); std::string proto_file = GetAbsolutePath(model_root, model_param.proto_file()); std::string weight_file = GetAbsolutePath(model_root, model_param.weight_file()); AINFO << " proto_file: " << proto_file; AINFO << " weight_file: " << weight_file; AINFO << " model_root: " << model_root; //得到相机的输入分辨率 base_camera_model_ = options.base_camera_model; if (base_camera_model_ == nullptr) { AERROR << "options.intrinsic is nullptr!"; input_height_ = 1080; input_width_ = 1920; } else { input_height_ = static_cast<uint16_t>(base_camera_model_->get_height()); input_width_ = static_cast<uint16_t>(base_camera_model_->get_width()); } ACHECK(input_width_ > 0) << "input width should be more than 0"; ACHECK(input_height_ > 0) << "input height should be more than 0"; AINFO << "input_height: " << input_height_; AINFO << "input_width: " << input_width_; //得到相机图片处理的偏移量,调整大小参数,剪裁参数, //通过基础处理,图片更有利于SCNN识别。 // compute image provider parameters input_offset_y_ = static_cast<uint16_t>(model_param.input_offset_y()); input_offset_x_ = static_cast<uint16_t>(model_param.input_offset_x()); resize_height_ = static_cast<uint16_t>(model_param.resize_height()); resize_width_ = static_cast<uint16_t>(model_param.resize_width()); crop_height_ = static_cast<uint16_t>(model_param.crop_height()); crop_width_ = static_cast<uint16_t>(model_param.crop_width()); confidence_threshold_lane_ = model_param.confidence_threshold(); CHECK_LE(crop_height_, input_height_) << "crop height larger than input height"; CHECK_LE(crop_width_, input_width_) << "crop width larger than input width"; if (model_param.is_bgr()) { data_provider_image_option_.target_color = base::Color::BGR; image_mean_[0] = model_param.mean_b(); image_mean_[1] = model_param.mean_g(); image_mean_[2] = model_param.mean_r(); } else { data_provider_image_option_.target_color = base::Color::RGB; image_mean_[0] = model_param.mean_r(); image_mean_[1] = model_param.mean_g(); image_mean_[2] = model_param.mean_b(); } data_provider_image_option_.do_crop = true; data_provider_image_option_.crop_roi.x = input_offset_x_; data_provider_image_option_.crop_roi.y = input_offset_y_; data_provider_image_option_.crop_roi.height = crop_height_; data_provider_image_option_.crop_roi.width = crop_width_; cudaDeviceProp prop; cudaGetDeviceProperties(&prop, options.gpu_id); AINFO << "GPU: " << prop.name; const auto net_param = darkscnn_param_.net_param(); net_inputs_.push_back(net_param.input_blob()); net_outputs_.push_back(net_param.seg_blob()); if (model_param.model_type() == "CaffeNet" && net_param.has_vpt_blob() && net_param.vpt_blob().size() > 0) { net_outputs_.push_back(net_param.vpt_blob()); } //SCNN网络输入参数 for (auto name : net_inputs_) { AINFO << "net input blobs: " << name; } //SCNN网络输出参数 for (auto name : net_outputs_) { AINFO << "net output blobs: " << name; } //根据配置加载网络模型,比如这儿使用Nvidia的RTNet,加载过程见下一节 //然后初始化网络cnnadapter_lane_->Init // initialize caffe net const auto &model_type = model_param.model_type(); AINFO << "model_type: " << model_type; cnnadapter_lane_.reset( inference::CreateInferenceByName(model_type, proto_file, weight_file, net_outputs_, net_inputs_, model_root)); ACHECK(cnnadapter_lane_ != nullptr); cnnadapter_lane_->set_gpu_id(options.gpu_id); ACHECK(resize_width_ > 0) << "resize width should be more than 0"; ACHECK(resize_height_ > 0) << "resize height should be more than 0"; std::vector<int> shape = {1, 3, resize_height_, resize_width_}; std::map<std::string, std::vector<int>> input_reshape{ {net_inputs_[0], shape}}; AINFO << "input_reshape: " << input_reshape[net_inputs_[0]][0] << ", " << input_reshape[net_inputs_[0]][1] << ", " << input_reshape[net_inputs_[0]][2] << ", " << input_reshape[net_inputs_[0]][3]; if (!cnnadapter_lane_->Init(input_reshape)) { AINFO << "net init fail."; return false; } for (auto &input_blob_name : net_inputs_) { auto input_blob = cnnadapter_lane_->get_blob(input_blob_name); AINFO << input_blob_name << ": " << input_blob->channels() << " " << input_blob->height() << " " << input_blob->width(); } auto output_blob = cnnadapter_lane_->get_blob(net_outputs_[0]); AINFO << net_outputs_[0] << " : " << output_blob->channels() << " " << output_blob->height() << " " << output_blob->width(); lane_output_height_ = output_blob->height(); lane_output_width_ = output_blob->width(); num_lanes_ = output_blob->channels(); if (net_outputs_.size() > 1) { vpt_mean_.push_back(model_param.vpt_mean_dx()); vpt_mean_.push_back(model_param.vpt_mean_dy()); vpt_std_.push_back(model_param.vpt_std_dx()); vpt_std_.push_back(model_param.vpt_std_dy()); } std::vector<int> lane_shape = {1, 1, lane_output_height_, lane_output_width_}; lane_blob_.reset(new base::Blob<float>(lane_shape)); return true; } |

RTNet网络加载

//根据配置的参数RTNet创建RTNet网络 Inference *CreateInferenceByName(const std::string &name, const std::string &proto_file, const std::string &weight_file, const std::vector<std::string> &outputs, const std::vector<std::string> &inputs, const std::string &model_root) { if (name == "RTNet") { return new RTNet(proto_file, weight_file, outputs, inputs); } else if (name == "RTNetInt8") { return new RTNet(proto_file, weight_file, outputs, inputs, model_root); } else if (name == "TorchDet") { return new TorchDet(proto_file, weight_file, outputs, inputs); } else if (name == "TorchNet") { return new TorchNet(proto_file, weight_file, outputs, inputs); } else if (name == "Obstacle") { return new ObstacleDetector(proto_file, weight_file, outputs, inputs); } return nullptr; } //构造函数加载权值和网络参数 RTNet::RTNet(const std::string &net_file, const std::string &model_file, const std::vector<std::string> &outputs, const std::vector<std::string> &inputs) : output_names_(outputs), input_names_(inputs) { loadWeights(model_file, &weight_map_); net_param_.reset(new NetParameter); loadNetParams(net_file, net_param_.get()); } //初始化网络,通过parse_with_api从文件中读取网络的模型并生成网络 bool RTNet::Init(const std::map<std::string, std::vector<int>> &shapes) { if (gpu_id_ < 0) { AINFO << "must use gpu mode"; return false; } BASE_CUDA_CHECK(cudaSetDevice(gpu_id_)); // stream will only be destoried for gpu_id_ >= 0 cudaStreamCreate(&stream_); builder_ = nvinfer1::createInferBuilder(rt_gLogger); network_ = builder_->createNetwork(); parse_with_api(shapes); builder_->setMaxBatchSize(max_batch_size_); workspaceSize_ = 1 << 30; builder_->setMaxWorkspaceSize(workspaceSize_); cudaDeviceProp prop; cudaGetDeviceProperties(&prop, gpu_id_); bool int8_mode = checkInt8(prop.name, calibrator_); builder_->setInt8Mode(int8_mode); builder_->setInt8Calibrator(calibrator_); builder_->setDebugSync(true); nvinfer1::ICudaEngine *engine = builder_->buildCudaEngine(*network_); context_ = engine->createExecutionContext(); buffers_.resize(input_names_.size() + output_names_.size()); init_blob(&input_names_); init_blob(&output_names_); return true; } void RTNet::parse_with_api( const std::map<std::string, std::vector<int>> &shapes) { CHECK_GT(net_param_->layer_size(), 0); std::vector<LayerParameter> order; TensorMap tensor_map; TensorDimsMap tensor_dims_map; ParseNetParam(*net_param_, &tensor_dims_map, &tensor_modify_map_, &order); //添加网络输入层,输入层的名字为data,形状为[1,3,480,640] addInput(tensor_dims_map, shapes, &tensor_map); for (auto layer_param : order) { std::vector<nvinfer1::ITensor *> inputs; for (int j = 0; j < layer_param.bottom_size(); j++) { CHECK_NOTNULL(tensor_map[tensor_modify_map_[layer_param.bottom(j)]]); inputs.push_back(tensor_map[tensor_modify_map_[layer_param.bottom(j)]]); } //依次添加其他网络层 addLayer(layer_param, inputs.data(), layer_param.bottom_size(), &weight_map_, network_, &tensor_map, &tensor_modify_map_); } //输出层的形状为[1,13,480,640],每个通道表达所属每个车道的信息,图片上的单元格表示当前车道 //在当前位置是车道线的概率 CHECK_NE(output_names_.size(), static_cast<size_t>(0)); std::sort(output_names_.begin(), output_names_.end()); auto last = std::unique(output_names_.begin(), output_names_.end()); output_names_.erase(last, output_names_.end()); for (auto iter = output_names_.begin(); iter != output_names_.end();) { if (tensor_map.find(tensor_modify_map_[*iter]) != tensor_map.end()) { network_->markOutput(*tensor_map[tensor_modify_map_[*iter]]); iter++; } else { AINFO << "Erase output: " << *iter; iter = output_names_.erase(iter); } } } void RTNet::addLayer(const LayerParameter &layer_param, nvinfer1::ITensor *const *inputs, int nbInputs, WeightMap *weight_map, nvinfer1::INetworkDefinition *net, TensorMap *tensor_map, TensorModifyMap *tensor_modify_map) { //卷积层 if (layer_param.type() == "Convolution") { addConvLayer(layer_param, inputs, weight_map, net, tensor_map, tensor_modify_map); } //反卷积层 else if (layer_param.type() == "Deconvolution") { addDeconvLayer(layer_param, inputs, weight_map, net, tensor_map, tensor_modify_map); } //激活层 else if (layer_param.type() == "Sigmoid" || layer_param.type() == "TanH" || layer_param.type() == "ReLU") { addActiveLayer(layer_param, inputs, nbInputs, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "Concat") { addConcatLayer(layer_param, inputs, nbInputs, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "Slice") { addSliceLayer(layer_param, inputs, nbInputs, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "Reshape") { addReshapeLayer(layer_param, inputs, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "Padding") { addPaddingLayer(layer_param, inputs, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "Pooling") { addPoolingLayer(layer_param, inputs, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "Permute") { addPermuteLayer(layer_param, inputs, nbInputs, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "InnerProduct") { addInnerproductLayer(layer_param, inputs, weight_map, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "BatchNorm") { addBatchnormLayer(layer_param, inputs, weight_map, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "Scale") { addScaleLayer(layer_param, inputs, weight_map, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "Softmax") { addSoftmaxLayer(layer_param, inputs, nbInputs, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "Eltwise") { addEltwiseLayer(layer_param, inputs, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "ArgMax") { addArgmaxLayer(layer_param, inputs, nbInputs, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "Dropout") { AINFO << "skip dropout"; } else if (layer_param.type() == "Power") { addScaleLayer(layer_param, inputs, weight_map, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "DFMBPSROIAlign") { addDFMBPSROIAlignLayer(layer_param, inputs, nbInputs, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "RCNNProposal") { addRCNNProposalLayer(layer_param, inputs, nbInputs, net, tensor_map, tensor_modify_map); } else if (layer_param.type() == "RPNProposalSSD") { addRPNProposalSSDLayer(layer_param, inputs, nbInputs, net, tensor_map, tensor_modify_map); } else { AWARN << "unknown layer type:" << layer_param.type(); } } |

SCNN车道检测主函数

bool DarkSCNNLaneDetector::Detect(const LaneDetectorOptions &options, CameraFrame *frame) { if (frame == nullptr) { AINFO << "camera frame is empty."; return false; } auto start = std::chrono::high_resolution_clock::now(); auto data_provider = frame->data_provider; if (input_width_ != data_provider->src_width()) { AERROR << "Input size is not correct: " << input_width_ << " vs " << data_provider->src_width(); return false; } if (input_height_ != data_provider->src_height()) { AERROR << "Input size is not correct: " << input_height_ << " vs " << data_provider->src_height(); return false; } // use data provider to crop input image if (!data_provider->GetImage(data_provider_image_option_, &image_src_)) { return false; } // bottom 0 is data auto input_blob = cnnadapter_lane_->get_blob(net_inputs_[0]); auto blob_channel = input_blob->channels(); auto blob_height = input_blob->height(); auto blob_width = input_blob->width(); ADEBUG << "input_blob: " << blob_channel << " " << blob_height << " " << blob_width << std::endl; if (blob_height != resize_height_) { AERROR << "height is not equal" << blob_height << " vs " << resize_height_; return false; } if (blob_width != resize_width_) { AERROR << "width is not equal" << blob_width << " vs " << resize_width_; return false; } ADEBUG << "image_blob: " << image_src_.blob()->shape_string(); ADEBUG << "input_blob: " << input_blob->shape_string(); // resize the cropped image into network input blob inference::ResizeGPU( image_src_, input_blob, static_cast<int>(crop_width_), 0, static_cast<float>(image_mean_[0]), static_cast<float>(image_mean_[1]), static_cast<float>(image_mean_[2]), false, static_cast<float>(1.0)); ADEBUG << "resize gpu finish."; cudaDeviceSynchronize(); //调用网络进行预测 cnnadapter_lane_->Infer(); ADEBUG << "infer finish."; auto elapsed_1 = std::chrono::high_resolution_clock::now() - start; int64_t microseconds_1 = std::chrono::duration_cast<std::chrono::microseconds>(elapsed_1).count(); time_1 += microseconds_1; // convert network output to color map const auto seg_blob = cnnadapter_lane_->get_blob(net_outputs_[0]); ADEBUG << "seg_blob: " << seg_blob->shape_string(); //masks的形状为元素[480, 640]矩阵,大小为13的矢量 std::vector<cv::Mat> masks; for (int i = 0; i < num_lanes_; ++i) { cv::Mat tmp(lane_output_height_, lane_output_width_, CV_32FC1); //从SCNN网络输出复制当前车道的数据到tmp里面 memcpy(tmp.data, seg_blob->cpu_data() + lane_output_width_ * lane_output_height_ * i, lane_output_width_ * lane_output_height_ * sizeof(float)); // cv::resize(tmp // , tmp, cv::Size(lane_output_width_, lane_output_height_), 0, // 0); masks.push_back(tmp); } std::vector<int> cnt_pixels(13, 0); //合并车道线概论信息到mast_color图片上,图片的展现如下图的“DarkSCNN输出的车道线Mask图” cv::Mat mask_color(lane_output_height_, lane_output_width_, CV_32FC1); mask_color.setTo(cv::Scalar(0)); for (int c = 0; c < num_lanes_; ++c) { for (int h = 0; h < masks[c].rows; ++h) { for (int w = 0; w < masks[c].cols; ++w) { if (masks[c].at<float>(h, w) >= confidence_threshold_lane_) { mask_color.at<float>(h, w) = static_cast<float>(c); cnt_pixels[c]++; } } } } memcpy(lane_blob_->mutable_cpu_data(), reinterpret_cast<float *>(mask_color.data), lane_output_width_ * lane_output_height_ * sizeof(float)); // Don't use this way to copy data, it will modify data // lane_blob_->set_cpu_data((float*)mask_color.data); frame->lane_detected_blob = lane_blob_; cv::Mat input_map(480, 640, CV_32FC1); memcpy(input_map.data, input_blob->cpu_data(), 640 * 480 * sizeof(float)); cv::imwrite("/apollo/input.jpg", input_map); //获取网络输出的消失点 // retrieve vanishing point network output if (net_outputs_.size() > 1) { const auto vpt_blob = cnnadapter_lane_->get_blob(net_outputs_[1]); ADEBUG << "vpt_blob: " << vpt_blob->shape_string(); std::vector<float> v_point(2, 0); std::copy(vpt_blob->cpu_data(), vpt_blob->cpu_data() + 2, v_point.begin()); // compute coordinate in net input image v_point[0] = v_point[0] * vpt_std_[0] + vpt_mean_[0] + (static_cast<float>(blob_width) / 2); v_point[1] = v_point[1] * vpt_std_[1] + vpt_mean_[1] + (static_cast<float>(blob_height) / 2); // compute coordinate in original image v_point[0] = v_point[0] / static_cast<float>(blob_width) * static_cast<float>(crop_width_) + static_cast<float>(input_offset_x_); v_point[1] = v_point[1] / static_cast<float>(blob_height) * static_cast<float>(crop_height_) + static_cast<float>(input_offset_y_); ADEBUG << "vanishing point: " << v_point[0] << " " << v_point[1]; if (v_point[0] > 0 && v_point[0] < static_cast<float>(input_width_) && v_point[1] > 0 && v_point[0] < static_cast<float>(input_height_)) { frame->pred_vpt = v_point; } } auto elapsed_2 = std::chrono::high_resolution_clock::now() - start; int64_t microseconds_2 = std::chrono::duration_cast<std::chrono::microseconds>(elapsed_2).count(); time_2 += microseconds_2 - microseconds_1; time_num += 1; ADEBUG << "Avg detection infer time: " << time_1 / time_num << " Avg detection merge output time: " << time_2 / time_num; ADEBUG << "Lane detection done!"; return true; } |

2D后续处理

获取车道线2D对象frame->lane_objects

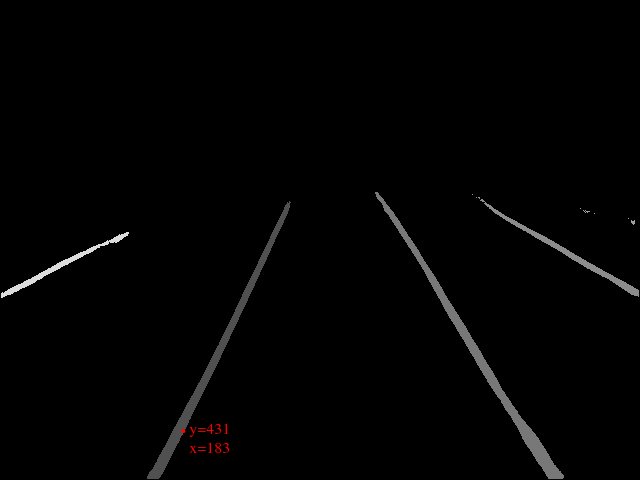

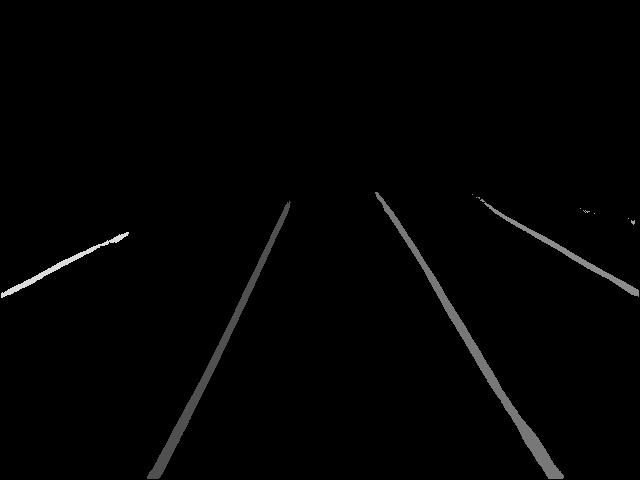

2D点示例

2D点示例

bool DarkSCNNLanePostprocessor::Process2D( const LanePostprocessorOptions& options, CameraFrame* frame) { ADEBUG << "Begin to Process2D."; frame->lane_objects.clear(); auto start = std::chrono::high_resolution_clock::now(); //复制前面输出的车道线信息,即“DarkSCNN输出的车道线Mask图” cv::Mat lane_map(lane_map_height_, lane_map_width_, CV_32FC1); memcpy(lane_map.data, frame->lane_detected_blob->cpu_data(), lane_map_width_ * lane_map_height_ * sizeof(float)); // if (options.use_lane_history && // (!use_history_ || time_stamp_ > options.timestamp)) { // InitLaneHistory(); // } // 1. Sample points on lane_map and project them onto world coordinate // TODO(techoe): Should be fixed int y = static_cast<int>(lane_map.rows * 0.9 - 1); // TODO(techoe): Should be fixed int step_y = (y - 40) * (y - 40) / 6400 + 1; xy_points.clear(); xy_points.resize(lane_type_num_); uv_points.clear(); uv_points.resize(lane_type_num_); //按行从下到上扫描,即从进到远。 while (y > 0) { //按列x循环 for (int x = 1; x < lane_map.cols - 1; ++x) { int value = static_cast<int>(round(lane_map.at<float>(y, x))); // lane on left //1到4类型的车道线在车的左边 //车道线和对应数字的含义如下: // {base::LaneLinePositionType::UNKNOWN, 0},未知车道线 // {base::LaneLinePositionType::FOURTH_LEFT, 1},左边第三车道左边线 // {base::LaneLinePositionType::THIRD_LEFT, 2},左边第二车道左边线 // {base::LaneLinePositionType::ADJACENT_LEFT, 3},邻接车道左边线 // {base::LaneLinePositionType::EGO_LEFT, 4},当前车道左边线 // {base::LaneLinePositionType::EGO_CENTER, 5},当前车道中心线 // {base::LaneLinePositionType::EGO_RIGHT, 6},当前车道右边线 // {base::LaneLinePositionType::ADJACENT_RIGHT, 7}, // {base::LaneLinePositionType::THIRD_RIGHT, 8}, // {base::LaneLinePositionType::FOURTH_RIGHT, 9}, // {base::LaneLinePositionType::OTHER, 10}, // {base::LaneLinePositionType::CURB_LEFT, 11},左边道沿线 // {base::LaneLinePositionType::CURB_RIGHT, 12}};右边道沿线 if ((value > 0 && value < 5) || value == 11) { //因为车道线在左边,所以车道线的右边界离车最近,即(x+1)就不应该是车道线了,此时x+1=0 // right edge (inner) of the lane if (value != static_cast<int>(round(lane_map.at<float>(y, x + 1)))) { //如图“2D点示例”里面的一个示例点, lane_map(x,y)=183,431,lane_map大小为640x480 //img_point在原始图片(1920x1080)里面,img_point(x,y)=549,1001 //经过转换,xy_p = trans_mat_ * img_point = (1.35401917, 0.260623962, 0.166781813) //xy_point为地面为平面,相机映射到地面上为原点的点,此时为xy_point =(8.11850643,1.56266415) //uv_point为相机拍摄的图像上的位置uv_point = (549,1001) Eigen::Matrix<float, 3, 1> img_point( static_cast<float>(x * roi_width_ / lane_map.cols), static_cast<float>(y * roi_height_ / lane_map.rows + roi_start_), 1.0); Eigen::Matrix<float, 3, 1> xy_p; xy_p = trans_mat_ * img_point; Eigen::Matrix<float, 2, 1> xy_point; Eigen::Matrix<float, 2, 1> uv_point; if (std::fabs(xy_p(2)) < 1e-6) continue; xy_point << xy_p(0) / xy_p(2), xy_p(1) / xy_p(2); // Filter out lane line points if (xy_point(0) < 0.0 || // This condition is only for front camera xy_point(0) > max_longitudinal_distance_ || std::abs(xy_point(1)) > 30.0) { continue; } uv_point << static_cast<float>(x * roi_width_ / lane_map.cols), static_cast<float>(y * roi_height_ / lane_map.rows + roi_start_); if (xy_points[value].size() < minNumPoints_ || xy_point(0) < 50.0f || std::fabs(xy_point(1) - xy_points[value].back()(1)) < 1.0f) { xy_points[value].push_back(xy_point); uv_points[value].push_back(uv_point); } } } else if (value >= 5 && value < lane_type_num_) { // Left edge (inner) of the lane if (value != static_cast<int>(round(lane_map.at<float>(y, x - 1)))) { Eigen::Matrix<float, 3, 1> img_point( static_cast<float>(x * roi_width_ / lane_map.cols), static_cast<float>(y * roi_height_ / lane_map.rows + roi_start_), 1.0); Eigen::Matrix<float, 3, 1> xy_p; xy_p = trans_mat_ * img_point; Eigen::Matrix<float, 2, 1> xy_point; Eigen::Matrix<float, 2, 1> uv_point; if (std::fabs(xy_p(2)) < 1e-6) continue; xy_point << xy_p(0) / xy_p(2), xy_p(1) / xy_p(2); // Filter out lane line points if (xy_point(0) < 0.0 || // This condition is only for front camera xy_point(0) > max_longitudinal_distance_ || std::abs(xy_point(1)) > 30.0) { continue; } uv_point << static_cast<float>(x * roi_width_ / lane_map.cols), static_cast<float>(y * roi_height_ / lane_map.rows + roi_start_); if (xy_points[value].size() < minNumPoints_ || xy_point(0) < 50.0f || std::fabs(xy_point(1) - xy_points[value].back()(1)) < 1.0f) { xy_points[value].push_back(xy_point); uv_points[value].push_back(uv_point); } } else if (value >= lane_type_num_) { AWARN << "Lane line value shouldn't be equal or more than: " << lane_type_num_; } } } step_y = (y - 45) * (y - 45) / 6400 + 1; y -= step_y; } auto elapsed_1 = std::chrono::high_resolution_clock::now() - start; int64_t microseconds_1 = std::chrono::duration_cast<std::chrono::microseconds>(elapsed_1).count(); time_1 += microseconds_1; // 2. Remove outliers and Do a ransac fitting std::vector<Eigen::Matrix<float, 4, 1>> coeffs; std::vector<Eigen::Matrix<float, 4, 1>> img_coeffs; std::vector<Eigen::Matrix<float, 2, 1>> selected_xy_points; coeffs.resize(lane_type_num_); img_coeffs.resize(lane_type_num_); for (int i = 1; i < lane_type_num_; ++i) { coeffs[i] << 0, 0, 0, 0; if (xy_points[i].size() < minNumPoints_) continue; Eigen::Matrix<float, 4, 1> coeff; // Solve linear system to estimate polynomial coefficients if (RansacFitting<float>(xy_points[i], &selected_xy_points, &coeff, 200, static_cast<int>(minNumPoints_), 0.1f)) { coeffs[i] = coeff; xy_points[i].clear(); xy_points[i] = selected_xy_points; } else { xy_points[i].clear(); } } auto elapsed_2 = std::chrono::high_resolution_clock::now() - start; int64_t microseconds_2 = std::chrono::duration_cast<std::chrono::microseconds>(elapsed_2).count(); time_2 += microseconds_2 - microseconds_1; //生成lane_objects对象 // 3. Write values into lane_objects std::vector<float> c0s(lane_type_num_, 0); for (int i = 1; i < lane_type_num_; ++i) { if (xy_points[i].size() < minNumPoints_) continue; c0s[i] = GetPolyValue( static_cast<float>(coeffs[i](3)), static_cast<float>(coeffs[i](2)), static_cast<float>(coeffs[i](1)), static_cast<float>(coeffs[i](0)), static_cast<float>(3.0)); } // [1] Determine lane spatial tag in special cases if (xy_points[4].size() < minNumPoints_ && xy_points[5].size() >= minNumPoints_) { std::swap(xy_points[4], xy_points[5]); std::swap(uv_points[4], uv_points[5]); std::swap(coeffs[4], coeffs[5]); std::swap(c0s[4], c0s[5]); } else if (xy_points[6].size() < minNumPoints_ && xy_points[5].size() >= minNumPoints_) { std::swap(xy_points[6], xy_points[5]); std::swap(uv_points[6], uv_points[5]); std::swap(coeffs[6], coeffs[5]); std::swap(c0s[6], c0s[5]); } if (xy_points[4].size() < minNumPoints_ && xy_points[11].size() >= minNumPoints_) { // Use left lane boundary as the right most missing left lane, bool use_boundary = true; for (int k = 3; k >= 1; --k) { if (xy_points[k].size() >= minNumPoints_) { use_boundary = false; break; } } if (use_boundary) { std::swap(xy_points[4], xy_points[11]); std::swap(uv_points[4], uv_points[11]); std::swap(coeffs[4], coeffs[11]); std::swap(c0s[4], c0s[11]); } } if (xy_points[6].size() < minNumPoints_ && xy_points[12].size() >= minNumPoints_) { // Use right lane boundary as the left most missing right lane, bool use_boundary = true; for (int k = 7; k <= 9; ++k) { if (xy_points[k].size() >= minNumPoints_) { use_boundary = false; break; } } if (use_boundary) { std::swap(xy_points[6], xy_points[12]); std::swap(uv_points[6], uv_points[12]); std::swap(coeffs[6], coeffs[12]); std::swap(c0s[6], c0s[12]); } } for (int i = 1; i < lane_type_num_; ++i) { base::LaneLine cur_object; if (xy_points[i].size() < minNumPoints_) { continue; } // [2] Set spatial label cur_object.pos_type = spatialLUT[i]; // [3] Determine which lines are valid according to the y value at x = 3 if ((i < 5 && c0s[i] < c0s[i + 1]) || (i > 5 && i < 10 && c0s[i] > c0s[i - 1])) { continue; } if (i == 11 || i == 12) { std::sort(c0s.begin(), c0s.begin() + 10); if ((c0s[i] > c0s[0] && i == 12) || (c0s[i] < c0s[9] && i == 11)) { continue; } } // [4] Write values cur_object.curve_car_coord.x_start = static_cast<float>(xy_points[i].front()(0)); cur_object.curve_car_coord.x_end = static_cast<float>(xy_points[i].back()(0)); cur_object.curve_car_coord.a = static_cast<float>(coeffs[i](3)); cur_object.curve_car_coord.b = static_cast<float>(coeffs[i](2)); cur_object.curve_car_coord.c = static_cast<float>(coeffs[i](1)); cur_object.curve_car_coord.d = static_cast<float>(coeffs[i](0)); // if (cur_object.curve_car_coord.x_end - // cur_object.curve_car_coord.x_start < 5) continue; // cur_object.order = 2; cur_object.curve_car_coord_point_set.clear(); for (size_t j = 0; j < xy_points[i].size(); ++j) { base::Point2DF p_j; p_j.x = static_cast<float>(xy_points[i][j](0)); p_j.y = static_cast<float>(xy_points[i][j](1)); cur_object.curve_car_coord_point_set.push_back(p_j); } cur_object.curve_image_point_set.clear(); for (size_t j = 0; j < uv_points[i].size(); ++j) { base::Point2DF p_j; p_j.x = static_cast<float>(uv_points[i][j](0)); p_j.y = static_cast<float>(uv_points[i][j](1)); cur_object.curve_image_point_set.push_back(p_j); } // cur_object.confidence.push_back(1); cur_object.confidence = 1.0f; frame->lane_objects.push_back(cur_object); } // Special case riding on a lane: // 0: no center lane, 1: center lane as left, 2: center lane as right int has_center_ = 0; for (auto lane_ : frame->lane_objects) { if (lane_.pos_type == base::LaneLinePositionType::EGO_CENTER) { if (lane_.curve_car_coord.d >= 0) { has_center_ = 1; } else if (lane_.curve_car_coord.d < 0) { has_center_ = 2; } break; } } // Change labels for all lanes from one side if (has_center_ == 1) { for (auto& lane_ : frame->lane_objects) { int spatial_id = spatialLUTind[lane_.pos_type]; if (spatial_id >= 1 && spatial_id <= 5) { lane_.pos_type = spatialLUT[spatial_id - 1]; } } } else if (has_center_ == 2) { for (auto& lane_ : frame->lane_objects) { int spatial_id = spatialLUTind[lane_.pos_type]; if (spatial_id >= 5 && spatial_id <= 9) { lane_.pos_type = spatialLUT[spatial_id + 1]; } } } auto elapsed = std::chrono::high_resolution_clock::now() - start; int64_t microseconds = std::chrono::duration_cast<std::chrono::microseconds>(elapsed).count(); // AINFO << "Time for writing: " << microseconds - microseconds_2 << " us"; time_3 += microseconds - microseconds_2; ++time_num; ADEBUG << "frame->lane_objects.size(): " << frame->lane_objects.size(); ADEBUG << "Avg sampling time: " << time_1 / time_num << " Avg fitting time: " << time_2 / time_num << " Avg writing time: " << time_3 / time_num; ADEBUG << "darkSCNN lane_postprocess done!"; return true; } |

本作品采用知识共享署名 4.0 国际许可协议进行许可。

摄像头输出图片

摄像头输出图片 DarkSCNN输出的车道线Mask图

DarkSCNN输出的车道线Mask图 融合到原图上的车道线

融合到原图上的车道线 input.jpg SCNN网络真正输入

input.jpg SCNN网络真正输入