The SparkPlanner is the bridge from the Logical Plan to the Spark Plan: it converts a Logical Plan into a Spark Plan (the physical plan).

SparkPlanner extends SparkStrategies (code in SparkStrategies.scala).

It has several strategies:

```scala
def strategies: Seq[Strategy] =
  extraStrategies ++ (
    FileSourceStrategy ::
    DataSourceStrategy ::
    DDLStrategy ::
    SpecialLimits ::
    Aggregation ::
    JoinSelection ::
    InMemoryScans ::
    BasicOperators :: Nil)
```

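To make the role of this ordered strategy list concrete, here is a toy, self-contained model of strategy-based planning. All types (Scan, Filter, Limit, ScanExec, FilterExec, CollectLimitExec, PlanLater) and the ToyPlanner object are hypothetical stand-ins, not Spark classes. The loop only approximates what Spark's planner does: it tries each strategy in order, takes the first candidate, and recursively plans the placeholders that a strategy leaves behind with planLater.

```scala
// Hypothetical toy logical operators (not Spark's LogicalPlan classes).
sealed trait Logical
case class Scan(table: String) extends Logical
case class Filter(cond: String, child: Logical) extends Logical
case class Limit(n: Int, child: Logical) extends Logical

// Hypothetical toy physical operators (not Spark's SparkPlan classes).
sealed trait Physical
case class ScanExec(table: String) extends Physical
case class FilterExec(cond: String, child: Physical) extends Physical
case class CollectLimitExec(n: Int, child: Physical) extends Physical
// Placeholder for a child that still has to be planned (plays the role of planLater).
case class PlanLater(plan: Logical) extends Physical

object ToyPlanner {
  // A strategy returns zero or more candidate physical plans.
  type Strategy = Logical => Seq[Physical]

  val limits: Strategy = {
    case Limit(n, child) => Seq(CollectLimitExec(n, PlanLater(child)))
    case _               => Nil
  }

  val basicOperators: Strategy = {
    case Scan(t)          => Seq(ScanExec(t))
    case Filter(c, child) => Seq(FilterExec(c, PlanLater(child)))
    case _                => Nil
  }

  // Order matters: earlier strategies get the first chance to match.
  val strategies: Seq[Strategy] = Seq(limits, basicOperators)

  def plan(logical: Logical): Physical = {
    val candidate = strategies.iterator
      .map(strategy => strategy(logical))
      .find(_.nonEmpty)
      .map(_.head)
      .getOrElse(throw new IllegalStateException(s"No strategy can plan $logical"))
    replacePlaceholders(candidate)
  }

  // Walk the candidate and plan every PlanLater placeholder recursively.
  private def replacePlaceholders(p: Physical): Physical = p match {
    case PlanLater(child)           => plan(child)
    case FilterExec(c, child)       => FilterExec(c, replacePlaceholders(child))
    case CollectLimitExec(n, child) => CollectLimitExec(n, replacePlaceholders(child))
    case other                      => other
  }
}
```

For example, planning `Limit(10, Filter("x > 1", Scan("t")))` yields `CollectLimitExec(10, FilterExec("x > 1", ScanExec("t")))`: the limit strategy handles the root and leaves its child to basicOperators via the placeholder.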

Let me introduce a few of them here:

SpecialLimits

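This strategy plans special cases of Limit. A logical Limit on top of a global Sort (optionally with a Project in between) is converted to the physical TakeOrderedAndProjectExec. When the Limit is the root of the query (wrapped in ReturnAnswer) and has no Sort below it, it becomes CollectLimitExec instead.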

```scala
/**
 * Plans special cases of limit operators.
 */
object SpecialLimits extends Strategy {
  override def apply(plan: LogicalPlan): Seq[SparkPlan] = plan match {
    // The Limit is the root of the query.
    case logical.ReturnAnswer(rootPlan) => rootPlan match {
      // ORDER BY ... LIMIT n (global sort): take the top n rows without a full sort.
      case logical.Limit(IntegerLiteral(limit), logical.Sort(order, true, child)) =>
        execution.TakeOrderedAndProjectExec(limit, order, child.output, planLater(child)) :: Nil
      // Same, with a projection between the Limit and the Sort.
      case logical.Limit(
          IntegerLiteral(limit),
          logical.Project(projectList, logical.Sort(order, true, child))) =>
        execution.TakeOrderedAndProjectExec(
          limit, order, projectList, planLater(child)) :: Nil
      // Plain LIMIT n at the root: collect the first n rows to the driver.
      case logical.Limit(IntegerLiteral(limit), child) =>
        execution.CollectLimitExec(limit, planLater(child)) :: Nil
      case other => planLater(other) :: Nil
    }
    // Sort + Limit below the root are still planned as TakeOrderedAndProjectExec.
    case logical.Limit(IntegerLiteral(limit), logical.Sort(order, true, child)) =>
      execution.TakeOrderedAndProjectExec(limit, order, child.output, planLater(child)) :: Nil
    case logical.Limit(
        IntegerLiteral(limit), logical.Project(projectList, logical.Sort(order, true, child))) =>
      execution.TakeOrderedAndProjectExec(
        limit, order, projectList, planLater(child)) :: Nil
    // Not a special limit: let the other strategies handle it.
    case _ => Nil
  }
}
```

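The reason Sort + Limit gets its own operator is that the top `limit` rows can be found without globally sorting all the data. The sketch below shows that idea on plain Scala collections: keep at most `limit` rows per partition in a bounded max-heap, then merge the small per-partition results and take the top `limit` again. The TopK object and its methods are hypothetical; this is an illustration of the technique, not the code of TakeOrderedAndProjectExec.

```scala
import scala.collection.mutable

object TopK {
  // Return the `limit` smallest rows (by `ord`) in ascending order,
  // keeping at most `limit` rows in memory at any time.
  def takeOrdered[T](rows: Iterator[T], limit: Int)(implicit ord: Ordering[T]): List[T] = {
    // Max-heap on `ord`: the head is the largest row kept so far.
    val heap = mutable.PriorityQueue.empty[T](ord)
    rows.foreach { row =>
      if (heap.size < limit) heap.enqueue(row)
      else if (limit > 0 && ord.lt(row, heap.head)) {
        heap.dequeue() // drop the current largest
        heap.enqueue(row)
      }
    }
    heap.toList.sorted(ord)
  }

  // Per-partition top-k, then a final top-k over the merged candidates,
  // then the projection applied only to the surviving rows.
  def takeOrderedAndProject[T, R](partitions: Seq[Seq[T]], limit: Int)(project: T => R)(
      implicit ord: Ordering[T]): List[R] = {
    val candidates = partitions.flatMap(p => takeOrdered(p.iterator, limit))
    takeOrdered(candidates.iterator, limit).map(project)
  }
}
```

With partitions `Seq(Seq(5, 1, 9), Seq(3, 8), Seq(7, 2, 6))`, a limit of 3 and `x => x * 10` as the projection, each partition contributes at most three candidates and the result is `List(10, 20, 30)`.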

Aggregation

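A logical Aggregate is converted to one of the following Spark Plans, depending on the aggregate functions it contains:

HashAggregateExec: used when every field of the aggregation buffer has a type that UnsafeRow can update in place (for example numeric types).

ObjectHashAggregateExec: used otherwise when at least one aggregate function is a TypedImperativeAggregate (its buffer is an arbitrary JVM object) and spark.sql.execution.useObjectHashAggregateExec is enabled.

SortAggregateExec: the fallback when neither of the above applies.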

```scala
/**
 * Used to plan the aggregate operator for expressions based on the AggregateFunction2 interface.
 */
object Aggregation extends Strategy {
  def apply(plan: LogicalPlan): Seq[SparkPlan] = plan match {
    case PhysicalAggregation(
        groupingExpressions, aggregateExpressions, resultExpressions, child) =>
      val (functionsWithDistinct, functionsWithoutDistinct) =
        aggregateExpressions.partition(_.isDistinct)
      // ......
```

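The choice among SortAggregateExec, HashAggregateExec and ObjectHashAggregateExec can be sketched as a small decision function. This is a simplified, hypothetical model, not Spark's code: the three boolean inputs stand in for checks on the aggregation-buffer types, on whether some function is a TypedImperativeAggregate, and on the spark.sql.execution.useObjectHashAggregateExec setting. AggExpr is a made-up stand-in for AggregateExpression, used only to mirror the `partition(_.isDistinct)` call in the excerpt.

```scala
// Hypothetical stand-ins for the three physical aggregate operators.
sealed trait AggOperator
case object HashAggregate extends AggOperator
case object ObjectHashAggregate extends AggOperator
case object SortAggregate extends AggOperator

// Hypothetical stand-in for an aggregate expression.
case class AggExpr(name: String, isDistinct: Boolean)

object AggChoice {
  def choose(
      bufferTypesMutable: Boolean,    // all buffer fields can be updated in place
      hasTypedImperativeAgg: Boolean, // some function keeps a JVM object as its buffer
      objectHashEnabled: Boolean      // stand-in for the useObjectHashAggregateExec setting
  ): AggOperator =
    if (bufferTypesMutable) HashAggregate
    else if (objectHashEnabled && hasTypedImperativeAgg) ObjectHashAggregate
    else SortAggregate

  // Mirrors `aggregateExpressions.partition(_.isDistinct)`:
  // (functions with DISTINCT, functions without DISTINCT).
  def splitDistinct(exprs: Seq[AggExpr]): (Seq[AggExpr], Seq[AggExpr]) =
    exprs.partition(_.isDistinct)
}
```

The order of the checks encodes the preference: the hash-based operator on UnsafeRow buffers first, the object-based hash operator second, and the sort-based operator as the general fallback.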

Take the SQL query “SELECT x.str, COUNT(*) FROM df x JOIN df y ON x.str = y.str GROUP BY x.str” as an example.

Here, “COUNT(*)” is an aggregate expression.
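For a COUNT(*) grouped by x.str, Spark typically plans the aggregate in two phases: a partial aggregate that counts rows per key inside each partition, a shuffle on the grouping key, and a final aggregate that sums the partial counts. In `explain()` output this usually appears as a HashAggregate with `partial_count` below an Exchange and a HashAggregate with `count` above it. The sketch below simulates that idea on plain Scala collections. The TwoPhaseCount object and its methods are hypothetical, and the join in the query is left out.

```scala
object TwoPhaseCount {
  // Phase 1 (partial): each partition counts its own rows per key.
  def partialCount(partition: Seq[String]): Map[String, Long] =
    partition.groupBy(identity).map { case (key, rows) => key -> rows.size.toLong }

  // Shuffle + phase 2 (final): bring partial results with the same key
  // together and sum them.
  def finalMerge(partials: Seq[Map[String, Long]]): Map[String, Long] =
    partials.flatten.groupBy(_._1).map { case (key, kvs) => key -> kvs.map(_._2).sum }

  def countByKey(partitions: Seq[Seq[String]]): Map[String, Long] =
    finalMerge(partitions.map(partialCount))
}
```

Because each partition sends at most one partial count per key, the shuffle moves far fewer records than the raw rows.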

This work is licensed under a Creative Commons Attribution 4.0 International License.

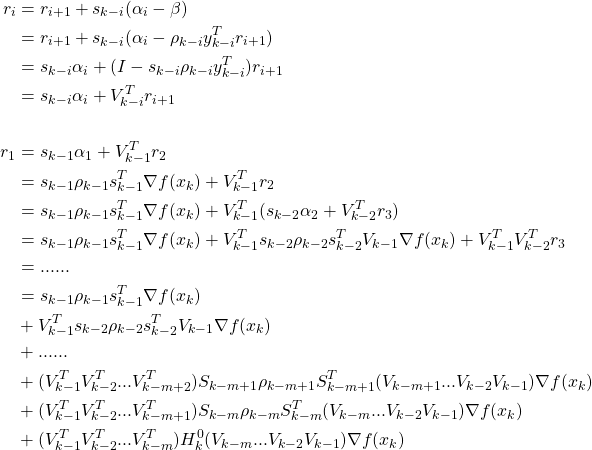

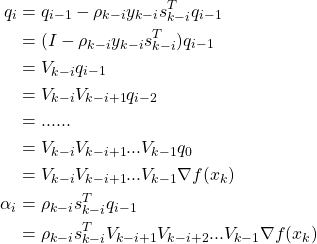

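At the k-th iteration, the quadratic model of the objective is:

$$m_k(p) = f_k + \nabla f_k^T p + \frac{1}{2} p^T B_k p$$

where $B_k$ is an $n \times n$ symmetric positive definite matrix that can be updated at every iteration. Note that at $p = 0$ the model's value and gradient, $m_k(0) = f_k$ and $\nabla m_k(0) = \nabla f_k$, are the same as those of the original function. Setting the derivative of the model to zero gives the search direction:

$$p_k = -B_k^{-1} \nabla f_k$$

The (k+1)-th iterate is (where the step length $\alpha_k$ can be computed with the strong Wolfe conditions):

$$x_{k+1} = x_k + \alpha_k p_k$$

Instead of computing $B_k$ from scratch at every iteration (too expensive), Davidon proposed computing it from the most recent iteration steps. Having obtained the new iterate $x_{k+1}$, construct the quadratic model at iteration k+1:

$$m_{k+1}(p) = f_{k+1} + \nabla f_{k+1}^T p + \frac{1}{2} p^T B_{k+1} p$$

How should $B_{k+1}$ be chosen? The gradient of $m_{k+1}$ should agree with the gradient of the objective $f$ at both $x_{k+1}$ and $x_k$. Differentiating and setting $p = -\alpha_k p_k$, i.e. evaluating at $x_k$, gives the following (at $x_{k+1}$, where $p = 0$, the gradients agree automatically):

$$\nabla m_{k+1}(-\alpha_k p_k) = \nabla f_{k+1} - \alpha_k B_{k+1} p_k = \nabla f_k$$

Rearranging:

$$B_{k+1} \alpha_k p_k = \nabla f_{k+1} - \nabla f_k$$

Let $s_k = x_{k+1} - x_k = \alpha_k p_k$ and $y_k = \nabla f_{k+1} - \nabla f_k$. The equation above becomes the secant equation:

$$B_{k+1} s_k = y_k$$

Letting $H_{k+1} = B_{k+1}^{-1}$ gives the equivalent form:

$$H_{k+1} y_k = s_k$$

To update these matrices cheaply, let:

$$B_{k+1} = B_k + E_k, \qquad H_{k+1} = H_k + D_k$$

where $E_k$ and $D_k$ are rank-1 or rank-2 matrices. Take $D_k$ to be rank 2:

$$D_k = a\,u u^T + b\,v v^T$$

so that

$$H_{k+1} = H_k + a\,u u^T + b\,v v^T$$

Substituting this into $H_{k+1} y_k = s_k$ gives:

$$H_k y_k + a\,(u^T y_k)\,u + b\,(v^T y_k)\,v = s_k$$

The vectors $u$ and $v$ are not unique. Let them be parallel to $H_k y_k$ and $s_k$ respectively, i.e. $u = H_k y_k$ and $v = s_k$. Substituting into the equation above (using the symmetry of $H_k$):

$$H_k y_k + a\,(y_k^T H_k y_k)\,H_k y_k + b\,(s_k^T y_k)\,s_k = s_k$$

which holds when $a = -\frac{1}{y_k^T H_k y_k}$ and $b = \frac{1}{s_k^T y_k}$. Substituting $a$, $b$, $u$ and $v$ back into the update of $H_{k+1}$ gives the DFP (Davidon–Fletcher–Powell) formula:

$$H_{k+1} = H_k - \frac{H_k y_k y_k^T H_k}{y_k^T H_k y_k} + \frac{s_k s_k^T}{s_k^T y_k}$$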
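As a quick numerical check of the rank-2 update derived above, the DFP formula $H_{k+1} = H_k - \frac{H_k y_k y_k^T H_k}{y_k^T H_k y_k} + \frac{s_k s_k^T}{s_k^T y_k}$, the sketch below applies one update with plain Scala arrays so you can verify that the result satisfies $H_{k+1} y_k = s_k$. The Dfp object and its helpers are hypothetical names; the sketch assumes $H_k$ is symmetric and $s_k^T y_k > 0$, and has no numerical safeguards.

```scala
object Dfp {
  type Vec = Array[Double]
  type Mat = Array[Array[Double]]

  def dot(a: Vec, b: Vec): Double = a.indices.map(i => a(i) * b(i)).sum
  def matVec(m: Mat, v: Vec): Vec = m.map(row => dot(row, v))
  def outer(a: Vec, b: Vec): Mat = a.map(ai => b.map(bj => ai * bj))

  // One DFP update of the inverse-Hessian approximation H (assumed symmetric).
  def update(h: Mat, s: Vec, y: Vec): Mat = {
    val hy  = matVec(h, y)   // u = H_k y_k
    val yHy = dot(y, hy)     // y_k^T H_k y_k
    val sy  = dot(s, y)      // s_k^T y_k, assumed > 0
    val a   = outer(hy, hy)  // H_k y_k y_k^T H_k (uses the symmetry of H_k)
    val b   = outer(s, s)    // s_k s_k^T
    Array.tabulate(h.length, h.length) { (i, j) =>
      h(i)(j) - a(i)(j) / yHy + b(i)(j) / sy
    }
  }
}
```

Starting from $H_k = I$ with $s_k = (1, 2)$ and $y_k = (2, 1)$, the updated matrix maps $y_k$ back to $s_k$ (up to rounding), which is exactly the secant condition the coefficients $a$ and $b$ were chosen to satisfy.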

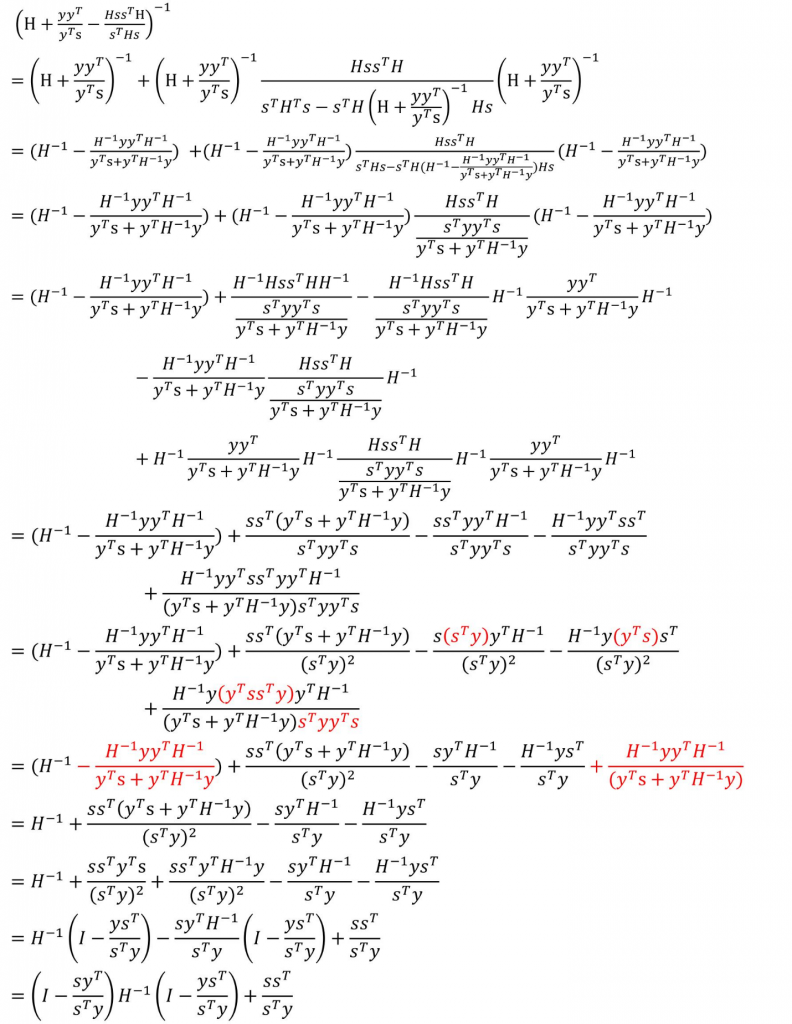

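Applying the same derivation to $B_{k+1}$ instead (here $B$ plays the role of $H$ in the equations, with $s_k$ and $y_k$ swapping roles) gives (see the reference document):

$$B_{k+1} = B_k - \frac{B_k s_k s_k^T B_k}{s_k^T B_k s_k} + \frac{y_k y_k^T}{y_k^T s_k}$$

Inverting this update with the Sherman–Morrison–Woodbury formula, with $H_k = B_k^{-1}$, gives the iteration equation of the BFGS method:

$$H_{k+1} = \left(I - \frac{s_k y_k^T}{y_k^T s_k}\right) H_k \left(I - \frac{y_k s_k^T}{y_k^T s_k}\right) + \frac{s_k s_k^T}{y_k^T s_k}$$

Letting $\rho_k = \frac{1}{y_k^T s_k}$, the BFGS equation can be written as:

$$H_{k+1} = (I - \rho_k s_k y_k^T) H_k (I - \rho_k y_k s_k^T) + \rho_k s_k s_k^T$$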

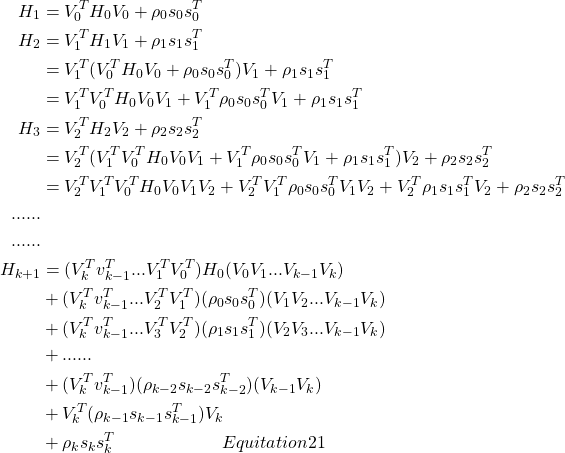

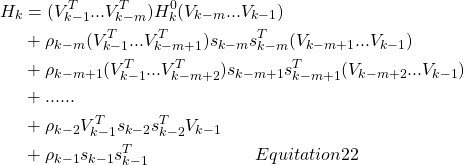
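The BFGS update in its $\rho_k$ form, $H_{k+1} = (I - \rho_k s_k y_k^T) H_k (I - \rho_k y_k s_k^T) + \rho_k s_k s_k^T$ with $\rho_k = 1/(y_k^T s_k)$, can be sketched directly with dense arrays. Writing $V_k = I - \rho_k y_k s_k^T$, the update is $V_k^T H_k V_k + \rho_k s_k s_k^T$; since $V_k y_k = 0$, the result satisfies the secant equation $H_{k+1} y_k = s_k$. The Bfgs object and its helpers are hypothetical; the sketch assumes $y_k^T s_k > 0$ (which a Wolfe line search guarantees) and has no numerical safeguards.

```scala
object Bfgs {
  type Vec = Array[Double]
  type Mat = Array[Array[Double]]

  def dot(a: Vec, b: Vec): Double = a.indices.map(i => a(i) * b(i)).sum
  def matVec(m: Mat, v: Vec): Vec = m.map(row => dot(row, v))
  def matMul(a: Mat, b: Mat): Mat =
    Array.tabulate(a.length, b(0).length) { (i, j) =>
      a(i).indices.map(k => a(i)(k) * b(k)(j)).sum
    }

  // H_{k+1} = V^T H V + rho s s^T, with V = I - rho y s^T.
  def update(h: Mat, s: Vec, y: Vec): Mat = {
    val n   = s.length
    val rho = 1.0 / dot(y, s) // assumes y^T s > 0
    val v   = Array.tabulate(n, n)((i, j) => (if (i == j) 1.0 else 0.0) - rho * y(i) * s(j))
    val vt  = Array.tabulate(n, n)((i, j) => v(j)(i)) // V^T = I - rho s y^T
    val core = matMul(matMul(vt, h), v)
    Array.tabulate(n, n)((i, j) => core(i)(j) + rho * s(i) * s(j))
  }
}
```

With $H_k = B_k = I$, $s_k = (1, 2)$ and $y_k = (2, 1)$, the direct update of $B$ gives $B_{k+1} = \begin{pmatrix} 1.8 & 0.1 \\ 0.1 & 0.45 \end{pmatrix}$, and multiplying it by the $H_{k+1}$ from this sketch gives the identity up to rounding, a small check that the two forms are inverses of each other.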

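Computing $H_k$ this way requires all $k$ pairs $\{s_i, y_i\}$, which is a very large amount of data. The L-BFGS algorithm therefore uses only the most recent $m$ pairs, from which an approximate formula can be constructed. Let $V_k = I - \rho_k y_k s_k^T$, so that the BFGS update reads $H_{k+1} = V_k^T H_k V_k + \rho_k s_k s_k^T$; expanding it recursively gives: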

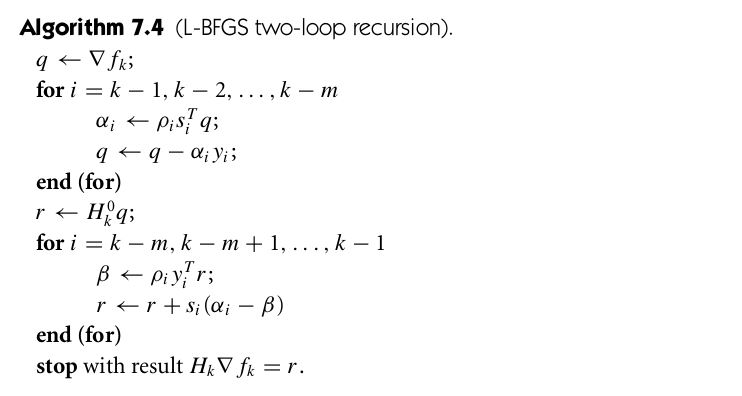

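Here $m$ counts back from the current iteration to the $m$-th most recent history record, because L-BFGS keeps only $m$ history records:

$$
\begin{aligned}
H_k = {} & (V_{k-1}^T \cdots V_{k-m}^T)\, H_k^0\, (V_{k-m} \cdots V_{k-1}) \\
& + \rho_{k-m} (V_{k-1}^T \cdots V_{k-m+1}^T)\, s_{k-m} s_{k-m}^T\, (V_{k-m+1} \cdots V_{k-1}) \\
& + \rho_{k-m+1} (V_{k-1}^T \cdots V_{k-m+2}^T)\, s_{k-m+1} s_{k-m+1}^T\, (V_{k-m+2} \cdots V_{k-1}) \\
& + \cdots \\
& + \rho_{k-1} s_{k-1} s_{k-1}^T
\end{aligned}
$$

where $H_k^0$ is the initial approximation used in place of the discarded history.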
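In practice the truncated L-BFGS formula is never formed as a matrix. The standard two-loop recursion computes the product $H_k \nabla f_k$ directly from the $m$ stored pairs, in $O(mn)$ time. The sketch below is a minimal version of that textbook procedure, not code from this article. It assumes the initial matrix $H_k^0 = \gamma I$ for a scalar `gamma`, that `pairs` holds $(s_i, y_i)$ ordered from oldest to newest, and that every pair has $y_i^T s_i > 0$. The Lbfgs object name is hypothetical.

```scala
object Lbfgs {
  type Vec = Array[Double]

  def dot(a: Vec, b: Vec): Double = a.indices.map(i => a(i) * b(i)).sum

  // Returns H_k * g using only the stored pairs and H_k^0 = gamma * I.
  def twoLoop(g: Vec, pairs: Seq[(Vec, Vec)], gamma: Double): Vec = {
    val q      = g.clone()
    val rhos   = pairs.map { case (s, y) => 1.0 / dot(y, s) }
    val alphas = new Array[Double](pairs.length)
    // First loop: newest pair to oldest.
    for (i <- pairs.indices.reverse) {
      val (s, y) = pairs(i)
      alphas(i) = rhos(i) * dot(s, q)
      for (j <- q.indices) q(j) -= alphas(i) * y(j)
    }
    // Apply the initial matrix H_k^0 = gamma * I.
    val r = q.map(_ * gamma)
    // Second loop: oldest pair to newest.
    for (i <- pairs.indices) {
      val (s, y) = pairs(i)
      val beta = rhos(i) * dot(y, r)
      for (j <- r.indices) r(j) += s(j) * (alphas(i) - beta)
    }
    r
  }
}
```

When the number of stored pairs does not exceed $m$, the result equals applying the full BFGS recursion starting from $\gamma I$ and multiplying by $g$; once older pairs are dropped, it is the truncated expansion above. The search direction is then $p_k = -H_k \nabla f_k$.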